Articulated frictions for engaged presence

Weeknotes 348 by Iskander Smit

Hi all!

First of all, let me introduce myself to new readers. This newsletter is my personal weekly digest of last week's news, seen through the lens of topics that I think are worth capturing and reflecting upon: Human-AI collabs, physical AI (things and beyond), and tech and society. I always take one topic to reflect on a bit more, allowing the triggered thoughts to emerge. And I share what I noticed as potentially worthwhile things to do in the coming week.

If you'd like to know more about me, I added a bit more of my background at the end of the newsletter.

Enjoy!

What did happen last week?

The holiday season is slowly starting to unroll. We invited all participants for the design charrette, and for ThingsCon, we made plans for a new Salon that will take place on 4 September on “maintaining good intentions in the smart city”. I discussed the mapping of the Hoodbots for a research project, and attended a Rotterdam Future Society Lab session.

What did I notice last week?

Scroll down for all the notions from last week’s news, divided into human-AI partnerships, robotic performances, immersive connectedness, and tech societies. Let me choose one per category here:

- Friction as a design material for AI.

- How to train your DIY Robots

- Building AI applications for audio

- The design of AI tools demands careful consideration of intended consequences.

What triggered my thoughts?

I was triggered by a story Alexander shared on the AI Report podcast recently. He described wandering through New York with time to kill, using ChatGPT's voice mode as a companion. As he strolled, he'd tell ChatGPT what he was seeing—buildings, those iPad-like screens on doors that delivery services use—and ask for information about them. It was a continuous, contextual conversation that made his solo urban exploring more enriching.

What caught my attention wasn't this novel use case, but Alexander's complaint about what he missed: he wished these interactions were more seamless, with the AI automatically knowing his location and surroundings without him having to explain.

That is a misconception, I think. When Alexander had to tell ChatGPT where he was and what he found interesting, that "friction" kept him in control of the conversation. He chose what mattered to him. The human remained the guide.

Remove that friction, and suddenly the dynamic shifts. The environment starts talking to you rather than you talking about the environment. As I wrote in my piece (PDF) on immersive AI for the AI Report, environments that are continuously intelligent and conversation-initiating can become intrusive rather than helpful.

We've seen this pattern before. When location services first emerged with GPS and WiFi positioning, the immediate impulse was commercial: "Walk past a store, get a coupon!" But that's exactly what made many early location services feel invasive.

This same tension is playing out now with agentic AI browsers. As ChatGPT becomes a mainstream search alternative for many, companies are racing to add agentic capabilities—moving from answering questions to completing tasks for you: "Looking for flights? I'll book the best one." "Planning a vacation? Let me arrange everything."

While this is convenient for sure, there's a trap here. Internet search took years to become fully commercialized, gradually eroding the user experience. If agentic AI starts from a commercial foundation, users may reject it before it has a chance to prove its value.

It is not about the commercial part. With the introduction of immersive AI, it will be all about finding the right balance between capability and intrusion. And beyond that: how to shape that intrusion in such a way that we feel engaged with the experience, even more than we would with a perfectly convenient one.

Indy Johar wrote a very thoughtful piece on what may be yet another aspect: presence engineering. He adds that, in ‘living’ in presence, the relations we have with other entities shape a civilizational orientation. “It reclaims the idea that intelligence is not located in the isolated individual, nor in mechanical systems, but in the quality of relation: between people, technologies, ecologies, and timescales.”

It makes me think that the friction is not only providing an engaging experience, but it is also articulating the relations between humans and their environments. Or as Johar writes: “It is the design of living systems that can think, feel, and adapt together.”

So to conclude, the most valuable AI companions won't be the ones that remove all friction, but the ones that preserve the right kinds of friction—the moments where we bring our humanity, curiosity, and agency to the interaction.

What inspiring paper to share?

Is data material? Toward an environmental sociology of AI

I argue that the concept of a dual process of abstraction and extraction, commonly evoked in literature on data extraction, can help to conceptualize the materiality of extraction as a process by which reality is narrowed to a set of functional properties, while disregarding everything else. In the case of data, this process has unique dynamics that make it distinct from, yet equally material as, resource, energy, or labor extraction.

*Pieper, M. Is data material? Toward an environmental sociology of AI. AI & Soc (2025). https://doi.org/10.1007/s00146-025-02444-1*

What are the plans for the coming week?

Continue working on civic protocol economies, mapping Hoodbot stories, and other projects in the pipeline. And I am looking forward to presenting on the “immediacy of Agentic AI” in a workshop.

On Thursday, I might attend this event: A Station for Resources: a dialogue on techno-utopias and smart city imaginaries

And on 19 July, the Amsterdam Pride Walk.

References with the notions

Human-AI partnerships

In the agentic AI narrative, the focus seems to be shifting to browsers now. Perplexity introduced one last week, and others (e.g., OpenAI) will follow soon.

A bit off-topic maybe, but I like this idea of co-created products. Maybe let AI think of some designs?

I know of a podcast that created new episodes based on titles ChatGPT had hallucinated. This music platform is doing the same with a feature. Will this be a new trend, where we start planning according to the hallucinations?

Managing the context window in the AI tools as part of the conversations we build might make sense.

Veo3 is turning your photos into videos; expect crazy surreal things:

Matt is reflecting on the governance of the self-organizing vending machine

What do we lose when AI becomes the new normal? Blurring the human thought and the artificial.

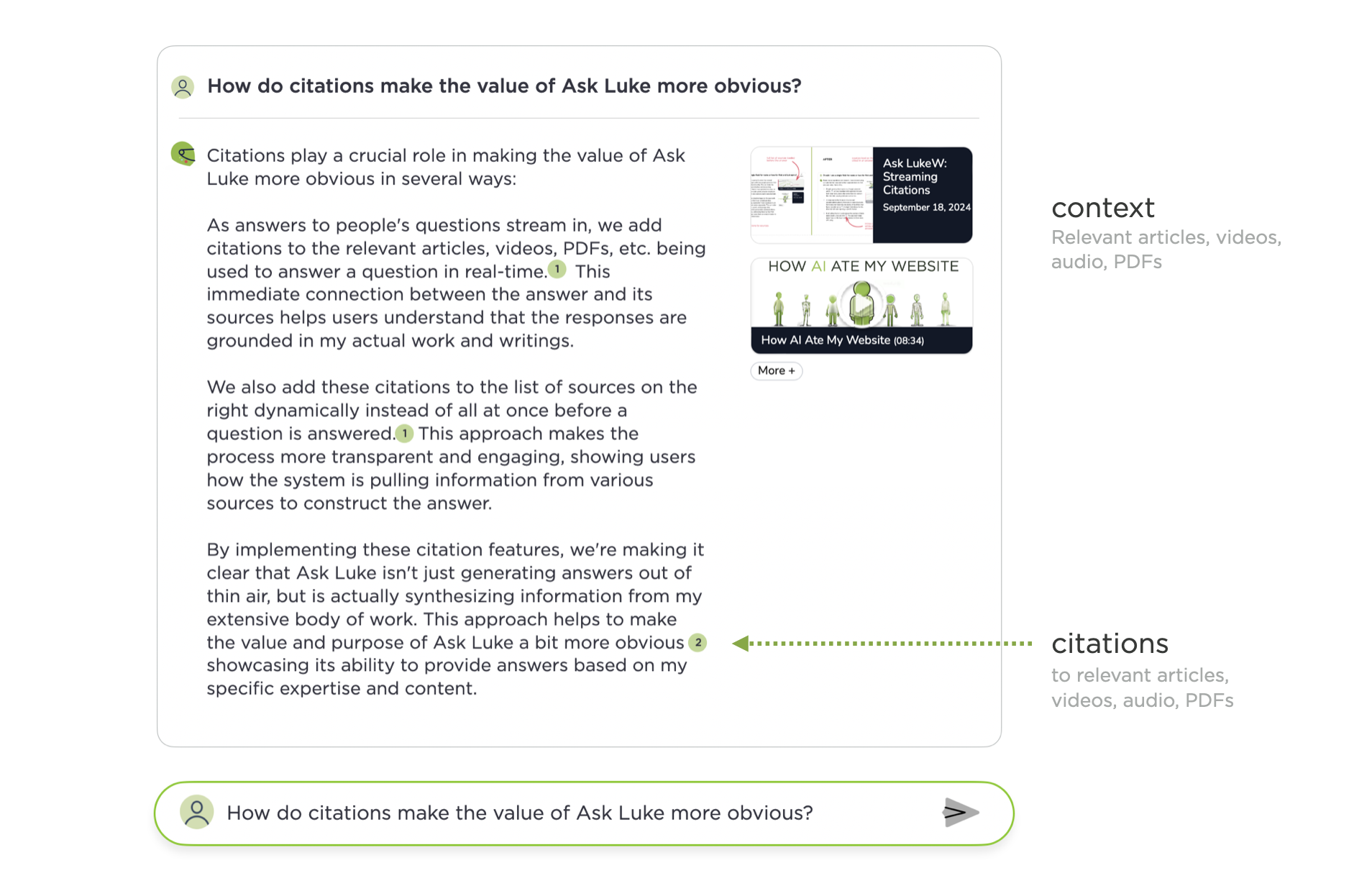

Friction as a design material for AI.

https://every.to/thesis/in-the-ai-age-making-things-difficult-is-deliberate

Ian Bogost is wondering why computers still lack intelligence

Computing consciousness is still not solved.

Robotic performances

You might wonder if this works as hospitality…

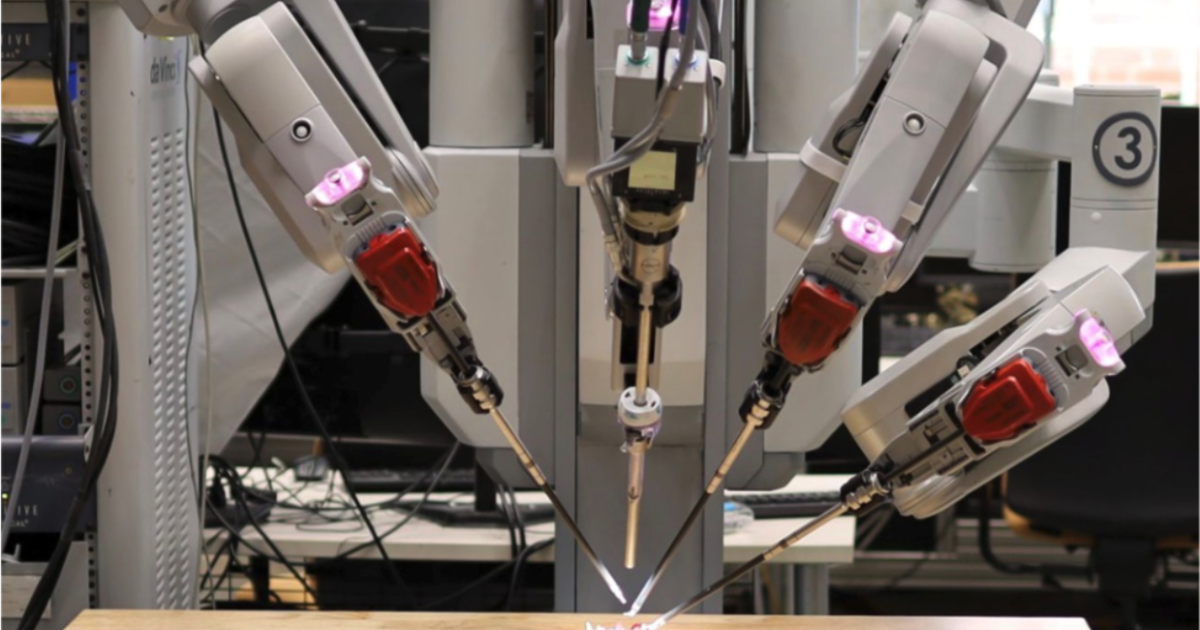

What or who will we trust our surgery to?

Predictions of future scale will always be part of technology:

Immersive connectedness

Glasses are the Meta way to go.

Building AI applications for audio

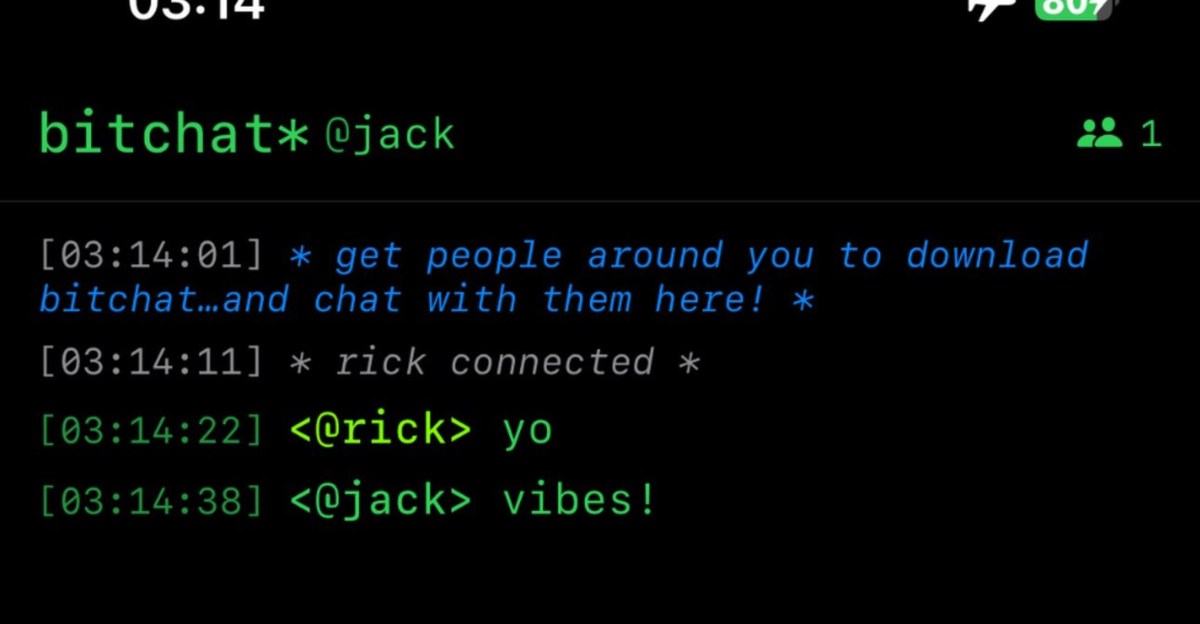

Is peer-to-peer networking the new black (revived from earlier times)?

Tech societies

Presence engineering: intelligence is located in the quality of the relation between people, technologies, ecologies, and timescales.

This whole talent war is not about the talent but about the fight between the AI giants. See the Windsurf saga.

Attention seeker Elon is reaching his goals with some problematic tweets.

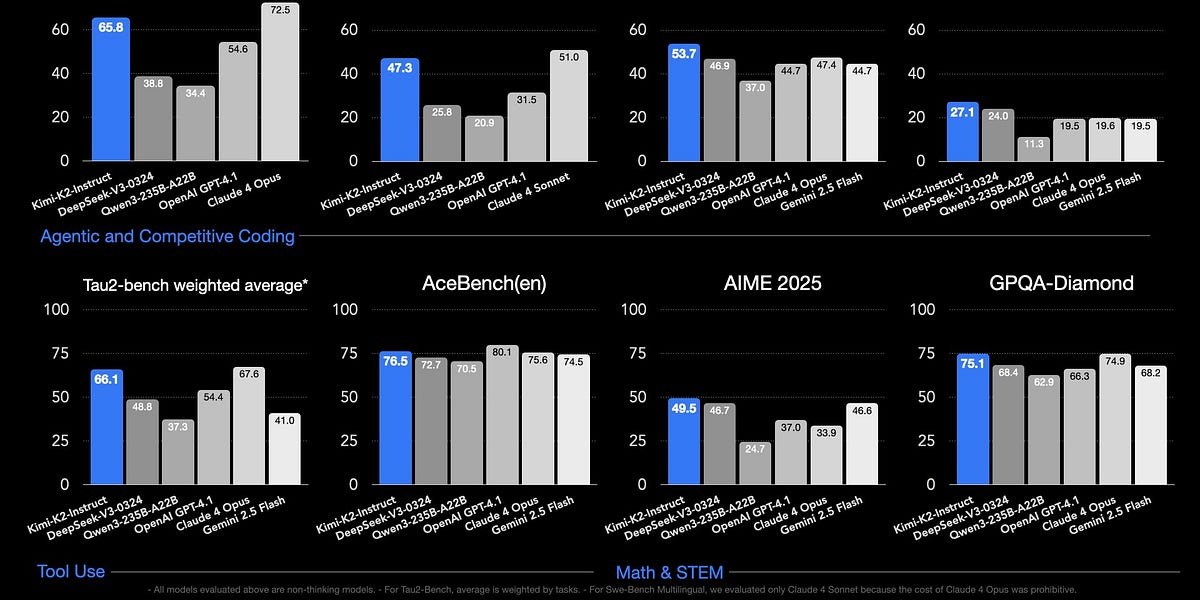

Another fast Chinese model:

The design of AI tools demands careful consideration of intended consequences.

More human-like machine vision is more energy efficient.

AI therapy bots might not be healthy.

The risk for AI companies is that they bleed out due to computing use.

AI is dismantling paywalls.

Have a great week!

About me

I'm an independent researcher, designer, curator, and “critical creative”, working on human-AI-things relationships. I am available for short or longer projects, leveraging my expertise as a critical creative director in human-AI services, as a researcher, or a curator of co-design and co-prototyping activities.

Contact me if you are looking for exploratory research into human-AI co-performances, inspirational presentations on cities of things, speculative design masterclasses, research through (co-)design into responsible AI, digital innovation strategies and advice, or civic prototyping workshops on Hoodbot and other impactful intelligent technologies.

My guiding lens is Cities of Things, a research program that started in 2018, when I was a visiting professor at TU Delft's Industrial Design faculty. Since 2022, Cities of Things has been a foundation dedicated to collaborative research and sharing knowledge. In 2014, I co-initiated the Dutch chapter of ThingsCon, a platform that connects designers and makers of responsible technology in IoT, smart cities, and physical AI.

A signature project is our 2-year program (2022-2023) with Rotterdam University and Afrikaander Wijkcooperatie, which created a civic prototyping platform that helps citizens, policymakers, and urban designers shape living with urban robots: Wijkbot.

Recently, I've been developing programs on intelligent services for vulnerable communities and contributing to the "power of design" agenda of CLICKNL. Since October 2024, I have been co-developing a new research program on Civic Protocol Economies with Martijn de Waal at Amsterdam University of Applied Sciences.