Weeknotes 298 - agenticness in prod-user symbioses

Hi, y’all!

Never a dull summer! It is troubling to see big techies connecting to Trump; it is opportunistic and in line with a kind of backlash against regulators. And the vulnerability of networks and their interdependencies showed with the CrowdStrike outage.

Here in the Netherlands, the last batch of vacation slots has started, so the weeks are becoming quieter, especially in terms of meetings and events. Last week, I attended a scientific conference, EASST-4S, and visited a couple of panels next to the one we were part of with Wijkbot. It had a nice atmosphere (and a pleasant Brutalist building), with more than 4000 attendees and numerous sessions, too many to digest. Below, I will reflect a bit on the panel Transformation in agency (in the age of machine intelligence).

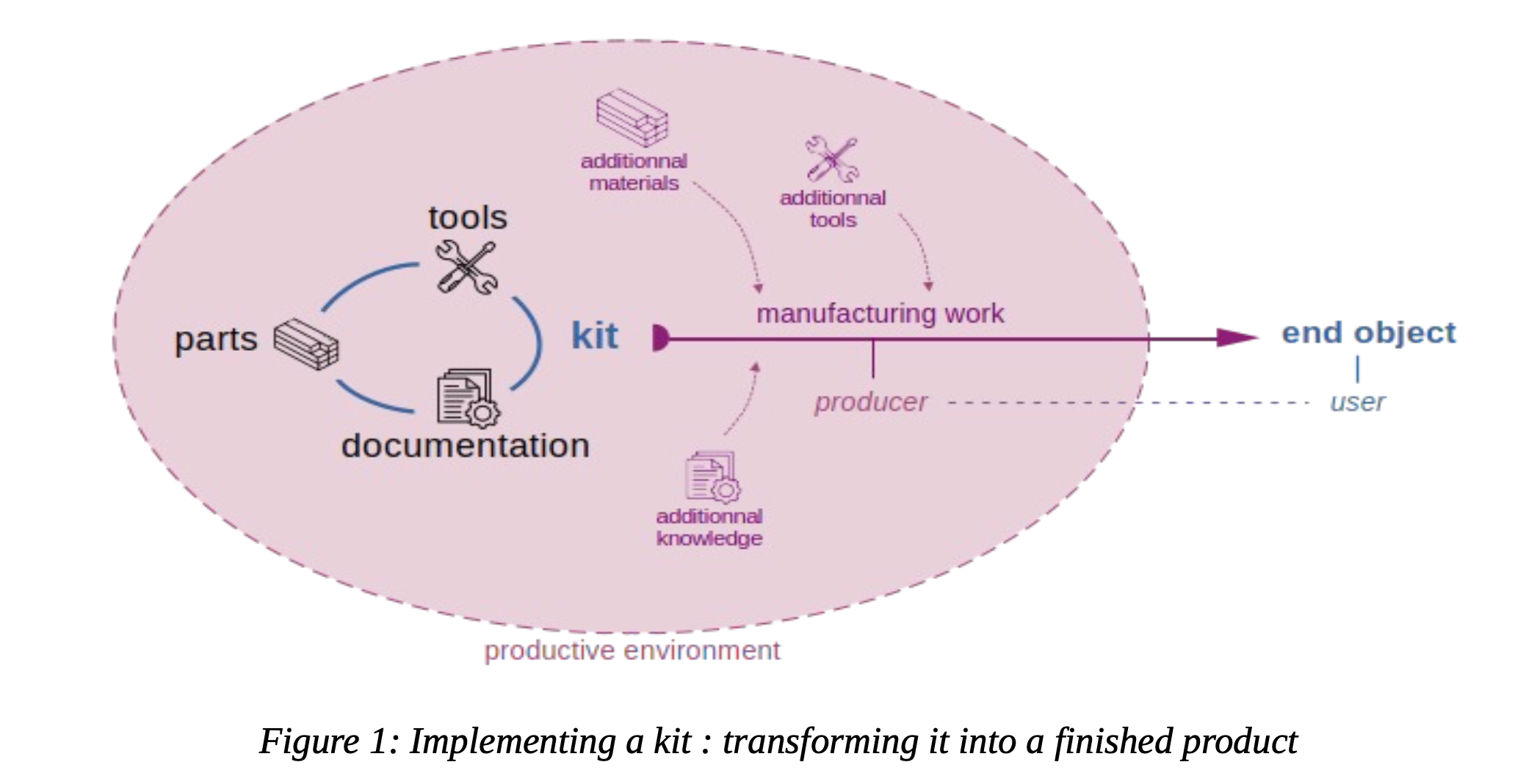

As mentioned, we presented the case of the Wijkbot in a panel on the Kit Economy, a newly coined concept that turned out to be more connected to the maker’s feelings than the description initially suggested. I think there are still open questions on the economy part.

In this diagram, the first ideas on the Kit are illustrated: a kit always consists of parts, tools, and documentation. Kits can play a role as a learning instrument or as a kind of action product. The producer and user merge into a “prod-user,” which goes further than the prosumer (producer and consumer), a more passive role. As referred to: “it brings us back to the classic STS issues of the joint development of objects and their technical and social environments (Akrich 1989).”

Kits were discussed with different types of examples. A meal kit is not a kit as it does not provide tools. Is a kit always meant to reach a defined end state like the IKEA kits? That differs, of course, from the WijkbotKit, where the outcome is undefined, and the kit is a means to an end, gaining insights for the future.

So is a kit part of a business model (IKEA), or a marketing strategy to engage more with the brand (IKEA again)? There were also examples of real open-source and collaborative working, such as the Vheliotech.

So, to conclude: as expected, the panel opened up new questions, both on the impact of context on what a kit actually is and on how it relates to economic principles. Nice to be part of the panel.

Triggered thought

As a triggered thought, I would like to refer to another session I followed at the conference: the panel Transformation in agency (in the age of machine intelligence).

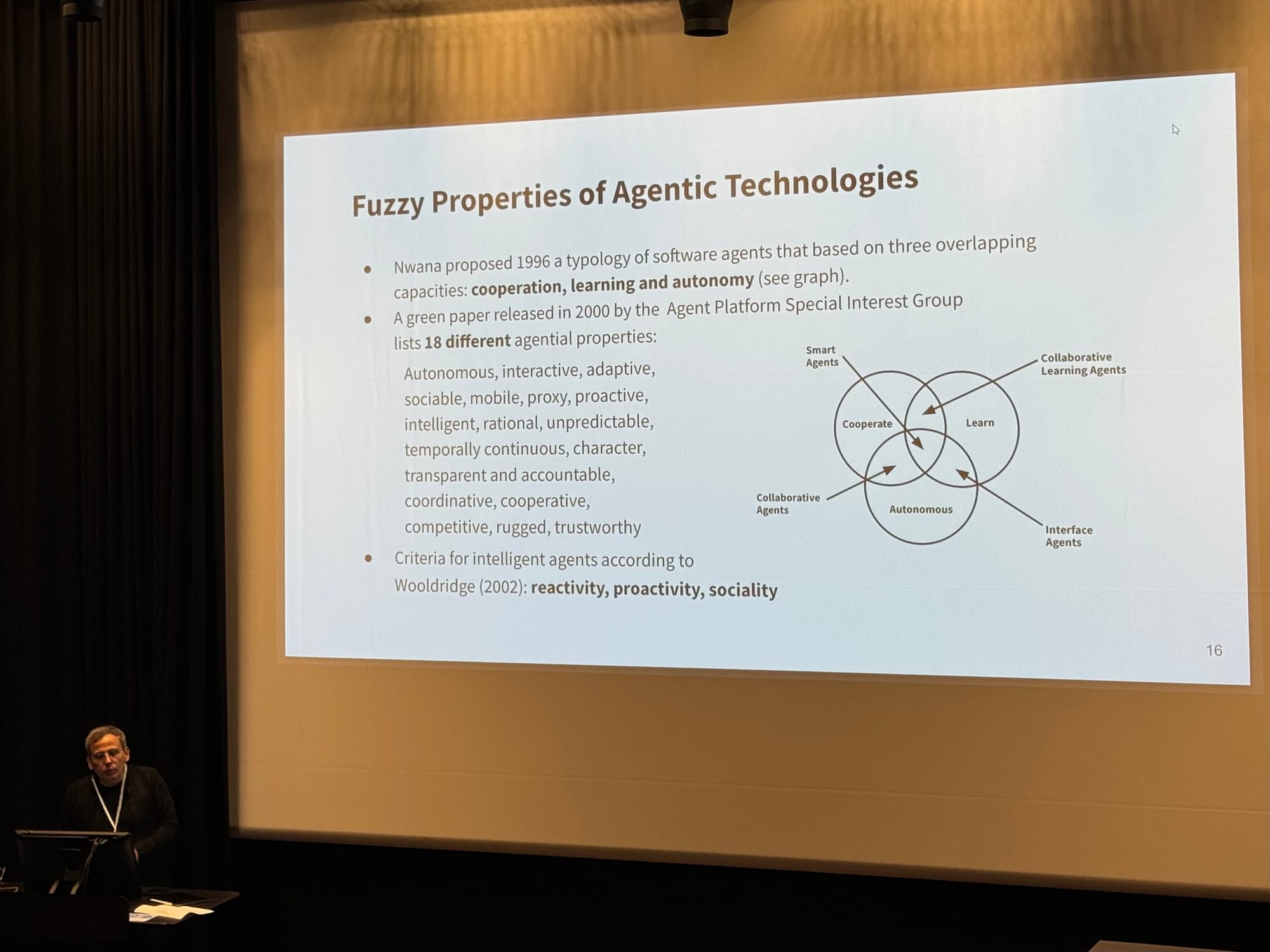

In this session, there were two parts with about 8 presentations, half of them more fundamental and conceptual and the others more applied. One theme was how worlding and world models are shaping agency. And the presentation by Markus Burkhardt (University of Siegen) dived deeper into what is in an AI agent.

Some literature: an agent is something that acts, which makes sense, and it is a rational agent if it acts to achieve the best outcome (Russell and Norvig, 2022). Criteria for intelligent agents according to Wooldridge (2002): reactivity, proactivity, sociality. Already in 1996, Nwana proposed different agent typologies; see this graph.

In the current times of LLMs, the agenticness of AI is defined as a matter of degree along four dimensions: goal complexity, environmental complexity, adaptability, and independent execution.

He built a case around the difference between ChatGPT and GPTs. Even within AI, the level of agenticness is still in development. He created a practical guide on prompting, but what I find more interesting is the question of whether we will get synthetic agency and how we relate to this.

Later, Eitan Wilf's presentation on AI and music creations gave an interesting example of an agentic piano. The concept of the continuator is continuing the human's playing. It is not inspiring or inventing anything new. Therefore, it is necessary to mix different styles, not missing out on the conversation between the human and the machine.

Circling back to the model of the kits: what if this is mixed with agenticness? What if the kit has agency? What if the exchange between producer and user participating in an agentic process becomes a symbiotic exchange of producing and using? Does the kit boost the learning and engaging experience, or does it break down the necessary agency of the user? With the kit model, the producer is opening up its intentions and creating options for adapting user agency.

For the subscribers or first-time readers (welcome!), thanks for joining! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things, and I organise ThingsCon. I call Target_is_New my practice for making sense of unpredictable futures in human-AI partnerships. That is the lens I use to capture interesting news and share a paper every week. Feel invited to reach out if you need some reflections; I might be able to help out! :-)

Notions from the news

Some new versions from AI players, small models ftw: Mistral NeMo, OpenAI's GPT-4o mini. Would it fit on the Rabbit? Probably not. :-)

At the same time, it is a continuing development that we are more and more eating our own synthetic dogfood, which challenges the training data. AI should be wary of the Winds of an AI Winter.

What is the speed of the developments? What impact will it have? Is AGI nearer, and what do we see as AGI? Is our target moving towards AEI (E for emotional)? Just some thoughts popping up.

And will AI-free platforms or services become a thing? Will it be legal to jailbreak AI as a form of forced transparency?

Human-AI partnerships

The uncanny valley is everywhere. Hyper realistic.

Rogue waves. I learn every week. Good to have AI to help us deal with them.

Helping out with predicting risks makes sense, but dimension it for better safe than sorry and for continuous learning loops with human feedback (RLHF).

I am wondering if Gemini will be able to predict the number of medals for countries and athletes…

Another great deconstruction of contemporary realities: a mapping of the landscape of gen-AI product user experiences. Very valuable if you are planning the role of gen AI in your existing service catalog, or as inspiration for opening up your mind to new opportunities.

Robotic performances

Drones are an important part of warfare now. Bit surprised they are just now getting AI-enabled capabilities.

This looks rather silly. Not just in how it looks; it also feels counterintuitive for a vacuum to have to take steps…

Incentive programs based on price make sense. If you can create a situation where all vehicles are fully self-driving (FSD), the system might become cheaper to operate.

A nice example of rethinking existing forms and functions.

Baseline studies for humanoids; good for comparison and promotion; making a market.

Making living robots remains a goal in Japan. Especially finding ways to communicate subtle gestures and facial expressions.

Immersive connectedness

This could become the theme park of the future. An immersive experience in unreachable but really existing places…

Our digital life is ephemeral by definition.

Create your own basic internet device.

Tech societies

It will be a returning topic until the US elections: how tech will enable and later enforce the dark plans of Project 2025. Especially now, with the support pledges of some big tech players that are not known for socially driven agendas (Thiel, Musk, Andreessen)…

These abbreviations… This could be a research program or group.

Meta might follow Apple in slowing down functionality in the EU.

Good for reviews too.

I need to chew on this a bit. Is AI preventing the democratizing power of amateur non-design or enlightening the ambitious amateur?

On the other hand, we have AI-enhanced art that creates compelling stories for non-compelling realities.

Having digital realities recreated in real-life objects is a returning meme. Like this building.

And a shout-out to some YouTube channels that are fostering makers of all sorts: Van Neistat is repairing, Laura Kampf is preparing for a new phase in building, there is tool-nerd The Practical Engineer, and it was super nice to hear Dries Depoorter being interviewed on his tangible art.

Paper for the week

Will we get AI dementia? In this paper, the researchers dive (technically) deep into a potential model collapse.

The curse of recursion: Training on generated data makes models forget

In this paper we consider what the future might hold. What will happen to GPT-{n} once LLMs contribute much of the language found online? We find that use of model-generated content in training causes irreversible defects in the resulting models, where tails of the original content distribution disappear. We refer to this effect as model collapse and show that it can occur in Variational Autoencoders, Gaussian Mixture Models and LLMs.

Shumailov, I., Shumaylov, Z., Zhao, Y., Gal, Y., Papernot, N., & Anderson, R. (2023). The curse of recursion: Training on generated data makes models forget. arXiv preprint arXiv:2305.17493.
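
To get a feel for the "tails disappear" claim, here is a toy sketch in Python. It is not the paper's experiments, just a minimal illustration under my own assumptions: the "model" is a single Gaussian, each generation is fitted only on a small sample drawn from the previous generation, and the function name `simulate_collapse` and its parameters are made up for this example. With small samples, the fitted spread tends to shrink generation after generation, so the rare values at the edges stop being produced.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian on its own samples, generation after generation.

    Returns the fitted standard deviation per generation; index 0 is the
    original "real data" distribution N(0, 1). Hypothetical toy setup.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0: the original data distribution
    history = [std]
    for _ in range(generations):
        # "Train" the next model only on samples from the current model.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        mean = statistics.mean(samples)
        std = statistics.pstdev(samples)  # maximum-likelihood estimate of the spread
        history.append(std)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: std = {history[generation]:.6f}")
```

The small `sample_size` is a deliberate choice to make the effect visible quickly; with larger samples the drift is slower, and real LLM training is of course far more complex than refitting one Gaussian.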

Looking forward

Not so many events are happening. Or at least, none are in my calendar, except Creative Mornings this Friday.

Save the date: on 5 September, we are organizing a ThingsCon Salon (workshop and meetup) on participatory machine learning and the role of the designer, in collaboration with the Human Values for Smarter Cities program. Find more information here.

Time to experiment a bit with the public beta on my iPad; that is always the first device in line for me to test it on. The handwritten math calculator is great, just like everyone said.

Enjoy your week!