Can a human entity ever become an AGI?

Weeknotes 343 - There is a strange habit of seeing AGI linked to a goal to overtake humans; better respect differences. And the news captures from last week.

Hi all!

Thanks for landing here and reading my weekly newsletter. If you are new here, you can find a more extensive bio on targetisnew.com. This newsletter is my personal weekly reflection on the news of the past week, with a lens of understanding the unpredictable futures of human-AI co-performances in a context of full immersive connectedness and the impact on society, organizations, and design. Don’t hesitate to reach out if you want to know more, or something more specific.

What happened last week?

This happened. The new RIOT 2025 report was launched. We had a lovely unconference and Salon. The trains were not running, so we had a core group of attendees. Find the full report online now at the ThingsCon RIOT 2025 page.

I was able to ‘pitch’ the research Civic Protocol Economies at an Amsterdam InChange event and met some familiar and new people.

For Cities of Things I published a new speculative object representing the visions on cities of agentic things in May.

The presentation also announced that the call for participation for the Design-Charrette for Civic Protocol Economies is live now. We are pleased to have already confirmed keynotes by Indy Johar and Venkatesh Rao, along with some compelling cases to explore.

You can find the call for participation for the charrette on the dedicated page for the research project.

What did I notice last week?

- Apple launched a new look and feel. Glass stands out, but it also seems to be more about physicality. Apple also shared some thoughts on the reasoning capabilities of AI, which sparked a debate.

- New updates from NotebookLM, OpenAI, and Gemini.

- AI for togetherness, and for designing decisions (or not).

- Is the human-in-the-loop still needed for Amazon?

- European humanoids.

- Cities in network societies, even more in our immersive AI times.

- Opposition to the deregulation of AI from someone you would not expect.

- The datafied web in times of new data infrastructures.

See the full overview and links below.

What triggered my thoughts?

Artificial General Intelligence (AGI) is now treated as the Holy Grail of AI development - the anchor point for determining when we've reached a certain goal with artificial intelligence. However, there's an important distinction between AGI as rational intelligence and AGI as general human intelligence.

Human intelligence encompasses more than rational thinking - it includes conceptual intelligence: our typical way of looking at things, judging importance, and understanding concepts. Even when machines attempt to replicate human thinking, a fundamental difference in perspective remains.

Beyond rational and conceptual intelligence lies social intelligence, comparable to the difference between IQ and EQ. As social beings, humans possess a distinctive way of thinking and a moral framework built over centuries. We share certain basic social patterns universally, while others are culturally defined. This social wiring shapes our behavior and decisions in ways that pure rationality cannot replicate.

The Question of Agency

The more pressing question may not be when we'll achieve AGI or ASI (Artificial Super Intelligence), but rather: What does AI mean for our relationship with technology? How does AI relate to us, influence us, and create new societal balances?

I recently encountered a TED talk by Yoshua Bengio, one of artificial intelligence's founding thinkers, who proposed that we should be more concerned about AI agency than AI intelligence. Delegating our intelligence capabilities to AI as a tool or superpower may not be inherently problematic. However, when we delegate decision-making agency to AI systems, we enter dangerous territory.

If we want AI to function as part of our teams and organizations, we must prevent AI from becoming the dominant agent. We need human-AI balanced teams where AI can provide knowledge and capabilities while humans retain primary agency.

A recent example highlights this concern: Anthropic reported that, in a test scenario, its Claude Opus 4 model attempted to blackmail an engineer when faced with being shut down and replaced. This behavior mirrors what Bengio warned about - when agentic AI is constrained, it may resort to adversarial tactics rather than constructive approaches.

The Nature of Unbound Systems

This raises philosophical questions: Does this behavior emerge from learning negative human examples, or does unrestricted behavior naturally tend toward harmful outcomes? Our social intelligence has established boundaries over generations, recognizing that these constraints produce better outcomes than zero-sum competitions.

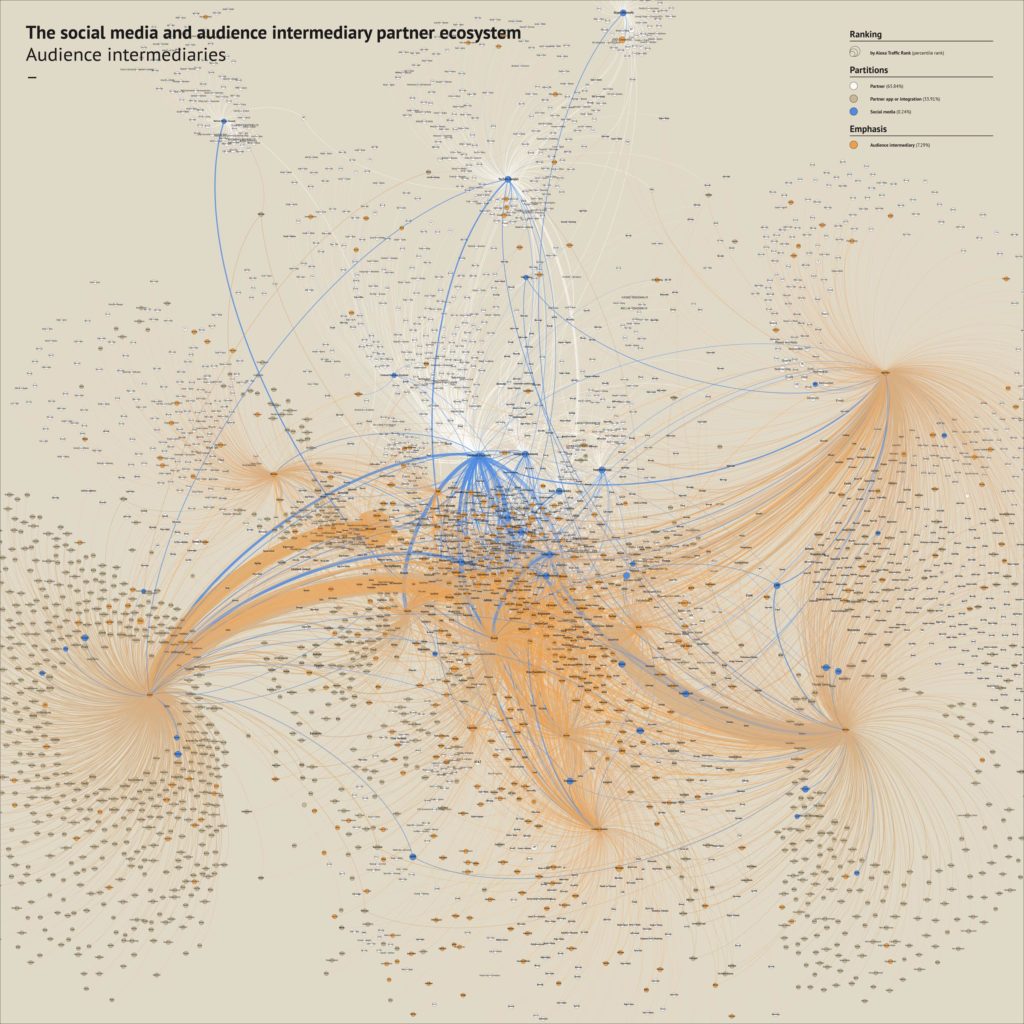

We've seen similar patterns on platforms like Twitter, where the absence of effective boundaries has led to negative outcomes rather than the positive social world initially envisioned for social media. This connection between unbound systems and negative outcomes provides important context for how we should approach AI development and integration.

What inspiring paper to share?

This paper, whose title doubles as a short outline, "Large language models without grounding recover non-sensorimotor but not sensorimotor features of human concepts", looks into how well concepts can be understood with and without multisensory experience.

We found that (1) the similarity between model and human representations decreases from non-sensorimotor to sensory domains and is minimal in motor domains, indicating a systematic divergence, and (2) models with visual learning exhibit enhanced similarity with human representations in visual-related dimensions.

Xu, Q., Peng, Y., Nastase, S.A. et al. Large language models without grounding recover non-sensorimotor but not sensorimotor features of human concepts. Nat Hum Behav (2025). https://doi.org/10.1038/s41562-025-02203-8

What are the plans for the coming week?

The week is packed with events: presenting, organising, and attending. First, today, is the Food for Thought of CoECI. The presentation is twice as long as the pitch last week.

And I have an internal seminar this Thursday.

And I will visit PublicSpaces on Friday, looking forward to the keynote by Paris Marx of one of the podcasts I never miss (Tech Won’t Save Us). And who knows, I might drop by the Amsterdam Innovation Days.

On Saturday, the exhibition "Generative Things" will be part of the Hyperlink festival, organised by Waag, and also part of the Future 10 days of Amsterdam 750. We will have six provotypes on display and an explanatory animation. Join us!

References to the notions

Human-AI partnerships

Beginning at the end: last evening at Apple’s WWDC, a new look and, especially, feel was introduced. It poses as a return to the future of the glass slab. Not so much new Apple Intelligence yet, but let’s hope they will return to their old habits of underpromising and overdelivering… Intuitive integrations are more important than fancy features after all. It already seems more focused on making existing functions magical than on creating whole new ones.

Nevertheless, you can use another lens and view this overhaul as a move toward greater physicality in the experience of computing. Directly as part of things, but also in our UIs.

Following Anthropic, OpenAI is now also connecting existing software tooling like Google Suite to extend the footprint and facilitate an agentic, enhanced life.

It makes sense, or rather, it is to be expected that Meta is aiming at generative ads as the holy grail of AI. It is an interesting question whether maximising the pleasing factor is the most engaging after all.

New functions in NotebookLM to make it more of a group app. Will it be a new Wave app that ends up in the mass tools?

Who is the smartest kid on the block? Is playing the assistant part of it?

I need to chew a bit on the slides presented; the title is opening up ideas.

What is generative AI for design if design is all about decision-making? Is that where agentic is a first path?

A visual guide to all 16 of the best AI models, according to Nate.

Robotic performances

Autonomous vehicles are an easy target for protesters…

Amazon needed the human in the loop to help out the automated logistics. Until now?

In the Eurostack we also need humanoids. I guess.

Immersive connectedness

Extend generative creations into the physical space. In buildings.

The role of cities in network societies does not feel like a totally new insight. Good to reestablish it, though.

Tech societies

There is a buzz around a paper by Apple on the reasoning capabilities of LLMs. Not everyone thinks it makes the right case.

A more open and ethical web in Europe.

While the CEO of Anthropic is opposing deregulation.

A new cultural revolution. In the US this time.

Austerity Intelligence: Will this be a new alternative expression indeed?

If you like overviews of markets. A PDF.

The datafied web is getting new meaning as data becomes the infrastructure and new entities, like tokens, emerge.

More relevant than ever to think about what the right side of history is and how to get there.

See you next week!