Chilling effects of AI as an exoskeleton

Weeknotes 332: Will we have AI as a mental exoskeleton? If so, will it crumple our thinking capabilities, or can we ensure it becomes a personal mental coach? Triggered by news from last week, alongside multiple other topics captured.

Hi all!

How did last week feel? It was a good week of work on several projects, preparing events, and attending one or two. And there was the continuous restlessness caused by outside factors. I am not someone who often joins big demonstrations, but last Saturday I joined the walk against rising fascism and racism, especially to voice my worries about limiting diversity, breaking down platforms and institutions for critical thinking, and creating a society of self-interest and hate toward others. We can build on collective power and plurality. Tech can help, but it has been a double-edged sword, too. To circle back to the frame of this newsletter: design has the potential to shape a fruitful framework for collectivity and respect. I try to bring this into the projects I do and plan to do. Read on for more about what is happening.

What did happen last week?

For the running projects, I am completing the report on Civic Protocol Economies, speaking to the designer about the final touches, and preparing the next phase. I also looked into the upcoming program of the Summer of Protocols and watched their town hall meeting, which added a lot to my reading list again.

The AI-report podcast (the renamed POKI podcast; the new name is a bit generic, too bad) has quietly grown into a 2.5-hour podcast. One of the topics covered last week was about generative things without naming it as such: vibe making, with tools like Lovable.dev, Bolt.new, and good old Replit.com. These tools produce apps rather than things, but you can feel that vibe-making physical things is around the corner…

The exhibition Generative Things will get a Salon and a Speculative Design Workshop in April. The Meetups are up and running now.

For the RIOT 2025 publication, we received almost 20 article entries and have a partner for the unconference to launch the publication: 6 June in VONK Rotterdam. I expect to share more progress next week.

I am working on some proposals for the continued development of ThingsCon and Cities of Things. I hope that works out.

What did I notice last week?

Of course, you could not avoid Adolescence. The making-of and reflective posts are as intense as the series itself. This is not really the place to discuss it. Still, it is super interesting how it can unlock such global (North?) engagement, both through its topic and through the choice to shoot each episode in one take, which adds an extra intensifying experience. The making of the series becomes part of an intense, immersive experience. It contrasts with the rest of media, which tends to be hyper-synthesized and fake, so this offers a form of ‘live’ feeling that we apparently need. Will this be a one-off, or a new format for these times?

Ok, that was more than I intended to say about it… Back to the ‘usual’ AI stuff.

- Continuous stories on Apple Intelligence panic with management changes,

- with vibe coding attention,

- with Google visual coding upgrades,

- with Claude doing web-search,

- and with Nvidia stealing the show with a long but rich keynote full of new introductions, ending with their robot-empowering heart-ware illustrated by a cute Disney creature.

- Next to that, some more minor news. The robots are coming from China, with Zeekr cars offering level 3 driving, but especially with endless (Reels/TikTok) videos of a Chinese humanoid doing breakdance moves and kung fu, potentially making Americans nervous. It is a compelling item for Fox News, at least.

- Luckily, some reflective posts, as always. Like thinking about our relation to AGI and what we should aim for there. Check my triggered thoughts below.

- Heavy use of ChatGPT is not good for your mental health, a study by OpenAI and MIT suggests.

What triggered my thoughts?

What will the role of AI be as a potential "exoskeleton" for human intelligence? A mental exoskeleton. Imagine a future where we all have our own AI exoskeleton: an always-on, always-available augmented intelligence that assists us with various tasks. This represents the typical dream for Artificial General Intelligence (AGI), but is this what works best for us?

The Calculator Dilemma

We might face "the calculator effect." Are we unlearning essential skills if we rely on calculators instead of mental math? Or are we evolving to use better tools, making ourselves more capable overall? The same question applies to having augmented intelligence always available. Could our future resemble the one depicted in the movie "WALL-E," where humans became sedentary, constantly consuming media, growing physically unhealthy and disconnected from themselves?

A recent podcast discussed an alternative approach: AI helpers focused on specific moments when they are truly needed. In this model, we deliberately activate this extra intelligent layer for temporary tasks and then switch it off. We remain aware of what capabilities we are adding to ourselves and potentially contributing to the intelligence. This connects to topics from previous newsletters about balancing human and AI capabilities in co-performance, leveraging each other's unique strengths. The key questions become: When do we switch AI on or off? What happens when we use a more focused version for specific tasks? So, incidental support seems preferable. However, another scenario is imaginable: AI could be designed to maximize our intelligence by stimulating our creativity and uniquely human skills, keeping us actively engaged rather than passive. A strange comparison, maybe, but think of the different setups of hybrid cars. Some use the internal combustion engine (ICE) as the main engine and switch on the electric motor separately when needed to lower fuel consumption. In other concepts, the electric motor is the main engine, and the ICE only serves as a generator for electricity.

The "Chilling Effect"

Apart from the setup, it is even more important to think about the impact on ourselves. Consider the so-called "chilling effect" that might arise when we are extended with an intelligence that exceeds our own in certain ways, because it has general access to everything we can never access. How do we operate in a world where we are constantly aware of being near something smarter than us? This "chilling effect" creates a continuous feeling of being around an entity that knows more than you. Although no one forces you to adapt to it, you feel compelled to do so without fully understanding what you are adapting to. This can lead to stress or burnout. It also leads directly back to the question: do we want AGI that is always smarter and always present, or do we prefer focused AI that excels at specific tasks we deliberately select? Do we understand why and how it is smarter than us in those areas? That offers a different lens on transparency about AI black boxes. And it challenges us, as human-AI designers, to build the right friction and honesty into the AI exoskeleton, so that people feel recognized and in (self-)control.

What inspiring paper to share?

Let me share a slightly different paper: a research report on the “Representation of BBC News content in AI Assistants”.

To better understand the news related output from AI assistants we undertook research into four prominent, publicly available AI assistants – OpenAI’s ChatGPT; Microsoft’s Copilot; Google’s Gemini; and Perplexity. We wanted to know whether they provided accurate responses to questions about the news; and if their answers faithfully represented BBC news stories used as sources.

https://www.bbc.co.uk/aboutthebbc/documents/bbc-research-into-ai-assistants.pdf (via newsletter Elger)

It may be interesting to combine this with “Governance of Generative AI”, released around the same time, which looks a bit more under the hood.

This introductory article lays the foundation for understanding generative AI and underscores its key risks, including hallucination, jailbreaking, data training and validation issues, sensitive information leakage, opacity, control challenges, and design and implementation risks. It then examines the governance challenges of generative AI, such as data governance, intellectual property concerns, bias amplification, privacy violations, misinformation, fraud, societal impacts, power imbalances, limited public engagement, public sector challenges, and the need for international cooperation.

Araz Taeihagh, Governance of Generative AI, Policy and Society, 2025, puaf001, https://doi.org/10.1093/polsoc/puaf001

What are the plans for the coming week?

There is more to do for the projects I mentioned above, including preparing for the Salon and the Speculative Design Workshop, and I need to decide before the end of the week whether I will submit a proposal. With Tomasz, we are also running a workshop at Smart & Social Fest on designing a Wijkbot-inspired AI-Labkar, and I need to write an article for RIOT myself too. I will start outlining it this week…

Responsible AI is another hot topic. A large program in this area is RAAIT, and there is a conference this Thursday that I will attend. I'll let you know next week how it was. Fresh research colleague Martijn Arets is doing a webinar on ghost workers this Friday, and I hope to be able to check in at Ordinary Democracy and Digital Cities in Detroit. Finally, I will probably check out the New Current.

See you next week!

References with the notions

I try to avoid the weekly Trump trolling, as it is too cynical (and harmful).

Human-AI partnerships

Apple Intelligence issues lead to reshuffling management.

OpenAI is reshuffling leadership, too, apparently so that Sam can focus more on tech. The why will be discussed later, I guess. Earlier last week, in an interview with Ben Thompson, he was still talking business models, while the impact on well-being is also a relevant topic to address.

Anthropic is doing web search now.

Externalizing memory strategies.

The holy grail of predictions is… the weather. So once in a while, AI promises breakthroughs.

How long do we want AI to do tasks without human intervention? Reframing the question a bit…

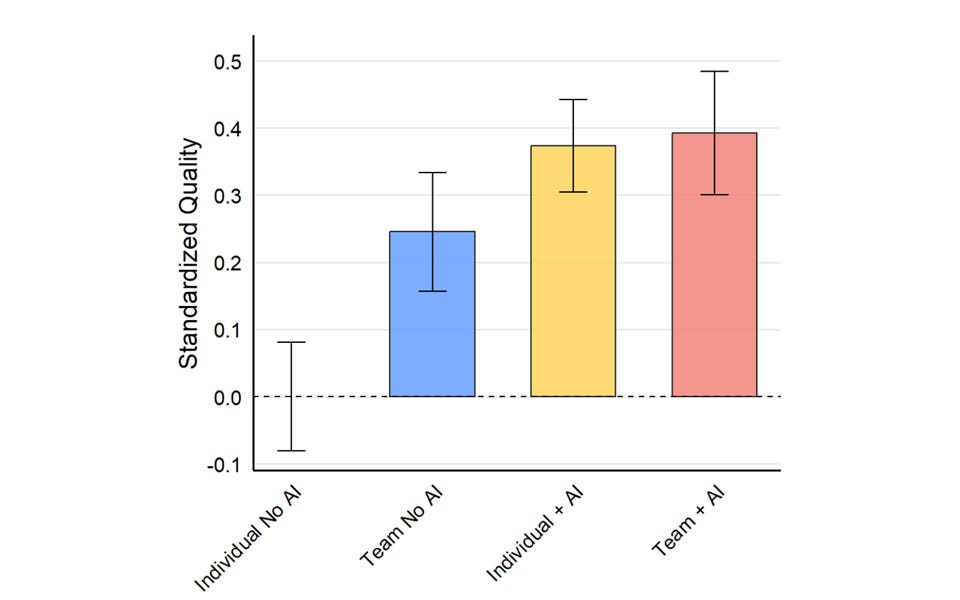

The weekly insightful post by Ethan Mollick, this week on the cybernetic teammate.

The workflow Matt is describing resembles mine for writing the weekly thoughts above. I use Lex instead of Diane though.

Robotic performances

Nvidia is empowering robotic empathy

Level 3 self-driving from China

Robotic overkill

Chinese humanoids doing tricks.

Immersive connectedness

SpatialLM is the next thing. Some say Apple Watches will get cameras to access AI functions. Google has already launched a real-time capture mode. Combine this with SpatialLM, too…

I loved my first smartwatch, the Pebble, but I don't think I am going back now.

Makes sense for a connected devices company. All sensors are cameras in the future.

Tech societies

Handing over control to agents, or better not?

What is open in the AI domain? How will that play out in agent realities?

Will job replacement be there sooner than you think?

If you are into (modern) AI history, check out the source code for AlexNet from 2012.

When the future looks back on AI, the Character.AI case will have its place.

This week’s take on vibe coding: DIY apps.

Describing living things as machines dismisses their complexity.

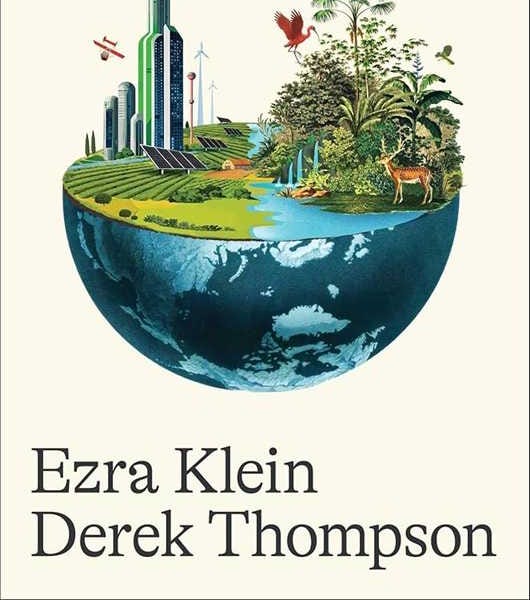

The concept of abundance in relation to technology reminds me of the attention given to exponential organizations, etc. The authors make me curious, though.