Compressing time as a trigger for different co-performances

Weeknotes 339 - Compressing time as a trigger for different co-performances. Thoughts on compressing time and our impact, next to more news.

Hi all!

Thanks for landing here and reading my weekly newsletter. If you are new here, you can find a more extended bio on targetisnew.com. This newsletter is my personal weekly reflection on the news of the past week, with a lens of understanding the unpredictable futures of human-AI co-performances in a context of full immersive connectedness and the impact on society, organizations, and design. Don’t hesitate to reach out if you want to know more, or something more specific.

What happened last week?

The new pope chose his name partly because he sees his papacy as a time of social change, comparable to an industrial revolution. He refers to the developments in AI. That is indeed, even more than that other industrial revolution, challenging fate and religion. The new spirituality…

I have this other newsletter, a Substack, for Cities of Things. Every month I do a little exercise and/or experiment to synthesize these newsletters of the past month into a speculative object, using the Design Fiction Work Kit of Near Future Laboratory. The exercise is to do a rapid speculative design session, and the experiment is to do it in close collab with Claude (or any other flavour of the month). Check out the latest for April here.

Other things from last week were linked to the freshly published research report on Civic Protocol Economies, with some related meetings for the design charrette.

Planning for the ThingsCon unconference on 6 June and reading the drafts of the articles for RIOT 2025.

On Friday, we were guests at Maker Faire Delft, at the stand of Stadslab Rotterdam. Two types of wijkbot were presented: a first version of a Labkar, and a traditional workshop kit that was popular for building vehicles. Find more at Wijkbotsite-post.

What did I notice last week?

Scroll down for another week full of news on human-AI partnerships, robotic performances, immersive connectedness, and the impact on tech societies.

- Is AI your friend? A useful pal for sure.

- Indy is exploring the change in designing goals in multi-agent complex systems.

- Would you use an AI builder for Lego models? Feels like missing a point.

- Not training on your behavior becomes a brand asset.

- A new dash to distinguish our writing from AI. Am dash.

- It's too bad I missed his talk at AIxDesign.

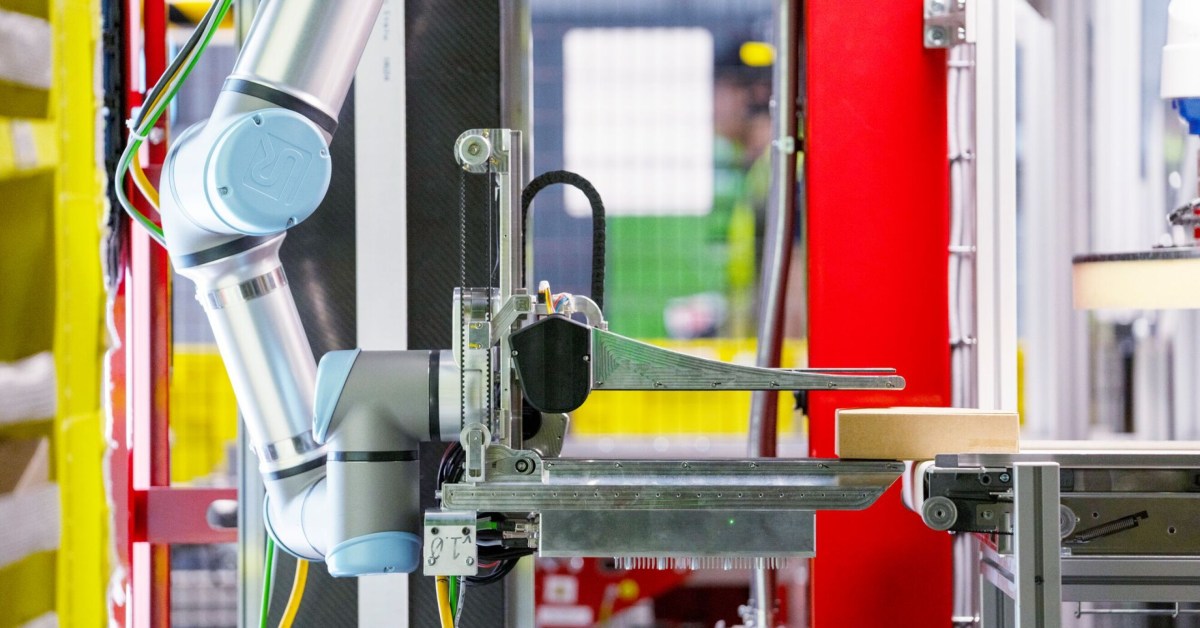

- Some new forms of robotic behavior and roles within the Amazon fulfillment centers, by improving the capabilities.

- Is this robot going nuts, or is it doing a new type of dance?

- Cheap robot pet. Edible robots. Good for recycling.

- This feels like a fit of the future; things that are taking initiative.

- Personal robotic devices, is this a first weak signal or a gimmick?

- I'm not sure how to read this: The partnership between IKEA and Sonos is ending.

- Is there finally a step forward with the Matter standard?

- It's Meta glasses after all. Apple is sourcing rumors about its own counter glasses.

- Never take off your headphones anymore.

- Eddy Cue is testifying in the Google Search antitrust case and mentioning that Google Search is dropping for the first time. Stirring commotion with analysts. Both Google and Apple make about 25% of their profit from kick-back fees of Google searches.

- OpenAI buys Windsurf, an AI code assistant, as part of the new battle for vibe code tools. Figma is announcing new tools, too.

- Creating your own market for new products.

- AI is a strategic goal for every company. And Amsterdam built its own (trusted) chatbot for civil servants to start with.

- The Venice Biennale architecture addresses some of the core themes, I would say: Intelligens. Natural. Artificial. Collective.

- The need for rituals in societies is built on ephemeral experiences.

- Four bad AI futures, according to Brian Merchant.

- AI is the domain of new geopolitics.

- Connect this to the AI draining.

- Future labs are happening. The UN has one exploring the Global South, and the EU has a Futures Garden.

What triggered my thoughts?

What I'd like to address today is the compression of time—or the influence of time on time—through AI and AI tools. Several interesting thoughts have emerged on this topic recently.

Two weeks ago, during a speculative design workshop at ThingsCon, one group discussed this very timeframe phenomenon. AI tools that become integrated into our lives are creating a different relationship with time. Tasks that might have taken two hours in pre-internet times were reduced to two minutes in the internet era, and now we're approaching two seconds. While this may be a bold simplification, it captures the feeling of how we're eliminating slack from our work processes and discovering new efficiencies along the way.

Another perspective came from one of the AI ‘readers’ I follow, Nate B. Jones. This weekend, he discussed what he calls "compressed time"—not primarily about how humans gain time through AI, but about a fundamentally different way of experiencing time. As Jones puts it: "for humans it feels like time is getting short because there is so much work to do, while for AI it feels like work is getting compressed in because there is so much more compute, and time is therefore expanding."

We humans have a relative notion of time. When we sleep, we skip hours. When we're younger, time moves slower; when older, faster. Our perception makes time relative. AI, however, experiences time linearly. The mismatch occurs when AI performs tasks similar to human activities, creating a disconnect between how we and generative AI process temporal experiences.

This connects to how much time it traditionally took us to understand concepts or conduct deep research versus how quickly AI performs similar functions. The vast difference forces us to develop new approaches to working with these tools.

Jones also mentions Jim Fan from Nvidia, who is proposing a physical Turing test—a concept that remains distant from current capabilities. However, this gap might be bridged through simulation environments created to facilitate AI learning in compressed time. These environments allow AI agents to develop and evolve at accelerated rates, creating a different intent over time. This further illustrates the temporal disconnect between human and AI experience, while simultaneously pointing toward potential synergies in our relationship with these systems.

This brings me to a related thought from Emmett Shear's talk at Summer of Protocols. He describes how the traditional alignment protocol stack starts with formulating shared success, then evaluates different specializations, and enforces only as the last step. With AI, however, we have inverted this approach: enforcement has become the point of departure for achieving alignment between AI and humans. Shear suggests we need to return to designing from a foundation of shared success and thoughtfully dividing tasks. This is precisely what co-performance is about: creating collaborative relationships where both human and AI strengths are recognized and leveraged.

When considering time perception differences, the question becomes: how do we create effective co-performance? Rather than fighting these different time concepts or trying to make AI mimic our temporal experience, we should seek the optimal distribution, delegation, and collaboration.

This feels more like an introduction than a conclusion, but it raises important questions about co-performance between humans and our AI supporting tools. As we increasingly live alongside generative things (as mentioned previously in this newsletter), we must develop relationships that acknowledge our different timeframes.

How can we foster collaboration without trying to change each other's fundamental nature? Humans still possess qualities distinct from machines—though this might change in a decade or two. For now, how do we create genuine co-performances that leverage the strengths of both? More specifically, how do we shape co-performance related to these divergent concepts of time—compressed time, experienced time—in the human-AI collaboration?

What inspiring paper to share?

The paper by Angela Mackey et al. from last CHI (and also related to the research group at HvA) is looking into how we might relate to more-than-human worlds.

What Comes After Noticing?: Reflections on Noticing Solar Energy and What Came Next

In particular, the technique of “noticing” has been explored as a way of intentionally opening a designer's awareness to more-than-human worlds. In this paper we present autoethnographic accounts of our own efforts to notice solar energy. Through two studies we reflect on the transformative potential of noticing the more-than-human, and the difficulties in trying to sustain this change in oneself and one's practice.

Mackey, A., McCallum, D. N., Tomico, O., & de Waal, M. (2025, April). What Comes After Noticing?: Reflections on Noticing Solar Energy and What Came Next. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (pp. 1-14).

What are the plans for the coming week?

In random order: Civic Protocol Economies, RIOT 2025, Field lab strategies, Responsible AI masterclass planning, first debrief team Makerslab minor.

And sessions on the curse of big tech, ethical dilemmas, and AI.

See you next week!

References with the notions

Human-AI partnerships

Indy is exploring the change in designing goals in multi-agent complex systems.

Is AI your friend?

A useful pal for sure.

Would you use an AI builder for Lego models? Feels like missing a point.

Not training on your behavior becomes a brand asset.

A new dash to distinguish our writing from AI. Am dash. (via Sentiers).

Too bad I had to miss his talk at AIxDesign.

Robotic performances

Some new forms of robotic behavior and roles within the Amazon fulfillment centers, by improving the capabilities.

Is this robot going nuts, or is it doing a new type of dance?

Footage claimed to show a Unitree H1 (Full-Size Universal Humanoid Robot) going berserk, nearly injuring two workers, after a coding error last week at a testing facility in China. pic.twitter.com/lBcw4tPEpb

— OSINTdefender (@sentdefender) May 4, 2025

Cheap robot pet

Edible robots. Good for recycling.

This feels like a fit of the future; things that are taking initiative.

Personal robotic devices, is this a first weak signal or a gimmick?

Immersive connectedness

Not sure how to read this: the partnership between IKEA and Sonos is ending.

Is there finally a step forward with the Matter standard?

It's Meta glasses after all. Apple is sourcing rumors about its own counter glasses.

Never take off your headphones anymore.

Tech societies

Eddy Cue is testifying in the Google Search antitrust case and mentioning that Google Search is dropping for the first time. Stirring commotion with analysts. Both Google and Apple make about 25% of their profit from kick-back fees of Google searches.

OpenAI buys Windsurf, an AI code assistant, as part of the new battle for vibe code tools. Figma is announcing new tools too.

Creating your own market.

AI is a strategic goal for every company. And Amsterdam built its own (trusted) chatbot for civil servants to start with.

https://www.wsj.com/articles/johnson-johnson-pivots-its-ai-strategy-a9d0631f

The Venice Biennale architecture addresses some of the core themes, I would say: Intelligens. Natural. Artificial. Collective.

The need for rituals in societies is built on ephemeral experiences.

Not sure about this.

Quite a lengthy manifesto.

Four bad AI futures, according to Brian Merchant.

AI is the domain of new geopolitics.

Future labs are happening. The UN has one exploring the Global South, and the EU has a Futures Garden.

https://un-futureslab.org/stiforum2025-isc/