Edge Surveillance and Civic Resistance

Weeknotes 344 - This week's thoughts are triggered by talks at PublicSpaces and by news on the development of edge AI, which opens up new chapters in surveillance. And other news as well.

Hi all!

Thanks for landing here and reading my weekly newsletter. If you're new here, an extra welcome! You can expect a personal weekly reflection on the news of the past week, with a lens that understands the futures of human-ai co-performances in a context of full immersive connectedness and their impact on society, organizations, and design. Let me know if I can help make sense of a particular topic. Read more on how.

What happened last week?

Yoohoo! Last week was a celebration, as it was 20 years ago that I started blogging via targetisnew.com. This weeknotes ritual is therefore just part of that period 🙂

Last week was busy with presentations for HvA colleagues introducing the Civic Protocol Economies research and an interactive session to discuss the format of the design charrette.

Speaking of the latter, the call for participation is open. First deadline 6th July. Find more information here.

I attended the PublicSpaces conference, which featured some interesting talks, panels, and workshops. Check some reflections below.

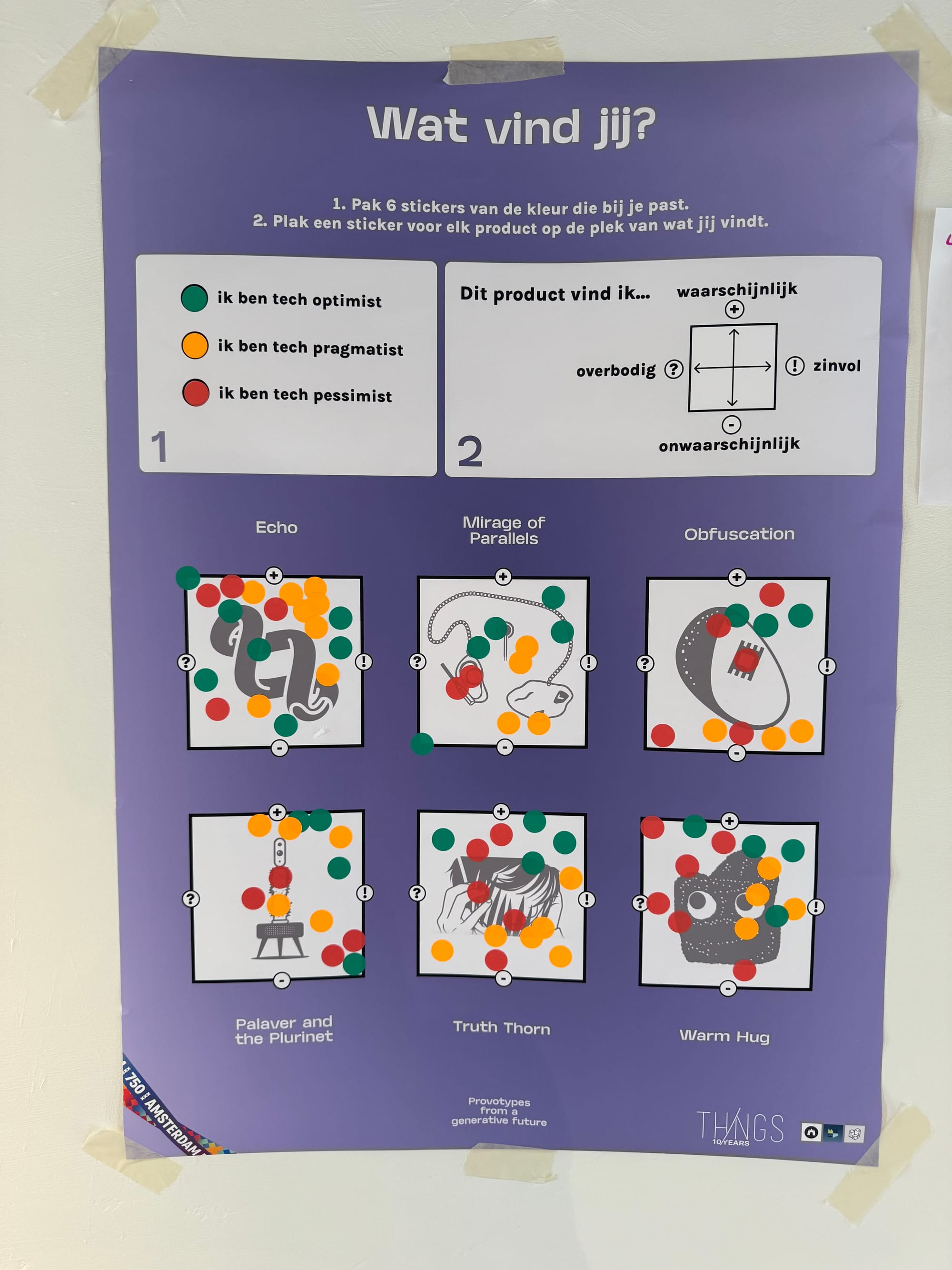

And part of PublicSpaces was the Hyperlink event on Saturday, where the ThingsCon exhibition on Generative Things was embedded. Many people passed by, and some provided feedback. Check this animation to learn more about the projects.

What did I notice last week?

- The day (and week) after the Liquid Glass introduction brought plenty of opinions: is it a distraction, unnecessary, or a new archetype for our mobile life?

- A new blog post on gentle singularity timelines by Sam Altman unlocked different viewpoints. The paper by Apple on the inability of AI models to reason keeps triggering new opinions.

- How will OpenAI (and Google) respond to the acquisition of Scale AI?

- Find out what an AI browser is. Beyond AI overviews in Google, that is.

- And more on how to deal with continuous AI.

- Both Google and Apple are silently updating their connected home platforms.

- The role of the media in how the consequences of AI are perceived, and the power use of AI.

And much more.

What triggered my thoughts?

Recent protests in Los Angeles targeting Waymo autonomous vehicles have sparked a fascinating discussion around technology, surveillance, and resistance. As I listened to Paris Marx and Brian Merchant on the System Crash podcast analyze these incidents, I found myself contemplating the deeper implications beyond mere property destruction. The protesters didn't randomly select these vehicles. They targeted Waymo cars as symbols of Big Tech's entanglement with governmental power. While Google (Waymo's parent company) maintains relationships with the administration, the danger isn't necessarily intentional collusion but rather how easily the vast data collected by these vehicles could be subpoenaed and absorbed into existing surveillance infrastructure.

At first glance, one might ask: with extensive surveillance already embedded in our urban environments, what significant threat do these roaming vehicles pose? The answer lies in the qualitative difference of data collection. Unlike static cameras, autonomous vehicles gather dynamic, contextual information—identifying not just faces but patterns, relationships, and movements. Their mobility creates a different kind of surveillance net, one potentially more invasive than traditional methods.

These protests function on a second level as well—as messages to the tech-savvy, middle-class users of such services who might otherwise remain uncritical of technology's societal implications. By disrupting the comfortable relationship between privileged consumers and their tech conveniences, protesters may spark awareness among those who have the power to influence change within the industry itself, similar to how the Tech Workers Coalition advocates for more just and ethical products.

Rather than merely expressing opposition to Waymo specifically, these actions signal a broader warning about the intensifying relationship between government and Big Tech. This relationship could evolve into "edge surveillance": an evolution beyond traditional surveillance, where intelligence increasingly operates at the endpoints of our technological ecosystem. There it is not only used for the ‘traditional’ visual sensing and selecting of images, but may also take an active role in interpreting the intentions behind the situations it captures.

At last week's PublicSpaces conference, Paris Marx was a keynote speaker and expanded on this theme, presenting two essential pillars for addressing Big Tech's dominance: regulation and building alternatives. The regulatory pillar involves not only limiting data collection and improving labor conditions, but also challenging the false dichotomy between innovation and regulation. The real question isn't whether innovation should happen, but what kind of innovation we should prioritize.

The second pillar emphasizes building something new: state-led investment in alternative technology infrastructure, public technology centers rooted in communities (similar to libraries), and international alliances developing global approaches that prioritize the public good over shareholder value. This vision represents a fundamental shift from our current trajectory toward what the "Generative Things" exhibition at the conference explored—an environment where AI becomes ambient, omnipresent, and increasingly embedded at the edge of our technological systems.

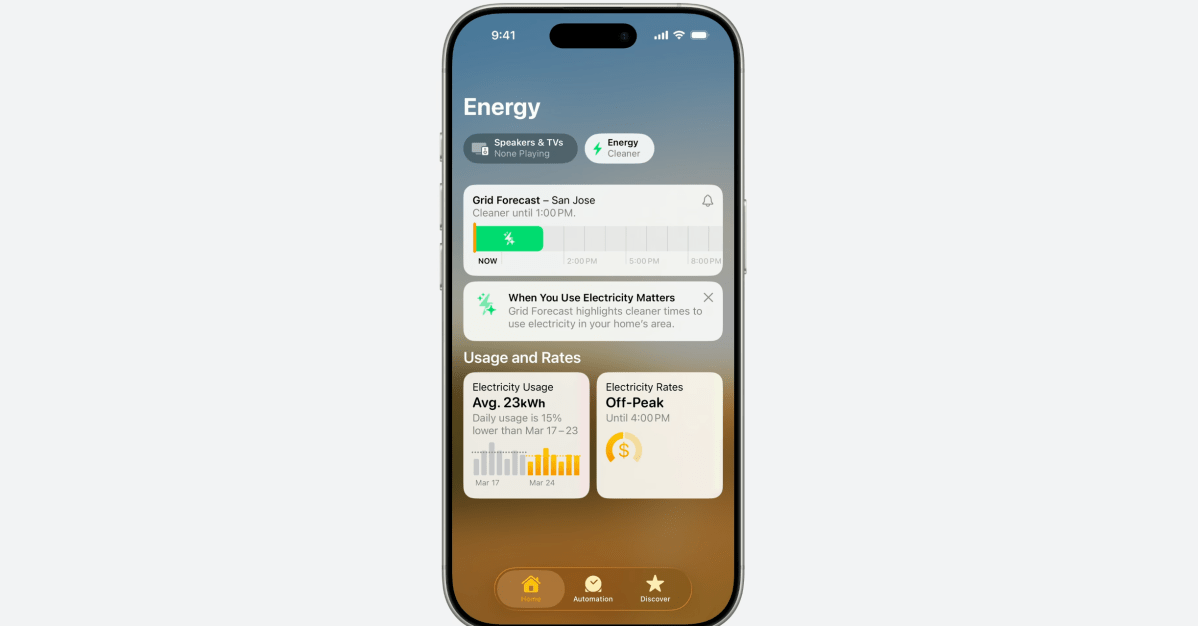

This connects directly to the concept of "edge surveillance," where intelligence increasingly operates at the endpoints of our technological ecosystem rather than in centralized clouds. With AI models becoming smaller and more efficient (as Apple demonstrates with "Apple Intelligence"), processing happens locally on our devices. While this approach offers certain privacy benefits, it also creates new vectors for surveillance and control when these systems are designed without civic interests at heart.

The parallel initiative on civic social media, researched by the Civic IxD research group, offers a potential response. Rather than replicating Big Tech's individualistic, consumer-oriented approach, this project explores social (civic) software designed around collective interests and the public good. The critical insight here is that we must design not just for functionality but for intention, embedding our values directly into the architecture of these systems. At PublicSpaces, TheNextSocials was introduced as an alternative with a different intention.

A recurring call at PublicSpaces was to make sure that the new alternatives we are building do not end up with the same intentions as the platforms they are meant to replace. The challenge ahead requires us to reimagine technology as a civic infrastructure: one that serves communities and empowers collectives rather than groups of individuals. Let’s hope that we don’t need to burn Waymo cars, but can instead start the real “revolution” in how we conceive, design, and govern our technological future.

What inspiring paper to share?

Speaking of the role of energy in AI: this is part of the twin transition approach by the EU. A paper that reflects on this: On the environmental fragilities of digital solutionism. Articulating ‘digital’ and ‘green’ in the EU’s ‘twin transition’.

This article explores how the twin transition is constructed within EU policy discourse, examining how these two initially separate transitions are brought together. This integration, however, is asymmetric: the logic of digital solutionism increasingly shapes what qualifies as an environmental problem, thereby digitally framing sustainability challenges.

Horn, C., & Felt, U. (2025). On the environmental fragilities of digital solutionism. Articulating ‘digital’ and ‘green’ in the EU’s ‘twin transition.’ Journal of Environmental Policy & Planning, 1–19. https://doi.org/10.1080/1523908X.2025.2515225

What are the plans for the coming week?

In the aftermath of some events, admin and cleaning are necessary. The design charrette planning is the main thing. And some nice conversations are planned. I am also looking forward to a twice-postponed expert session on Civic Urban AI, and a half-day visit to “Design for Human Autonomy.” Friday is the day of the Gemeenschapseconomie.

I cannot attend Sensemakers on swarm robotics (too bad), nor a different type of event, the introduction of Bio/Bot at Mediamatic. I will not be near it either, but if you are, Art Basel has a post-digital media fair.

On Saturday, you can experience the Ring in Amsterdam in a different way, or join a UX camp.

See you next week!

References with the notions

Human-AI partnerships

Much ado about the Sam Altman blog post predicting a gentle singularity. It is near, and so is intelligence too cheap to meter. We only need to fix the alignment.

Reasoning quickly becomes too cheap…

I am still a fan of the Arc browser, and I'm still sad it will softly die out. I need to dive deeper into its replacement, the new Dia browser, which is shaped around AI. It seems to open a kind of backchannel to how you watch and use the sites you visit.

In the category of not-so-surprising news. We find a way to deal with the new reality.

A recurring theme: we need our boredom to remain engaged.

Having AI summaries of your Google search results is a first step towards conversations. Oh that is just like Chat…

The rise of the reasoning machines. Or not, if we follow Apple. Multiple opinions.

Multi-agent research at work.

Robotic performances

The safety of humanoids.

New insights for autonomous driving.

Immersive connectedness

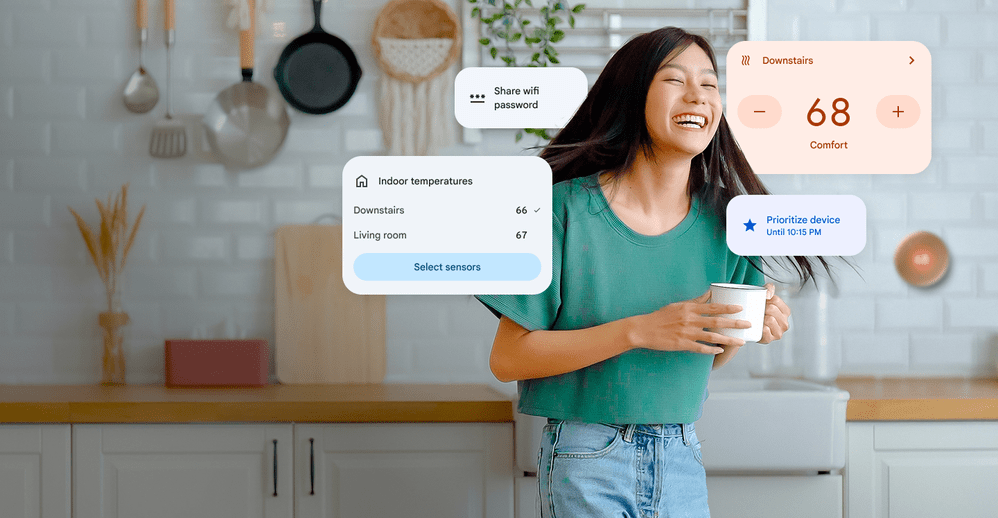

IoT and smart home are not dead yet. New features are added to Google’s Home app. Apple also did some tweaks.

We need cameras that both observe and shape reality.

Immersive AI, in toys.

Tech societies

Meta is buying Scale AI, which raises a lot of questions about how Scale AI's clients (e.g., Meta's competitors) will react.

Matt on video call rituals in cafes makes me wonder whether something like ‘dark cafes’ (like dark stores and dark restaurants) will happen sometime.

You probably know this from your own experience and from people around you: the use of the web is changing with GenAI tools.

https://www.washingtonpost.com/technology/2025/06/11/tollbit-ai-bot-retrieval/

The role of media and sensational headlines in raising the temperature around the impact of AI on jobs. Ed Zitron in a long piece.

Next to the claim on AGI timelines, Sam Altman also makes a claim in his piece about the energy use of genAI. It is challenged by others.

The new battle is more about power in the energy sense than about language model power.

If you are into the law side of AI companions, this might be a podcast for you.

It is not getting better.

And another long read for the list.