Embodying physical AI simulations

What is the impact of creating a contained environment of physical AI for future realities? Read more in the triggered thoughts. And much more.

Hi all!

Thanks for landing here and reading my weekly newsletter. If you're new here, you can find a more extensive bio on targetisnew.com. This newsletter is my personal weekly reflection on the news of the past week, with a lens of understanding the unpredictable futures of human-AI co-performances in a context of full immersive connectedness and the impact on society, organizations, and design. Don’t hesitate to reach out if you want to know more, or something more specific.

What happened last week?

At the moment you read this, we (Andrea and I) are finalizing the ThingsCon report RIOT2025 so that it can be printed in time for this coming Friday, when we will launch it at the Salon and unconference (feel invited to join). This always takes a little more time than planned. That’s why this newsletter is a bit shorter; the timebox is a bit tighter than usual.

Additionally, I prepared a short pitch for next Thursday on Civic Protocol Economies (see below), while refining the outlines of the design charrette.

What did I notice last week?

With Ascension Day (and Memorial Day in the US), and this being the week after all the big tech players announced their latest AI plans and updates, the news was a bit quieter, in my opinion. Nevertheless, there were some interesting notions. I will not summarize them here due to the mentioned timebox; please scroll down.

What triggered my thoughts?

Mary Meeker has published a new report, this time focusing on the impact of AI and the different speed of AI compared to other technologies. The AI Daily Brief podcast makes a case about the problems of trying to catch trends in AI when it is all moving so fast. Without delving into the differences between trend watching, future forecasting, and understanding hyperbolic trends, such as those enabled by AI, I was prompted by one element that was discussed.

Understanding AI's trajectory requires looking beyond current signals to deeper structural shifts. The most significant isn't happening in chatbots or productivity tools—it's in how AI will integrate into physical reality.

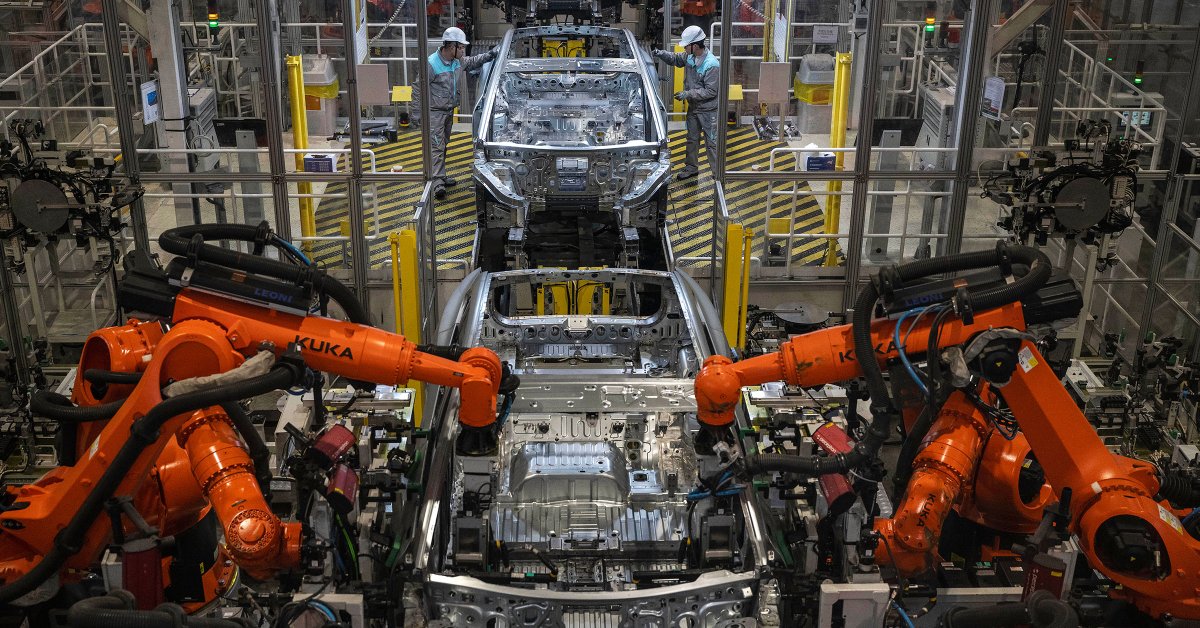

One thing that triggered me in the Mary Meeker report is the comparison of the US and China regarding the adoption of AI in industrial robots. China's adoption rate is several times higher than that of the US. This relates to the role of manufacturing in shaping culture. As Tim Cook has explained, it is not easy to move iPhone production to the US. It is not only a cost issue, but also a difference in capabilities: industrial engineering is much more embedded in Chinese manufacturing culture.

This suggests that physical AI adoption won't be determined by technology availability alone, but by industrial readiness. China's manufacturing ecosystem has become a natural laboratory for physical AI development.

What would this mean for the broader development of physical AI? Will the learnings from applying AI in the contained environment of industrial production become the breeding ground for physical AI in society? Or can the same be achieved by creating a virtual simulation model, as Nvidia does for robots?

The role of physical AI in our experience of everyday reality is a topic that I have addressed here before, and it is also part of the RIOT report article. In this shorter newsletter, I formulate it as a question to address further in the coming editions.

What are the plans for the coming week?

Besides the unconference and Salon on Friday, I will attend the closing event of the Transition Scapes project today. And on Thursday I will present the Civic Protocol Economies project at the Amsterdam InChange knowledge event. I will not attend What Design Can Do for obvious reasons. Sensemakers has a DIY edition.

Oh, and I will check WWDC, of course, next Monday. Curious about the latest delays in AI, new immersive touches in the UI of the OS, and hopefully some surprises in leveraging embeddedness. We’ll see…

Overview of the notions

Human-AI partnerships

Hollywood is embracing AI co-production.

AI as a second opinion, an AI listening to your doctor's appointment.

A new DeepSeek model, R1-0528, is challenging o3 and Gemini 2.5 Pro. And the blurriness of model names.

As soon as vibe coding becomes more popular and systemic, security becomes extra important.

The changing face of AI to us, human users.

AI first should mean humans first, Tim O’Reilly thinks.

Use case.

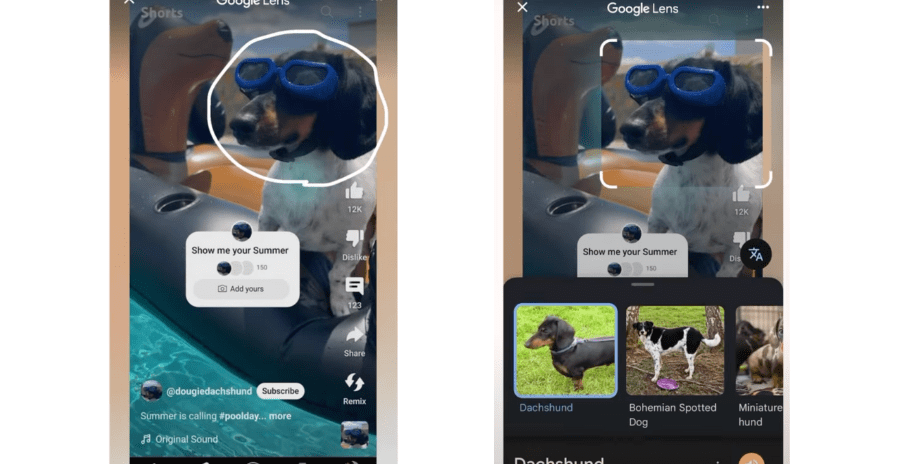

Combine with that device?

Robotic performances

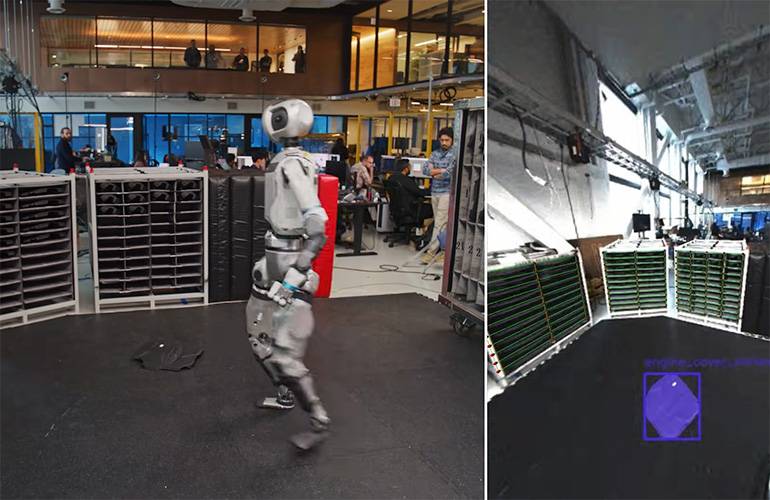

Literally a robot performance happening here.

What is the role of synthetic comfort?

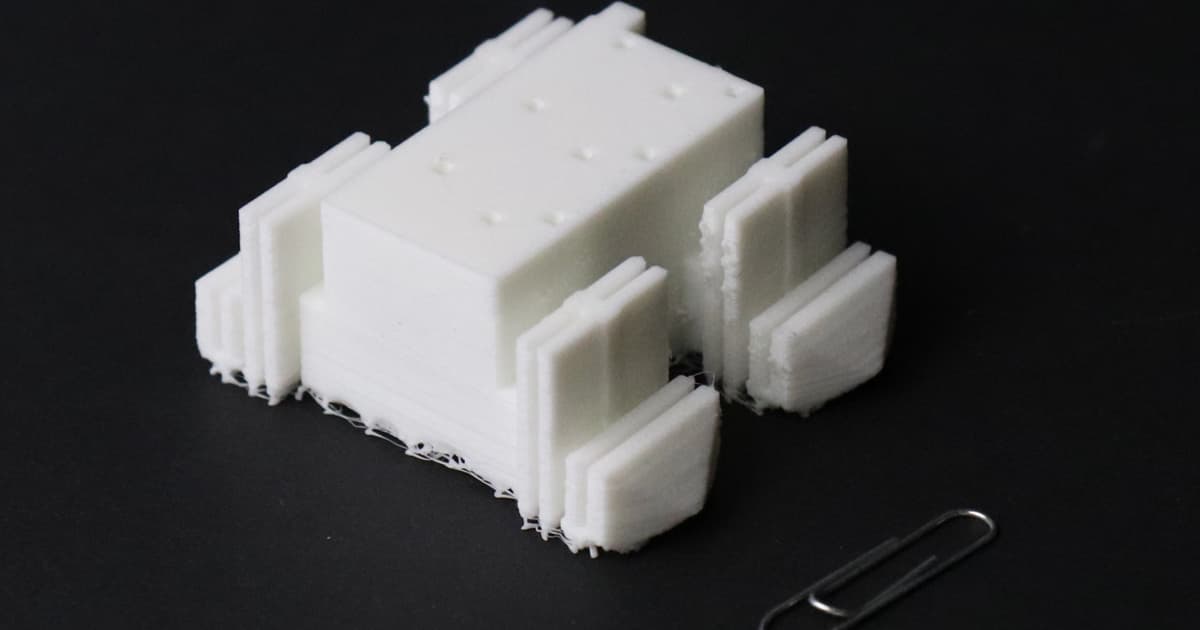

3D printed robots

Adapting robots.

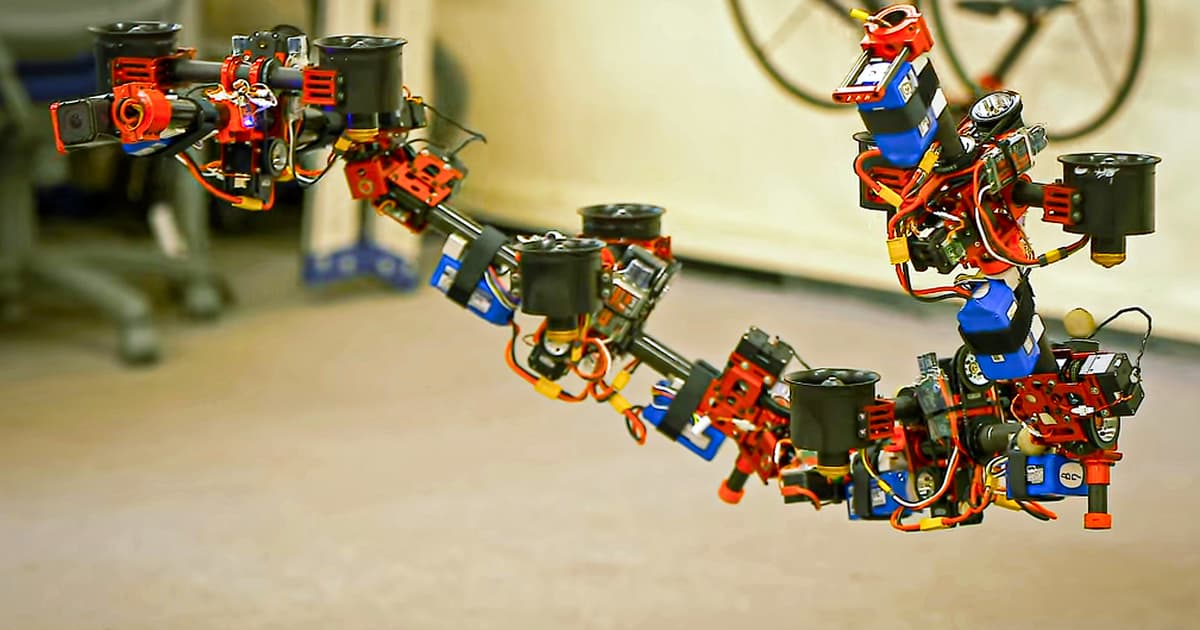

Survival of the fittest.

New creatures emerge.

Immersive connectedness

The OpenAI and Ive collaboration was still being discussed last week.

The Everything App, US edition.

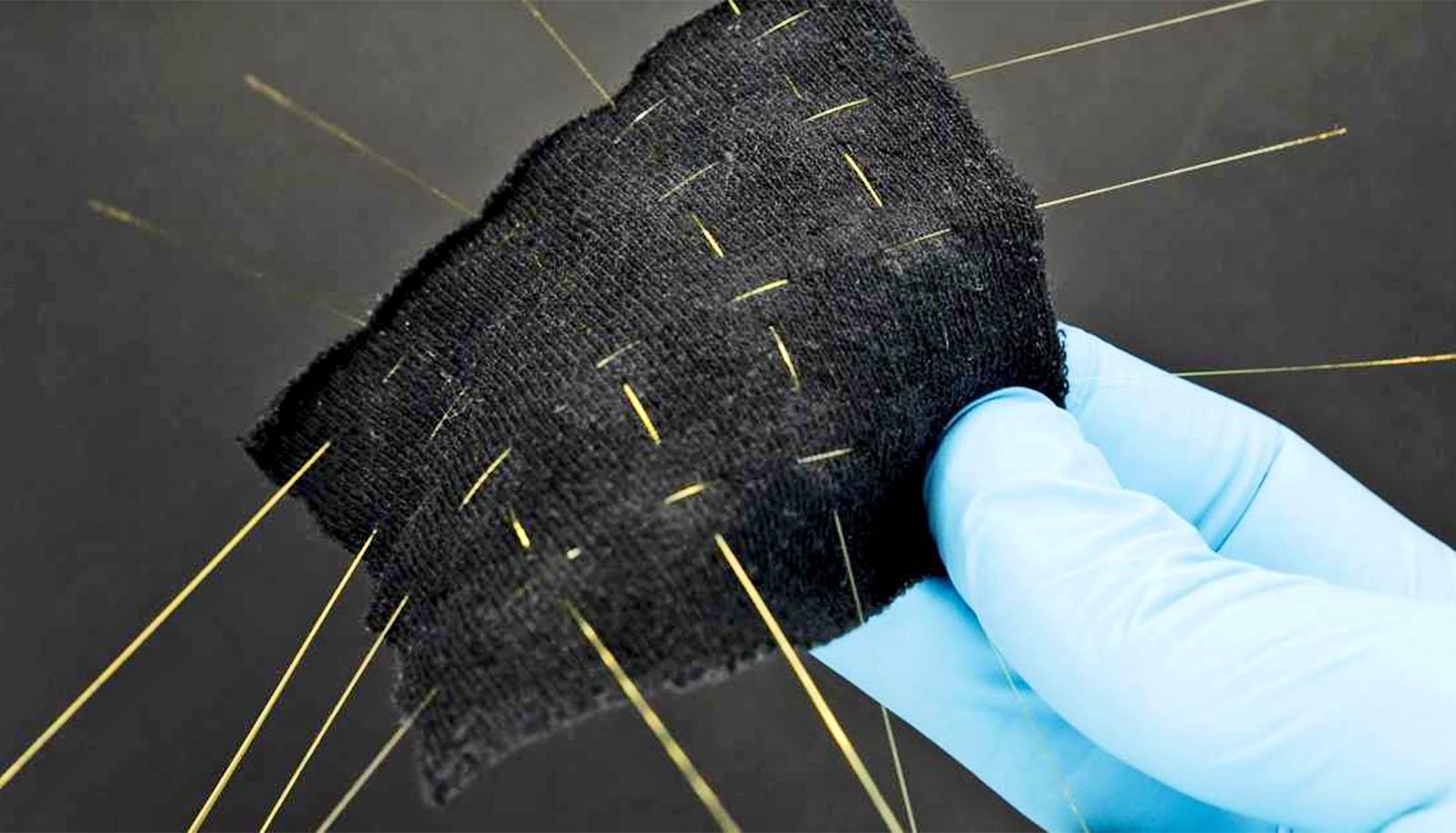

Fabrics with capabilities.

E-tattoos. Will it be a thing?

Inverse immersiveness.

Things you need.

We are sheep.

Tech societies

How China’s EV tech is boosting its tech rise.

How to become a player in AI. With money.

Innovation is not resulting in bots; it is initiated by them.

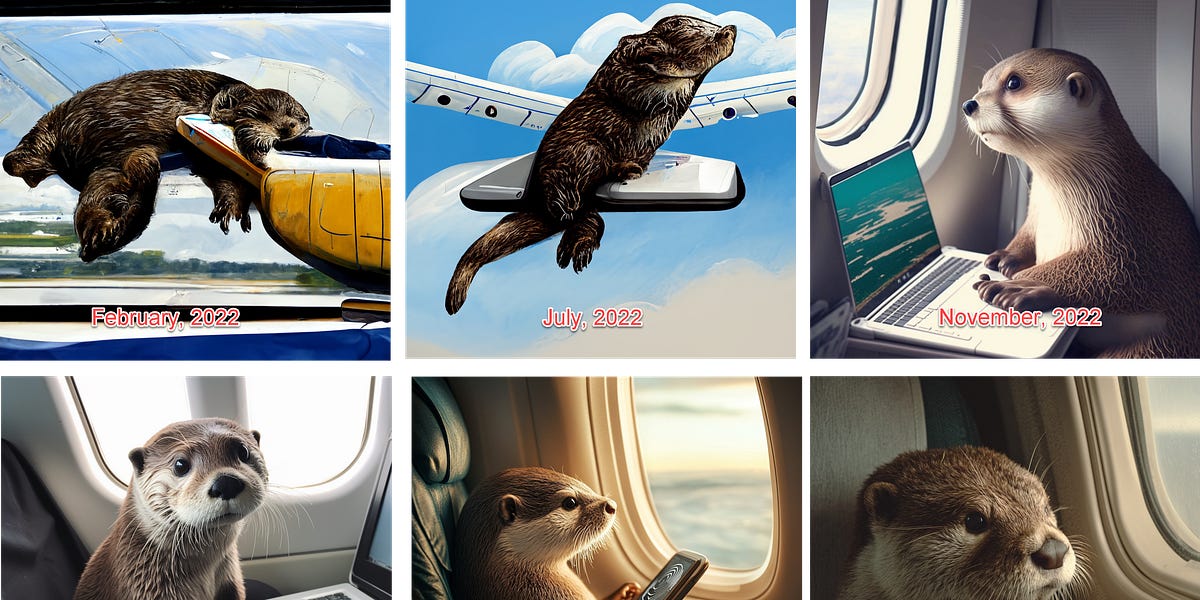

Ethan Mollick has a nice benchmark for AI maturity and it involves otters.

The cult of consent, or the habit of consenting while passing through websites, might be harmful when the internet as an entity becomes more intense and personal.

In the end it is all about building empires. Again.

Energy efficiency and AI. On time?

The hype is real, and comparable for sure.

Zoom out. The state of GenAI

AI monopolies

Ethical AI principles overview.

Hope it will work out well.