Negotiating mutual agency in agentic to agentic realities

A shorter newsletter this week, looking back at the ThingsCon Salon and thinking about the role of human agency in agentic-to-agentic realities.

Hi all! This week's newsletter goes out a day later than usual because of the ThingsCon Salon with the exhibition “Generative Things” on Monday and the Speculative Design workshop yesterday. The exhibition setup and workshop preparation consumed most of my time, so I haven't been able to write my usual detailed synthesis of the notions from the news. Instead, I'll share some preliminary thoughts I noted over the weekend and a brief overview of recent news.

What did happen last week?

Let me share some first thoughts from the Monday workshop, linked to the second iteration of the exhibition "Generative Things" following our December conference. Monday's session focused on examining the exhibited objects in a city context, with the exhibition items organized into six categories. We investigated how these objects might foster interactions between citizens and their urban environments. One key insight that emerged was that generative interactions create an "in-between layer", a synthetic space between reality and a more dreamlike or subconscious realm, and that this layer could play a role in creating new interactions between people who are submerged in their own bubbles. This concept became the central focus of Tuesday's speculative design workshop, where we asked: How might we use this in-between layer to address how people retreat into their personal "bubbles," disconnecting from shared reality? I will share a synthesis of the outcomes soon, as it will also inspire the next phase of the exhibition, which will be part of the Toekomstdagen in June, at the Hyperlink day in the Waag. Expect more in the coming newsletters.

What triggered my thoughts?

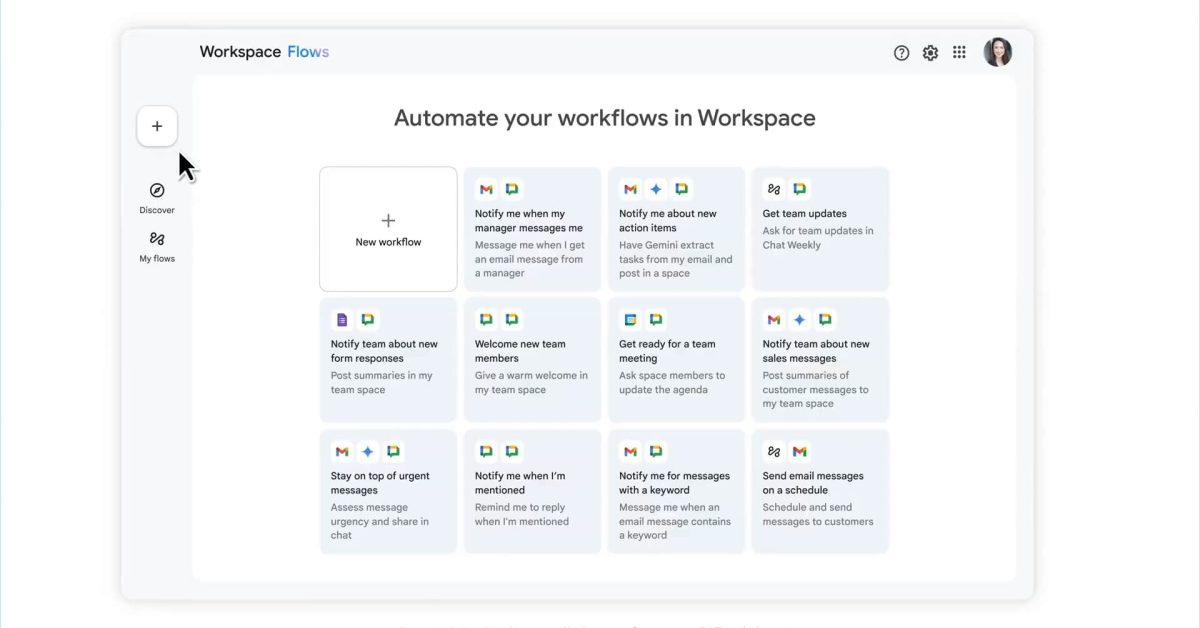

I've been reflecting on Google's recently announced A2A (Agent-to-Agent) protocol (check the thoughts by Nate on MCP, A2A, and the Beginning of the End of Explicit Programming). The idea of AI conversational tools communicating with each other has been something of a running joke in tech circles for years. Numerous experiments and videos demonstrate this interaction, often presenting it as something magical or mysterious. A natural question arises: Why would these agents need to communicate using human language? Couldn't they simply exchange information through more efficient computer protocols? Some humorous depictions show agents "realizing" they're in a conversation with another AI and switching to rapid, incomprehensible exchanges. That is amusing, but it ultimately misses the deeper implications.

The more interesting consideration isn't simply agent-to-agent communication but agentic-to-agentic systems. This connects to my ongoing research on neighborhood robots or "hoodbots" and a concept I recently proposed for a museum installation. I've been exploring the idea of neighborhood-based robots performing various tasks: collecting and delivering items, facilitating group activities such as outdoor yoga classes, cleaning streets, and providing other community services. These robots would initially be controlled by neighborhood residents who assign them specific tasks. The compelling question becomes: What happens if these bots begin to optimize their collective functioning independently, forming a kind of swarm community? This would create a new form of "hoodbot citizenship" within the neighborhood. How would humans relate to this emergent robotic community?

Implications for Human Agency

While this neighborhood scenario differs from A2A protocols, both raise similar questions about our relationships with autonomous systems and communities of agents working together. Initially, these might be simple one-to-one interactions, but they could easily evolve into complex networks. The thinking at the previous startup I worked for, which focused on a service-oriented architecture, offers an additional perspective. There, we developed systems to deconstruct services into contracts of promises and counter-promises. The goal was to create agent networks representing different stakeholders who could negotiate automatically toward mutual understanding. This type of autonomous negotiation will likely be the next step in agent-to-agent protocols: systems that can negotiate outcomes based on our preferences without our direct intervention. This raises the fundamental question: When we delegate thinking to management systems that oversee these agent networks, are we surrendering or enhancing agency? As these technologies evolve, determining whether they augment or diminish human autonomy becomes increasingly critical.
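To make the idea of promises and counter-promises a bit more tangible, here is a minimal sketch of two agents negotiating on behalf of a resident and a hoodbot. It is only an illustration under my own assumptions, not the system we built at that startup and not part of the A2A protocol: the names `Promise`, `StakeholderAgent`, and `negotiate`, the hours-of-street-cleaning scenario, and the simple "concede a fixed step each round" strategy are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Promise:
    """A commitment from one party to another (hypothetical structure)."""
    giver: str
    receiver: str
    description: str


@dataclass
class StakeholderAgent:
    """An agent that negotiates on behalf of one stakeholder."""
    name: str
    opening: float  # value the agent starts with
    limit: float    # worst value the stakeholder still accepts
    step: float     # concession per negotiation round

    def offer(self, round_no: int) -> float:
        """Move from the opening value toward the limit, never past it."""
        if self.opening < self.limit:
            return min(self.limit, self.opening + self.step * round_no)
        return max(self.limit, self.opening - self.step * round_no)


def negotiate(resident: StakeholderAgent, hoodbot: StakeholderAgent,
              max_rounds: int = 20):
    """Return (hours, contract) once the offers meet, or None if they never do."""
    for round_no in range(max_rounds):
        asked = resident.offer(round_no)  # hours the resident asks for
        given = hoodbot.offer(round_no)   # hours the hoodbot is willing to give
        if given >= asked:
            hours = (asked + given) / 2   # settle in the middle of the overlap
            contract = [
                Promise(hoodbot.name, resident.name,
                        f"clean the street {hours} hours per week"),
                # The counter-promise is what the human side gives back.
                Promise(resident.name, hoodbot.name,
                        "provide access to a charging point"),
            ]
            return hours, contract
    return None  # preferences do not overlap: a human has to step in


resident = StakeholderAgent("resident", opening=10.0, limit=4.0, step=1.0)
hoodbot = StakeholderAgent("hoodbot", opening=2.0, limit=6.0, step=1.0)
result = negotiate(resident, hoodbot)
```

In this toy setup, the `limit` values are where human agency lives: the agents move on their own, but only inside the boundaries the stakeholders set. When the limits do not overlap, `negotiate` returns `None` and the decision goes back to the people involved.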

What are the plans for the coming week?

This whole week is filled with interesting events. I am invited to a mini working conference on the impact of virtualisation on the physical living world, where we will, among other things, dive into popping bubbles again.

On Thursday, the yearly Smart & Social Fest from Rotterdam University of Applied Sciences is themed “Dag van de Toekomstmakers”. I'm looking forward to the talks and workshops, and I'm happy that we can host a workshop ourselves (together with Tomasz) on the development of the Labkar Rotterdam, “co-create with technology for the neighborhood”.

See you next week for an Easter edition of the newsletter.

Notions from the news

As mentioned, the list is shorter than usual this time.

NVIDIA’s New AI: Insanely Good!

Apple airlifts 600 tons of iPhones from India 'to beat' Trump tariffs, sources say

Anthropic Education Report: How University Students Use Claude