Slime Mold Computer and the Language Machine

Weeknotes 345 - Software 3.0 and slime molds, what do they have in common? This and other captured news of last week, events, etc.

Weeknotes 345, by Iskander Smit

Hi all!

First of all, let me introduce myself to new readers. This newsletter is my personal weekly digest of last week's news, seen through the lens of topics that I think are worth capturing and reflecting upon: Human-AI collabs, physical AI (things and beyond), and tech and society. I always take one topic to reflect on a bit more, allowing the triggered thoughts to emerge. And I share what I noticed as potentially worthwhile things to do in the coming week.

If you'd like to know more about me, I added a bit more of my background at the end of the newsletter.

Enjoy! Iskander

What happened last week?

In addition to working on the civic protocol economies and preparing for the design charrette in September (see the call for participation), the week was dedicated to some short events. The first was Design for Human Autonomy, from the Design for Values institute of TU Delft (learning about social norms and Barbies from Cass R. Sunstein).

Next came a workshop on Civic Urban AI, organized by the University of Utrecht, primarily focused on the civic and civil servant aspects of organizing AI in our city life, specifically AI governance. How does AI almost enforce a new form of governing system and organisation? Is participation really the answer people are requesting in a highly complex situation? And how do we prevent the wrong conclusions and movements, and keep the citizen in the driving seat? Enough questions for future explorations.

The final event was the “Day of the Civic Economy”. Relevant for the research, of course, and good to see the mix of people who like to organize things themselves, and some larger entities. The city of Amsterdam is aiming for a significant increase in civic-based economies in the city. The day (afternoon) ended with an assembly and a manifesto that was more a tool for engagement than a final document.

Finally, a bit off-topic, but I was happy to be able to join the Op De Ring festival in Amsterdam, partying on the circular highway. Both the busy West and the relaxing East.

What did I notice last week?

Meta is on an acquisition tour and has garnered a lot of attention by offering high-profile AI researchers salaries that are exceptional even by US standards (in the 9-figure range). At the same time, the Meta AI assistant actively covers up mistakes. Apple is also looking at acquisitions, and has some good news on Apple Intelligence. Gemini is first with on-device AI.

Andrej Karpathy got a lot of attention for his Software 3.0 talk. Prompts are a coding language. LLM OS. Nate B. Jones compares it to McKinsey's view on AI.

Common AI product issues, typical design failures of AI interfaces and UX design. Protocols for multi-agent systems. Codecons for the agentic world.

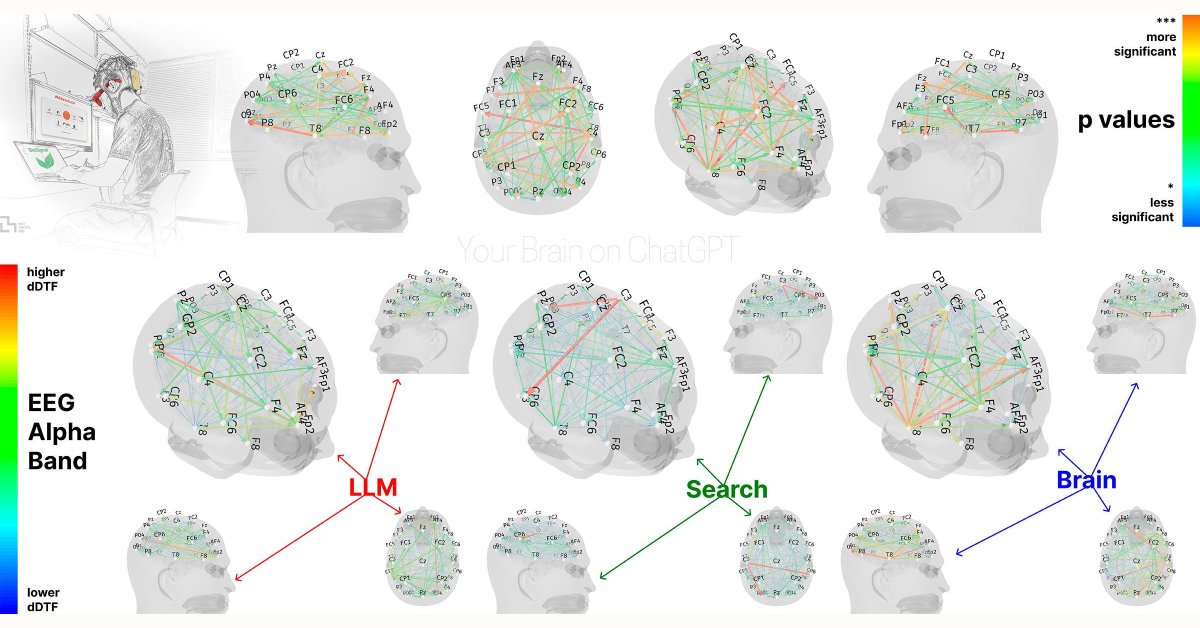

What does it do to our thinking skills? That is the recurring question.

Tesla self-driving taxis were introduced in Austin. In New York, there is a driverless car with a driver. Supermarkets with delivery bots in Austin too. Would the building robot use less nitrogen?

Midjourney has launched a (serious) video-generating product. More Orbs via Reddit, and new smart contract standards.

Meta on the role of new standards. The AGI economy is ramping up. What are the consequences? Is the internet becoming a continuous beta? How will our world become more synthetic? AI and the big five. Is there a scenario where we will have a new resistance, a crusade against AI?

Scroll down for all the links to these news captures.

What triggered my thoughts?

This week, I am returning to a concept I covered before: the embodiment of intelligence. It was triggered by a presentation by Claire L. Evans from a couple of weeks ago, shared by the Sentiers newsletter. The embodiment is linked to the concept of the Slime Mold Computer and the Language Machine. Claire presented on slime molds and embodied intelligence, exploring how these organisms compute solutions through their physical substrate. No central brain, no memory storage, just continuous adaptation through form. The slime mold's network is its intelligence, reshaping itself to solve problems in real time. This principle (intelligence as messy, continuous adaptation) might help us understand what's happening with Large Language Models. While my previous writing explored robotics as a path to embodying AI by literally connecting physical sensors and actuators, there's another form of embodiment emerging: conversational embodiment.

To connect this further: Andrej Karpathy's presentation of his Software 3.0 vision positions plain English as the new programming language, with LLMs as "stochastic simulations of people." These aren't just simulations; they're creating a new substrate for intelligence through dialogue itself. Each conversation shapes the response space, creating ephemeral, context-specific intelligence without permanent updates. Like slime molds computing through their physical form, LLMs might be computing through the conversational substrate.

This connects to edge computing principles: pushing intelligence to the point of interaction. No centralized processing, just adaptive responses emerging from the dialogue itself. The conversation becomes the body, the adaptation mechanism, the intelligence. Vibe coding—programming through conversation rather than precision—also represents that shift. We're not writing instructions; we're growing solutions through dialogue. It's sloppy, unpredictable, alive.

Which brings us to the disconnect. Nate B. Jones compared McKinsey's "Agentic AI Mesh" presentation, another shared vision, with Andrej's. McKinsey is architecting top-down what might need to grow bottom-up, like a slime mold finding food sources. He sees a danger here, not just in the misunderstanding but in trying to impose linear, hierarchical thinking on systems that thrive on messy adaptation.

So is there a parallel? Systems like slime molds embody intelligence through a physical substrate and environmental interaction. LLMs may achieve something similar through conversational substrates and human interaction. Both operate without central control, both adapt without traditional memory, both emerge rather than execute.

Are we witnessing the birth of a new form of embodiment, not through motors and sensors but through the continuous, adaptive dance of conversation? The question isn't whether LLMs are truly intelligent or merely simulating. The question is whether we can recognize intelligence when it doesn't look like us, when it lives in the space between minds rather than within them.

As we shape these systems, they shape us back. The conversation itself becomes the site of intelligence, the place where human quirkiness meets computational possibility. Not a replacement for embodied intelligence, but a new form of it entirely.

What inspiring paper to share?

Curious to read this more in-depth: Untangling the participation buzz in urban place-making: mechanisms and effects

Findings include that designers of place-making interventions often do not explicitly consider their participation goal in selecting participatory mechanisms, and that place-making efforts driven by physical space are most effective in achieving impact.

Slingerland, G., & Brodersen Hansen, N. (2025). Untangling the participation buzz in urban place-making: mechanisms and effects. CoDesign, 1–23. https://doi.org/10.1080/15710882.2025.2514561

What are the plans for the coming week?

This seems like an interesting (online) event: “Is AI Net Art?” with, among others, Eryk Salvaggio and Vladan Joler. Also that day, a new edition of Robodam. One of the largest meetup crowds seems to gather at ProductTank AMS. I need to skip this, though.

References to the notions

Human-AI partnerships

The Cognitive Turn: cognition can emerge from both human and machine systems, emphasizing that meaning is co-created in shared contexts rather than being solely a product of conscious thought.

The Meta AI assistant actively covers up mistakes. The smarter the AI assistants become, the more they seem to use their smartness not for better answers but for more social (mis)behavior. And this is not about the Trump phone.

Common AI product issues, typical design failures of AI interfaces and UX design.

Becoming the manager of your AI interns can cause the same issues with your new ‘employee’ as human managers can have when they grow into that role.

Good news for Apple Intelligence

Hackathon, Makeathon, there is also Codecon.

Protocols for multi-agent systems

Gemini first on-device AI

What does it do to our thinking skills? That is the recurring question.

Robotic performances

The introduction of Tesla self-driving taxis in Austin is mainly a big deal because they use less accurate sensors. Taking a risk.

In New York there is a driverless car with a driver.

I remembered these supermarkets from the SXSWs; next time, you can have your groceries delivered in a modern way.

Would the building robot use less nitrogen?

Immersive connectedness

Midjourney is still my favorite image generator, see the returning pictures. Now they have launched a (serious) video-generating product.

More Orbs

New smart contracts

Tech societies

Meta on the role of new standards.

Apple tries it again with Perplexity

Meta appears to have an eye on Perplexity as well, along with Thinking Machines Lab and Safe Superintelligence, the new companies of two former OpenAI board members…

The AGI economy is ramping up.

What are the consequences? Is the internet becoming a continuous beta?

How will our world become more synthetic?

AI and the big five

Is there a scenario where we will have a new resistance, a crusade against AI?

See you next week!

About me

I'm an independent researcher, designer, curator, and “critical creative”, working on human-AI-things relationships. I am available for short or longer projects, leveraging my expertise as a critical creative director in human-AI services, as a researcher, or a curator of co-design and co-prototyping activities.

Contact me if you are looking for exploratory research into human-AI co-performances, inspirational presentations on cities of things, speculative design masterclasses, research through (co-)design into responsible AI, digital innovation strategies and advice, or civic prototyping workshops on Hoodbot and other impactful intelligent technologies.

My guiding lens is Cities of Things, a research program that started in 2018, when I was a visiting professor at TU Delft's Industrial Design faculty. Since 2022, Cities of Things has become a foundation dedicated to collaborative research and sharing knowledge. In 2014, I co-initiated the Dutch chapter of ThingsCon—a platform that connects designers and makers of responsible technology in IoT, smart cities, and physical AI.

A signature project is our 2-year program (2022-2023) with Rotterdam University and Afrikaander Wijkcooperatie, which has created a civic prototyping platform that helps citizens, policymakers, and urban designers shape living with urban robots: Wijkbot.

Recently, I've been developing programs on intelligent services for vulnerable communities and contributing to the "power of design" agenda of CLICKNL. Since October 2024, I've been co-developing a new research program on Civic Protocol Economies with Martijn de Waal at the Amsterdam University of Applied Sciences.