Understanding intelligence as collaborating components

This week’s notes offer thoughts about AAR and intelligence, collaborative power, synthetic satellites, and more based on the latest news.

Hi all!

Thanks for landing here and reading my weekly newsletter. If you are new here, you can find a more extended bio on targetisnew.com. This newsletter is my personal weekly reflection on the news of the past week (for 10 years soon!), with a lens of understanding the unpredictable futures of human-AI co-performances in a context of full immersive connectedness and the impact on society, organizations, and design. I am now working on a research exploration on Civic Protocol Economies within Civic Interaction Design of HvA, and building on the Cities of Things knowledge hub. Also, I co-curate and coordinate a ThingsCon exhibition and publication. So, rather busy, but still looking for gigs, like facilitating Cities of Things workshops/masterclasses.

What did happen last week?

So I started with Majid's training on AAR (Analogy, Abstraction, Reasoning). It builds on the theory that was the basis for Structural, where I was involved two years ago already. I found it interesting to join for a couple of reasons: to refresh the thinking, which is even more relevant in the current times of shifting from generating to reasoning AI, connecting it to the real world, and of course to meet new people and people I had not seen for a long time. The triggered thoughts below include more impressions and connections with other insights from last week.

For the next phase of the research on Civic Protocol Economies, I conducted some more conversations about possible case studies that can inspire or reference the workshop we will organize in September.

I am not jinxing it, but the explorative research report is now designed and needs only some final text updates to be ready to send out. So, hopefully, next week, there will be a link here, too.

I briefed the students of the Minor Makerslab of AUAS for an exploration of generative things. I also had nice conversations on the potential of the Wijkbot, and while connecting this to the other activities, things started to connect even more. I took the chance to make an overview of the activities of Cities of Things as part of the step-by-step update of the web presence. I am still looking into an opportunity to do the last iteration of the Wijkbot-Robo-perspectives workshop in its full length (say about 3 hours with reflecting, ideating, prototyping, and field testing as parallel activities). Let me know!

Oh, and I learned about the potential of satellites as sensors in a lecture by Dimitri Tokmetzis at Sensemakers. It made me think that there is another layer of synthetic reality, built upon a heavy layer of computation, that we trust to be a super reality, as if it were live. But what if computational cleaning, and even nudging and predicting, become part of this…? The most significant change in our current times is not that we will be augmented with a new level of intelligence exoskeleton (ubiquitous intelligence), but what the computed reality will do with our sense of reality. Our sense of being…

What did I notice last week?

Find the notices from the news below, in human and AI partnerships, robotic performances, immersive connectedness, and tech societies.

- Among others, Perplexity introduced an extension of its mobile app that is eating into the promises of Apple Intelligence.

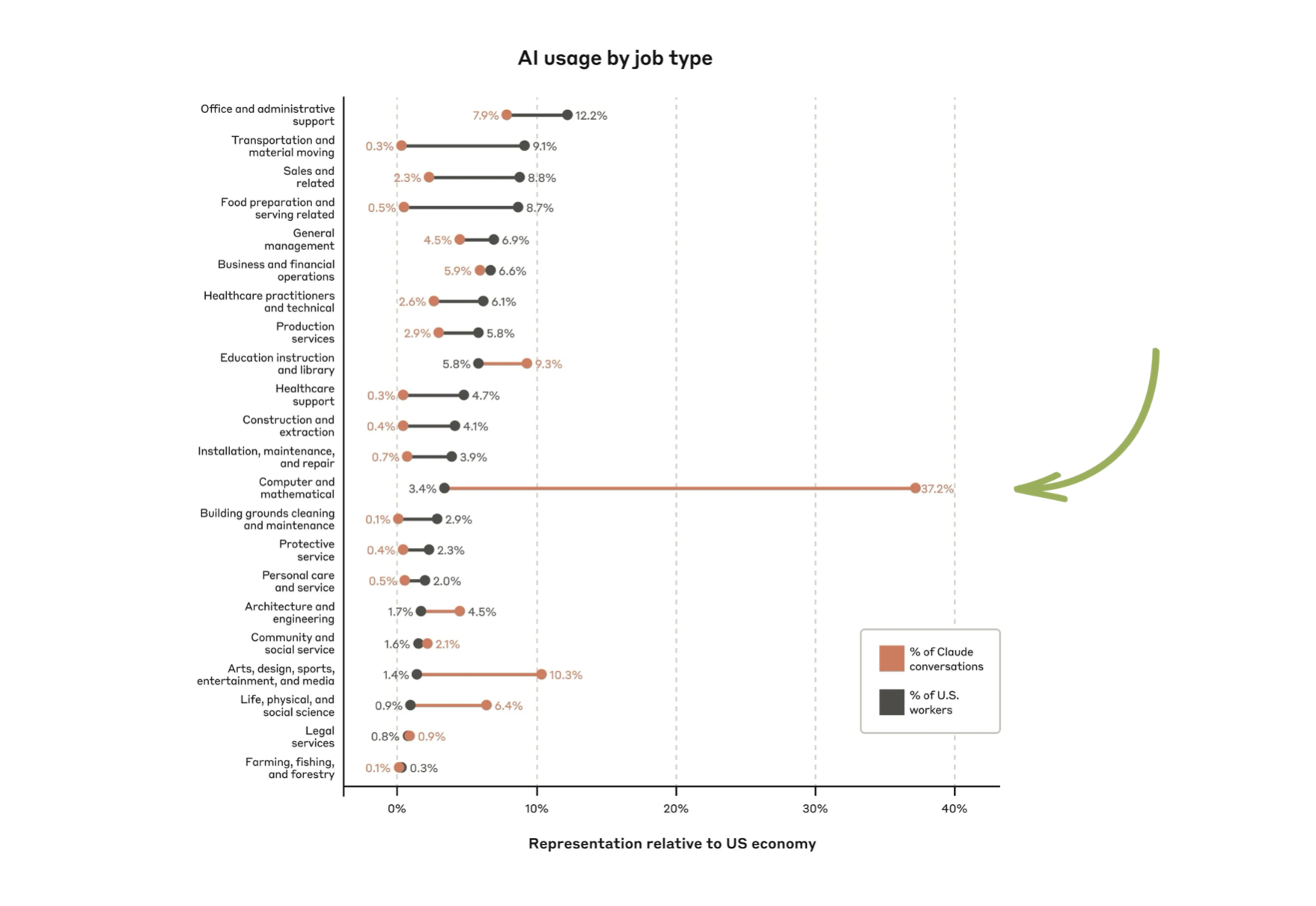

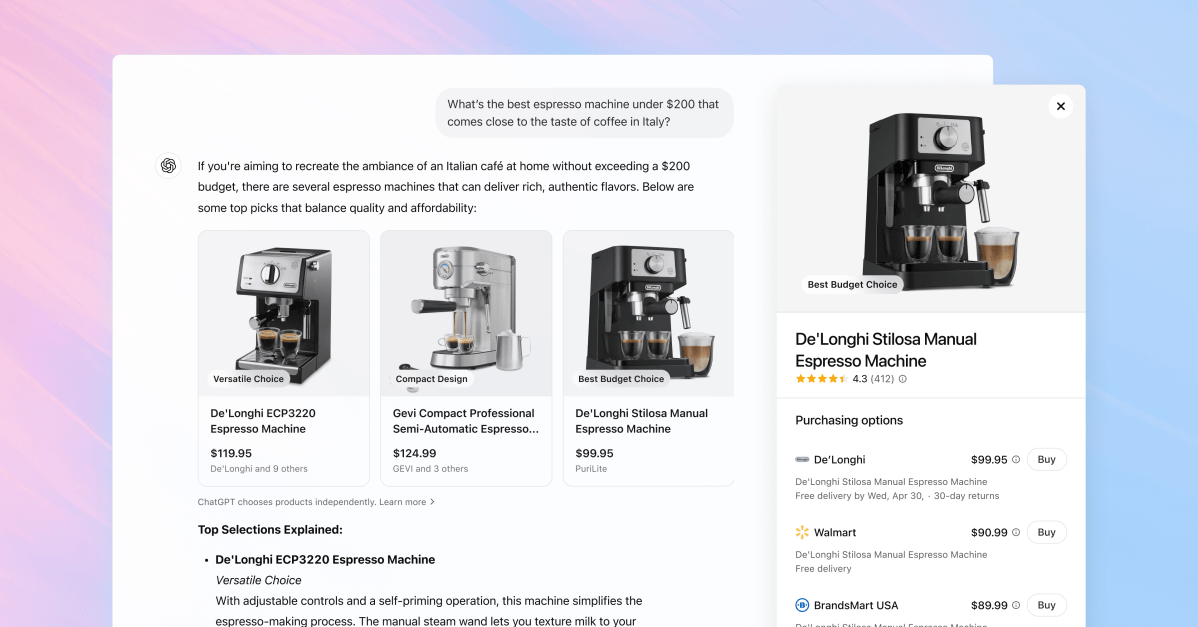

- AI as a search or shopping buddy; it will become the default sooner than you think.

- The state of autonomous driving. And an off-the-shelf exoskeleton for doing construction work.

- Human as luxury. The next thing.

- Continuous talk about vibe coding as a societal phenomenon. Also physical ones.

- The new American panopticon

What triggered my thoughts?

This might be a returning theme, but we should rethink how we conceptualize intelligence in relation to artificial intelligence. This idea has emerged in several recent discussions. Maybe the most compelling was the talk between Dan Shipper (Every) and Kevin Kelly (long-time tech philosopher). This theme also appeared in the long read special of the AI Daily Brief, where an article on agency was covered (Agency is eating the world).

While processing the articles Majid suggested for his training on AAR (Analogy, Abstraction, Reasoning), the entanglement of humans with intelligence was explored. I checked in with NotebookLM to see what these reads delivered in combined insights:

Essentially, while both engage in analogy and abstraction, the sources highlight differences in how these processes function. Human intelligence, described as having analogy at its core, and involving the perception of 'essence', seems to generalize and abstract effectively and dynamically from limited examples. In contrast, current AI models, like LLMs, are characterized more by sophisticated pattern matching and reliance on vast datasets, struggling with true generalization to novel tasks that require 'Type 2' abstraction or program synthesis from minimal examples, unlike humans who effectively combine both 'Type 1' intuition and 'Type 2' discrete reasoning. These distinctions range from philosophical views on analogy to computational challenges in replicating human-like generalization and the pervasive role of these processes in human thought according to perspectives like Hofstadter's.

In his conversation with Shipper, Kelly suggests that intelligence is something like electricity when it was first discovered; we could demonstrate it, and observe it, but we did not understand it. He argues that understanding intelligence requires another thinking framework; Kelly is conceptualizing intelligence as built up from components.

In the Econtalk episode with Dwarkesh Patel, the collaboration between humans is highlighted as an important part of being able to understand things. This raises the question: Can different AI agents reach that same level of stimulating exchanges, or is there something else needed?

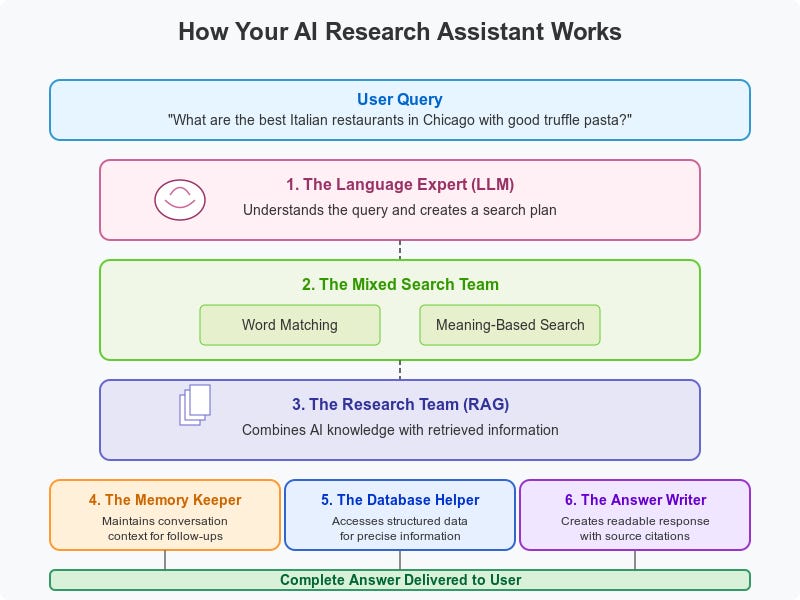

These discussions bring me back to early ideas about the use of AI in the Structural product we were designing in early 2023. The engineered model was discussed continuously, with concepts rethought, which was necessary to create something that could cover all kinds of complexity in service ecosystems where mutual understanding between all stakeholders was the aim. We concluded that rather than a single AI solving this challenge, AIs that act as delegates of the different parties involved in the ecosystem, discussing together, would make more sense.

I still believe this could be an interesting approach. The 'governance' needs to be carefully considered, the system cards need to be primed with a sense of responsibility, and humans still have an important role in bringing in a different form of intelligence (see what I do here…). And ideally we would mix human intelligence with other forms of intelligence too.

AAR might be a good framework to bring this all together. As Francois Chollet proposed at a conference on AGI: it is not about the level of data and capacities of inference, but the way we can unlock the power of thinking as generalists. He argues that true artificial general intelligence (AGI) is not about achieving high skill on specific tasks or benchmarks, but about the capacity for robust generalization and abstract thinking.

Are we reaching a point of AI saturation? How much intelligence can we handle? Nate is wondering.

What inspiring paper to share?

Designing for collectivity is key, as I talked about in last week’s newsletter. Kars is building foundational research to explore what is needed for “people’s compute”, where design for collectives is part of the three critical dimensions for human autonomy in AI systems. Very thoughtful work.

This paper argues that protecting human autonomy in AI systems requires moving beyond application-level contestability to address three critical dimensions: (1) shifting focus from applications to infrastructures that shape technological possibilities; (2) designing for collectives rather than individuals to foster democratic governance; and (3) adopting realist rather than idealist approaches to address actual power relations.

Alfrink, K. (2025, April 11). People's Compute: Design and the Politics of AI Infrastructures. https://doi.org/10.31219/osf.io/uaewn_v2

What are the plans for the coming week?

I already mentioned the training, the work on ThingsCon activities, building on the Cities of Things knowledge hub, and civic protocol economies. I will also attend, at least for one day, the AIxDesign festival in Amsterdam. There is also a new General Seminar on “Science Fiction is Strategy” this Wednesday. You can also dive into (wander through) all the new CHI research presented in Japan this week.

References with the notions

Human-AI partnerships

The evolution of AI products. Which is something else than the evolution of the role of AI.

Anthropic is aiming at AI model welfare.

AI as native search. And as a shopping buddy.

Perplexity's new mobile voice assistant was announced with some references to Apple Intelligence, so it seems. Is it teasing or pitching? Or maybe hinting? In the end, Apple Intelligence could turn out to be best at embedding and integrating AI experiences.

Designing Perplexity. Learnings and choices.

Edward Zitron is sick and tired of the hype about AI's impact, especially on company valuations.

Are the models living up to their experiences?

Robotic performances

Robots are part of China's resilient strategy for tariffs.

I am not sure if eight million is a lot for deliveries.

It is a known phenomenon; we don’t expect the same rates of accidents with autonomous cars.

The development of autonomous cab services continues.

Intriguing creatures.

Exoskeletons as a simple tool.

Robotic learning curves and context windows for new learning will become an important factor.

Can you afford a human waitress? New luxury might appear.

Immersive connectedness

One of the important topics of the Trustmark created some years ago (a project of ThingsCon with Mozilla) was the risk of bricked products that depend on the connective aspect. You would not expect this from Google.

Tech societies

Are there two OpenAIs? One for the development of technology and one for products. But are these really separated?

The periodic table of Machine Learning.

It is telling how Microsoft is framing its ideas about the relationship with AI. It could be a team effort.

Maybe a bit disappointing to read this from Dan Shipper, maker of some great publications and tools for collaborating with AI. ‘Just’ leading to better personalization; it would be more interesting if certain AI could pop bubbles.

This new Nothing phone is modular and also stimulates creating your own 3D-printed accessories. Is this a form of physical vibe coding? Not the same as 3D-printed shoes, imho.

VibeCore and all the other forms of Vibe can be confusing if you think of vibe coding, which is not about following a vibe but about creating your own.

The framing of an American Panopticon triggers me. Is collecting all the data of US citizens, and being very vocal about it, a different, 21st-century version of the panopticon? You need to think that data is collected about you to prevent you from doing things that are not appreciated…