Weeknote 235; Somatic agents in AI space

Hi all!

A shorter week after Easter, and on Friday I combined a visit to STRP with the ThingsCon Salon we organised in Eindhoven. For the third time, we teamed up with the Eindhoven community; it has been a while, since the last in-person Salon was before Covid. This time we planned it as a side programme of STRP, which made it easy for me to visit the STRP activities beforehand. I liked this edition, with several nice art installations on the Art of Listening.

The Salon was also very inspiring, with three good speakers offering a mix of insights on listening profiles, sensing homes and bringing data to life. The theme, Listening Things, is about our personal space, which is becoming more and more a sensing environment with the silent IoT revolution. With the new Matter standard, it will be even easier to add consciously connected devices to our homes. As Lorna mentioned, back in 2010, when we were both present at the launch of The Council Internet of Things, initiated by Rob van Kranenburg (read about the impact), we were in a session on the impact of the smart home on the sense of personal space (organised by Alexandra Deschamps-Sonsino). Elif showed in her research how listening is part of acoustic biotopes. Making sensor data tangible and giving it meaning is the work of CleverFranke, and Bob showed how this could work in a smart home context. Our relationship with the tech surrounding us is changing, something that can be clearly experienced in the years of student projects with the IoT Sandbox, a scale model of a home that triggers new ideas for interacting through the physicality of the data. Home IoT is a growing system, Joep showed us.

Our relationship with our more or less connected home will change even more if that relationship becomes more intelligent. See all the news again this week; agency is becoming the topic of the coming period…

Peet took some nice pictures, which you can find here.

Events for the coming week

- For London readers, this evening, IOT London.

- Smart & Social Fest, this Friday in Rotterdam. And I will be co-hosting a workshop at 13:00 in EMI op Zuid on Wijkbot:

- If you happen to be around Milan this Thursday, this is a cool panel imho.

- This Wednesday, The Hmm has an evening on… not a clear theme, but some interesting speakers.

- Sensemakers New Tech Talks, also on Wednesday.

- Or if you are more into Metaverse developments, especially in the public space, there is an event on that, also on Wednesday in Amsterdam.

- An AI hackathon by some inspiring writers about AI - Every and Ben’s Bites - is starting today.

- For Dutch speakers: Techdenkers with Maxim Februari, Thursday 20 April in Amsterdam (de Balie).

Notions of last week’s news

The more we become partners with the new AI tools, the more new relations will be built. This week a paper on generative agents got a lot of attention. I added it to the ‘paper for the week’ part below; besides being an interesting way of doing research, it also offers an insight into a possible new form of relation in AI. This is not new to researchers and people looking into human-tech agent relations, but it seems to trigger thinking among a broader audience.

Another example is AgentGPT, an experimental tool in this area.

Gary Marcus addresses another aspect: the anthropomorphising of AI, treating AI like people.

The appearance of robots everywhere is, of course, influencing the way we communicate with them. “Effective integration of robots into human life requires balancing responsibility between people and robots, and designating clear roles for both in different environments.”

The robot as an intelligent creature is just getting started. I liked how Stacey Higginbotham, in her latest podcast, brought up the combination of TinyML and ChatGPT to bring more ‘life’ into IoT devices. Microsoft shared research that converts natural language instructions into executable robot actions.

Applications might be seen first in an industrial context, where robots are more common already, of course.

The role of embodiment in intelligence is linked to the current focus on AI forms. This makes the link with robotics even more interesting, according to the New York Times.

Soft robotics might be necessary for this.

And creating team-operating robots could be needed for more complex work.

Dan Shipper always has interesting explorations (and makes a great tool); this one might be a bit controversial:

“We put a premium on explanations because historically we’ve felt that unless we know “why” something happens, we can’t reliably predict it or change it. (…)

“That’s not true anymore. AI works like human intuition, but it has more of the properties of explanations. In other words, it is more inspectable, debuggable, and transferrable.”

I am not sure AI resembles intuition; the transformer models, especially, seem more like a forced or planned kind of intuition.

“None of this means we should leave scientific explanations behind. It just means we don’t have to wait for them.”

In other genAI news:

AWS is entering the Generative AI space from a storage and processing perspective.

Elon Musk seems to be planning a generative Twitter.

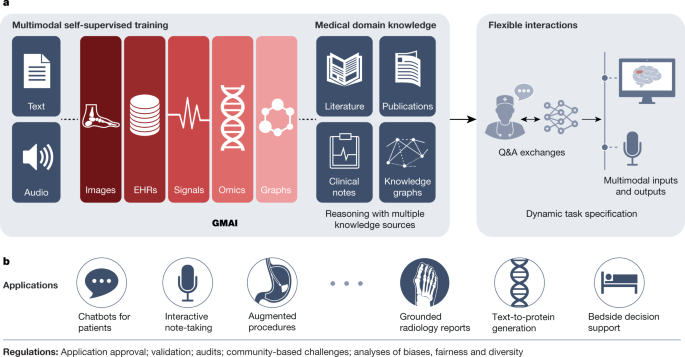

Not generative but generalist medical AI

“It starts with a familiar intro, unmistakably the Weeknd’s 2017 hit “Die for You.” But as the first verse of the song begins, a different vocalist is heard: Michael Jackson. Or, at least, a mechanical simulation of the late pop star’s voice.”

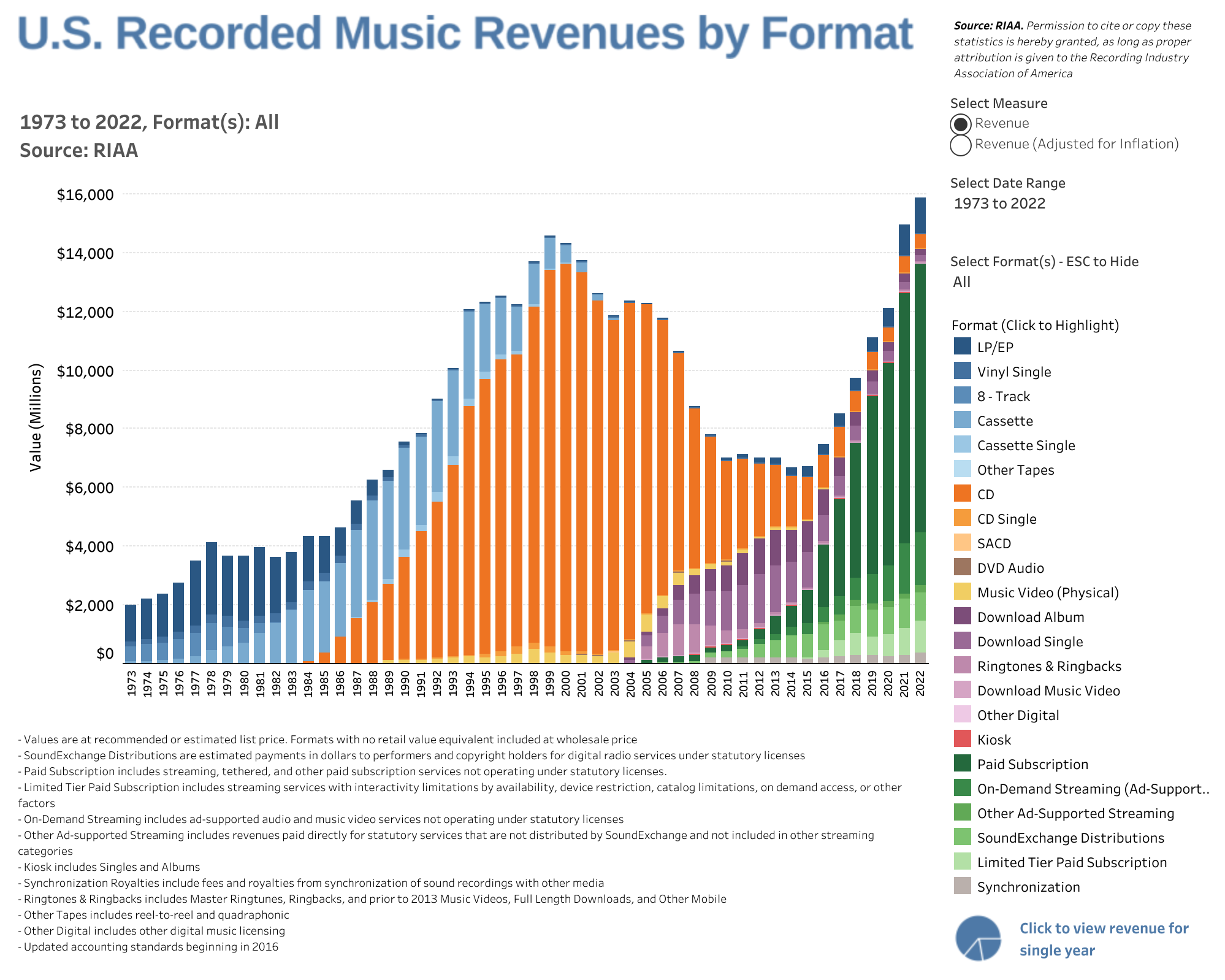

AI-generated music as a new thing?

Google is creating a song creator

Curious how Coachella will look next year 🙂

GPT-5 is not being rushed, Sam Altman of OpenAI assures us.

And did you hear about crypto? Here is a report on the current state.

The protocol economy is on another level; the shift to cloudless computing is based on protocols instead of centralised services.

And to close on a geopolitical note. “Cold War 2 won't be decided by the opening moves.”

Ok, let’s end with another type of AI prompting human behaviour: AI-generated skateparks…

Paper for the week

Generative Agents: Interactive Simulacra of Human Behavior

Research through game design is a really interesting way of simulating the experience of generative AI. The paper received much attention as a signpost for a new form of AI: agents, just like the AgentGPT mentioned earlier.

In this paper, we introduce generative agents—computational software agents that simulate believable human behavior. Generative agents wake up, cook breakfast, and head to work; artists paint, while authors write; they form opinions, notice each other, and initiate conversations; they remember and reflect on days past as they plan the next day. To enable generative agents, we describe an architecture that extends a large language model to store a complete record of the agent’s experiences using natural language, synthesize those memories over time into higher-level reflections, and retrieve them dynamically to plan behavior.

Park, J. S., O'Brien, J. C., Cai, C. J., Morris, M. R., Liang, P., & Bernstein, M. S. (2023). Generative Agents: Interactive Simulacra of Human Behavior.

https://arxiv.org/pdf/2304.03442.pdf
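
To make the architecture from the abstract a bit more concrete, here is a minimal sketch of the three steps it names: storing experiences as natural language, synthesising them into reflections, and retrieving them to plan. Everything in it is an assumption for illustration and not the paper's code: the names `Memory`, `MemoryStream`, `store`, `retrieve` and `reflect` are hypothetical, the importance scores are set by hand, simple word overlap stands in for a proper relevance measure, and the reflection step just concatenates text where a language model call would go.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    text: str          # the experience, stored as natural language
    created_at: int    # simulation step at which it was stored
    importance: float  # hypothetical 0..1 score, set by hand here


@dataclass
class MemoryStream:
    memories: list = field(default_factory=list)

    def store(self, text, step, importance):
        """Append one experience to the record."""
        self.memories.append(Memory(text, step, importance))

    def retrieve(self, query, step, k=2, decay=0.9):
        """Return the k memories with the highest combined score.

        Assumed scoring: recency + importance + relevance, where relevance
        is the share of query words that also occur in the memory text.
        """
        query_words = set(query.lower().split())

        def score(memory):
            recency = decay ** (step - memory.created_at)
            words = set(memory.text.lower().split())
            relevance = len(query_words & words) / max(len(query_words), 1)
            return recency + memory.importance + relevance

        return sorted(self.memories, key=score, reverse=True)[:k]

    def reflect(self, step):
        """Store a higher-level summary of the three latest memories.

        Placeholder: a real agent would ask a language model to summarise.
        """
        recent = self.memories[-3:]
        summary = "Reflection: " + "; ".join(m.text for m in recent)
        self.store(summary, step, importance=1.0)
        return summary


stream = MemoryStream()
stream.store("cooked breakfast at home", step=1, importance=0.2)
stream.store("talked with a neighbour about the election", step=2, importance=0.8)
stream.store("painted in the studio", step=3, importance=0.4)

top = stream.retrieve("who did I talk with about the election", step=4, k=1)
print(top[0].text)
print(stream.reflect(step=4))
```

The point of the sketch is only the loop: what the agent does next is planned from a small, scored selection of its own history, and reflections flow back into that same history as new memories.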