Weeknotes 227; Bing with a bang

Weeknotes with interesting news from last week, and a report on sensing ethics...

Hi all!

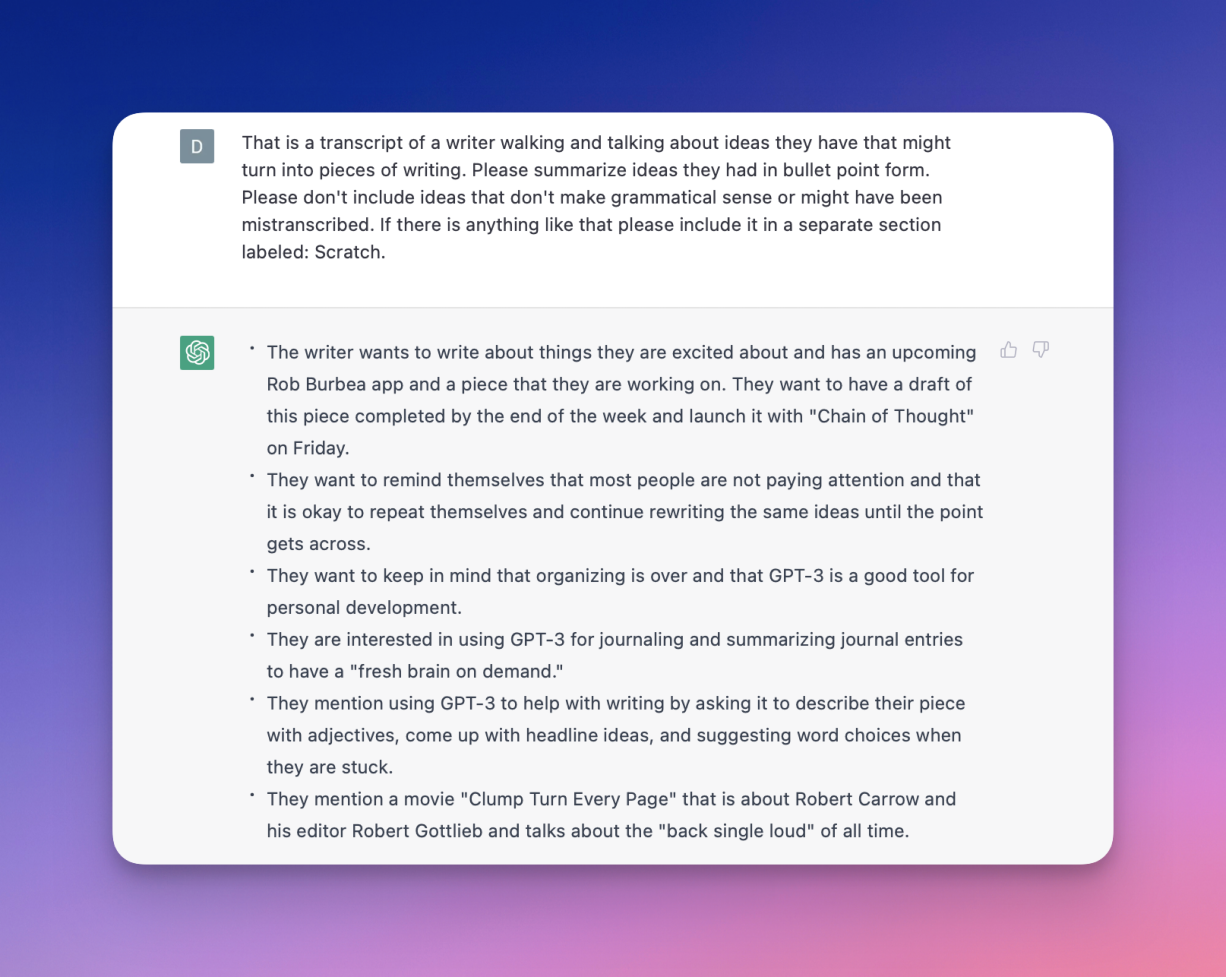

Never a dull week in the era of the AI arms race. The main news was, of course, the problems that surfaced with the new combination of Bing and ChatGPT, called Sydney. The first batch of testers now has access (including Kevin, Ben, Ethan, and Chris), and the reviews are rather good. For now, Microsoft has scaled back test usage. OpenAI also published a clarification on how ChatGPT is meant to behave.

Still, the bot's behaviour and manners turned out to be a problem in conversations with a number of users. This brings back memories of an earlier attempt by Microsoft to create a companion bot, Tay, which is now one of the most frequently used examples in AI presentations. Within 24 hours, Tay turned into an extremely rude trolling bot and was switched off shortly after.

It would be a pity if this were to hold back the development of AI and similar tools. There is so much potential for professional services built on them.

One strategy could be to build in ways to contest that behaviour: contestable AI. Kars Alfrink is doing a whole PhD on this topic, and he gave a short presentation at the two-year anniversary of the Responsible Sensing Lab on Thursday. In the end I could not attend in person, but I watched the recordings. These are some of my impressions.

Peter-Paul Verbeek gave a keynote on democratizing the ethics of smart cities. We are now entering Society 5.0, where we live a digital life and robots are becoming citizens. He wondered whether AI learns the same way humans do, and whether AI has agency of its own or whether that agency is always derived from its relation with humans.

He showed how sensing also shapes the way we see our world. Technology is not just a tool; it also shapes how we relate to the world. As designers of these sensing environments, we need to address this. Smart cities are part of politics. With AI systems, the question is always who has agency: the operator, the control unit, or the drone itself. Citizen ethics, meaning ethics developed by citizens themselves, can be an important concept. It has three stages: (1) technology in context, (2) dialogue, and (3) options for action.

After the keynote, Thijs Turel and Sam Smits looked back on two years of RSL and ahead to the future. An important theme is designing the data collectors in the city for "just enough". Another interesting aspect is how, by "designing" tender requirements, we can set goals for climate, among other things. Alongside many examples, the presentation covered the development of the scan car: what does it mean for the people living in the city? If the chance of being fined becomes 100%, that should be taken into account in the democratic decisions on which the level of fines was originally based.

Kars Alfrink shared his concept of contestable AI: leveraging disagreement to improve systems, validated through enabling civic participation, ensuring democratic embedding, and building capacity for responsibility. For example, rather than discussing whether scan cars or cameras are part of political programs and promises, we should connect them to the values behind them and acknowledge, as Peter-Paul Verbeek argued, that technologies mediate not only the things and services we use but also the political decisions behind them.

This connects nicely to an essay by Maxim Februari published in NRC on Saturday. Democracy is not a product with a fixed outcome; it is the process that counts, and that process should be encouraged. There is much more to say, but it is best to read it yourself.

Only a few potentially interesting events this week:

IoT London on Thursday, on Digital Security by Design; Design Cities for All: Regenerations at Pakhuis de Zwijger on 27 February, this time on design for time. And save the date: the ThingsCon Salon on 14 April.

News updates for this week

Below are interesting articles from last week. Sydney and ChatGPT are, of course, the hot topic here as well.

Paper for this week

“Thanks to rapid progress in artificial intelligence, we have entered an era when technology and philosophy intersect in interesting ways. Sitting squarely at the centre of this intersection are large language models (LLMs). The more adept LLMs become at mimicking human language, the more vulnerable we become to anthropomorphism, to seeing the systems in which they are embedded as more human-like than they really are.

(…) To mitigate this trend, this paper advocates the practice of repeatedly stepping back to remind ourselves of how LLMs, and the systems of which they form a part, actually work.”

From the paper “Talking About Large Language Models”.

Shanahan, M. (2022). Talking About Large Language Models.

See you next week!