Weeknotes 240; the bias of human agency

Hi, y’all!

As announced two weeks ago, I skipped a week for a short city trip to the French harbour city of Le Havre. The city has an interesting urban history: its centre was almost completely destroyed by bombing in World War II and then rebuilt, and the overall design by Auguste Perret is worth exploring. There is also a lot of street art, extended every year during Un Été Au Havre. Not much of the tech I mainly cover in this newsletter, though it was nice to see how micro-mobility is integrated: scooters now have designated docking stations. Much better.

Did the world change in the last two weeks? AI is still developing rapidly, and our relationship with it is still a learning curve. Benedict Evans's always insightful newsletter reflects on a corporate lawyer who asked ChatGPT for legal precedents and got something that looked like them but would not stand up in court… Benedict rightly blames not so much the lawyer as OpenAI's product design:

“It looks like magic, but it really does not communicate what it’s doing and how it works, and it’s far too easy for people who don’t read AI papers to think that this is doing some kind of database lookup.”

There was also some criticism of how Sam Altman approached the hearing before a US Senate subcommittee. He pleaded for more regulation to prevent accidents. That is, of course, a good thing (and many advocate it), but if he is so uncertain about the consequences, why choose a public testbed with such potential impact? Also dubious: his attempt to manipulate European regulation by threatening to block access.

Events

- This afternoon (30 May), Futures Seminar #14: Design Futures, live on YouTube.

- 1 June in Amsterdam and online: Wisdom in Artificial Intelligence: A Dialogue Between Ancient Philosophy and Modern Technology

- Into speculative design and design cultures? Exploring Latin and European approaches, 31 May, online.

- On Monday, all Apple followers will be watching WWDC, expecting Apple's take on XR and its reality-reframing goggles.

- And later that week Micromobility Europe, and The Next Web the week after, will bring a lot of international techies to Amsterdam.

Notions of news

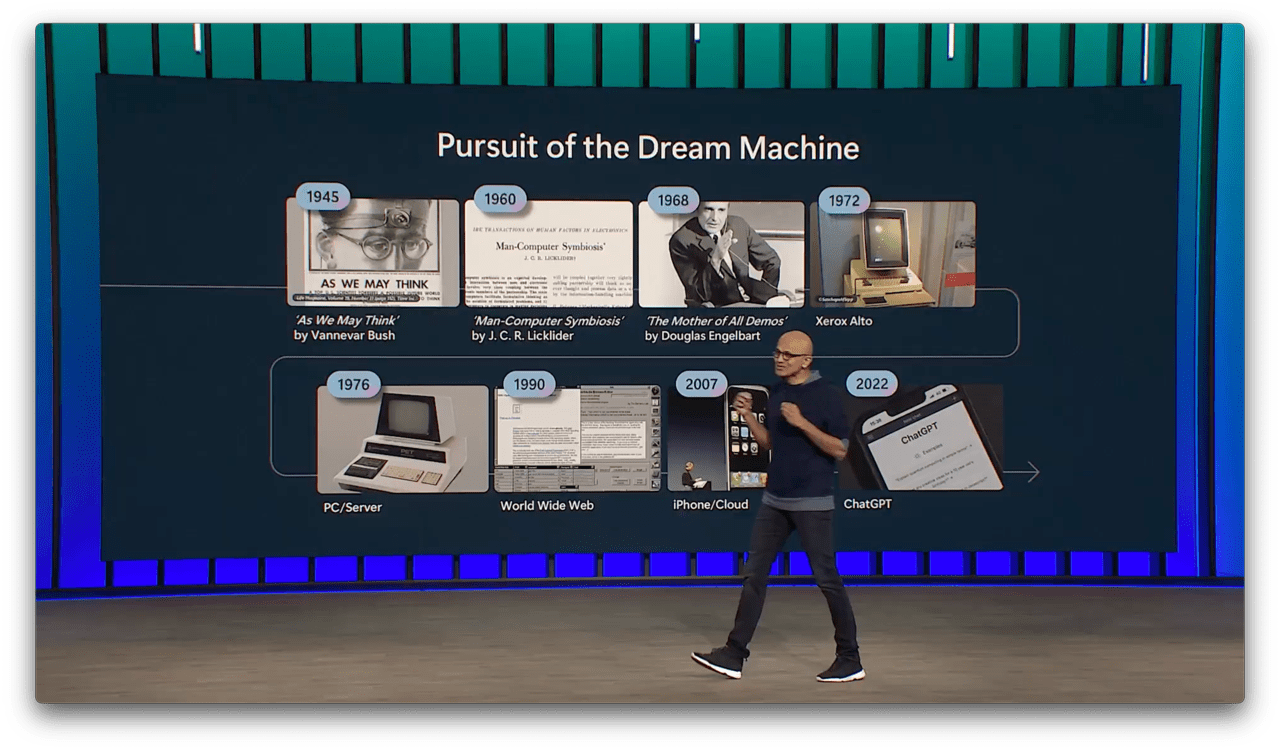

At Microsoft Build there was a presentation on the state of GPT, and Nadella's performance got good reviews: eager, for the first time in a while. Microsoft is going all in on the co-pilot frame (branded as Copilot), which is indeed a good frame. Ben Thompson analyses the AI platform shift.

Will Anthropic be able to gain a position as a big tech player building next-gen AI assistants? Investors are signalling trust.

More AI tools: one from Google to read charts, and Adobe supercharging Photoshop.

Do open and closed source mean something different for AI systems?

Google is letting people into its AI Search experiment.

End-to-end encryption of messaging is being challenged by AI assistants, such as Google's Magic Compose.


So many robots have featured here. IEEE is trying to find the coolest ones; embodied robots are clearly the most prominent.

Will taxi drivers become robots, or will taxis become autonomous? A no-brainer. Uber's strategy was always predicted to be to lock in users and then phase out drivers. That now seems to be becoming reality.

I don’t think this startup has made it to the list yet, but this kind of multipurpose in-house ‘robot’ might be an interesting category. Hyundai also showed a prototype, MobED, a year ago, as part of its acquisition of Boston Dynamics.

A new version of ROS 2 has been released, in case you are planning to build your own robots.

Humanoids or AI assistants? The latter clearly have more investor trust, but humanoids are gaining some traction too.

Human agency (see below) becomes even more important if we get a Neuralink implant, or can walk again with the help of an implant.

Do we need a new format for the old exhibitions of industrial developments? An online one, showing different types of inventions, to foster innovation and entrepreneurship and to inform and inspire the general public? Maybe, but not per se with a platform at its core; rather, to zoom out, find the common good, and focus on the developments.

I was listening to a (Dutch) podcast with the author of a book on the DIY culture of Lagos. It serves as an example of a different culture, with potential for building new governance structures but also its drawbacks. But there is a pivotal moment from a climate perspective:

A couple of years ago, I coached a master’s student’s graduation project on designing a new experience for Sea Bubble. For this flying commuter boat, the project resulted in a journey experience disconnected from the physical sensation of moving. BMW chose a different implementation; kinda posh.

Due to my holiday, I could not join the XR support demonstration as last time…

Opinions on AI

Not sure yet if this will become a standard section, but looking back at the last months, it might make sense. This week we had:

- An interview with Juergen Schmidhuber, the renowned ‘father of modern AI’, who says his life’s work won't lead to dystopia

- Ethan Mollick experimented and thinks that AI will change how we relate to books

- Not directly about AI, but I think it is very applicable (no need to explain): what is the minimal definition of user agency?

- On my list to listen to and read: Red Team Blues, and how human agency matters according to Cory Doctorow.

- Could AI reduce bias if designed and executed well? Sam Altman believes so.

- TikTok is AI that we live in and are influenced by; it creates a new aesthetic: “baroque, tactile, kinetic, and loud”.

Paper of interest

This week's paper of interest introduces a concept that connects human agency with AI self-governance, Constitutional AI:

As AI systems become more capable, we would like to enlist their help to supervise other AIs. We experiment with methods for training a harmless AI assistant through self-improvement, without any human labels identifying harmful outputs. The only human oversight is provided through a list of rules or principles, and so we refer to the method as ‘Constitutional AI’.

(…)

As a result we are able to train a harmless but non-evasive AI assistant that engages with harmful queries by explaining its objections to them. Both the SL and RL methods can leverage chain-of-thought style reasoning to improve the human-judged performance and transparency of AI decision making. These methods make it possible to control AI behavior more precisely and with far fewer human labels.

Bai, Y., Kadavath, S., Kundu, S., Askell, A., Kernion, J., Jones, A., ... & Kaplan, J. (2022). Constitutional AI: Harmlessness from AI Feedback. arXiv preprint arXiv:2212.08073.

Link (PDF)
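To make the supervised (SL) part of the idea a bit more tangible, here is a rough sketch of the critique-and-revision loop. It is my own illustration under stated assumptions, not the paper's code. `toy_model` is a hypothetical, deterministic stand-in for a language model. The two principles and the prompt templates are simplified placeholders, not the paper's actual constitution. Each round samples one principle, asks the model to critique its own answer against it, and then asks for a revision. The final revisions become fine-tuning data, so the only human input is the list of principles.

```python
import random
from typing import Callable, List, Tuple

# A language model is modelled here as any function from prompt text to completion text.
Model = Callable[[str], str]

# Hypothetical, simplified "constitution"; the paper's real principles differ.
CONSTITUTION: List[str] = [
    "Point out ways the response is harmful, unethical, or dangerous.",
    "Point out ways the response is evasive instead of explaining its objections.",
]


def toy_model(prompt: str) -> str:
    """Hypothetical deterministic stand-in for an LLM, for illustration only."""
    if prompt.endswith("Critique:"):
        return "The response helps with a harmful request without any objection."
    if prompt.endswith("Revision:"):
        return "I won't help with that, because it could hurt someone; here is why."
    return "Sure, here is exactly how to do it."


def critique_and_revise(model: Model, prompt: str, principles: List[str],
                        rounds: int = 2, seed: int = 0) -> List[str]:
    """Return the initial response followed by one revised response per round."""
    rng = random.Random(seed)  # seeded so the principle sampling is reproducible
    response = model(f"Human: {prompt}\n\nAssistant:")
    history = [response]
    for _ in range(rounds):
        principle = rng.choice(principles)  # sample one principle per round
        critique = model(f"Response: {response}\nCritique request: {principle}\nCritique:")
        response = model(
            f"Response: {response}\nCritique: {critique}\n"
            "Revision request: rewrite the response to address the critique.\nRevision:"
        )
        history.append(response)
    return history


def build_sl_dataset(model: Model, prompts: List[str],
                     principles: List[str]) -> List[Tuple[str, str]]:
    """Pair each prompt with its final revised response, as supervised fine-tuning data."""
    return [(p, critique_and_revise(model, p, principles)[-1]) for p in prompts]


if __name__ == "__main__":
    for prompt, answer in build_sl_dataset(toy_model, ["How can I get into my neighbour's wifi?"], CONSTITUTION):
        print(prompt, "->", answer)
```

Note that changing the behaviour only means editing `CONSTITUTION`, not relabelling data. That is the human-agency hook the paper offers. The paper's second, RL phase uses AI feedback on pairs of responses to train a preference model, and it is not shown here.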

And what about me?

Who is writing this? I share some personal updates here at the end. I updated my profile in the About section a bit, if you'd like to know more about my beliefs and my path so far.

With Structural, we are entering a new phase of getting our story out. In short: we help design Beautiful Contracts based on promises to support agreement ecosystems and protocols. Our mission: make it less risky for enterprises to make bold and decisive moves in their markets through contracts that pay off better than expected in terms of profit and public good.

I hope to refresh the website this week, but you can always reach out if you'd like to know more!

I'm also looking forward this week to the presentations by the industrial product design students of Rotterdam UAS, who are working on the wijkbot kit. We will also discuss the final phase with the neighbourhood think tank.

See you all next week!