Weeknotes 244; self-discussing robot intelligence

hi, y’all!

Are you looking forward to a cage fight between Zuck and Musk? What is that all about? I am not even interested enough to dive into the backstory, so let’s move on quickly to more interesting things…

Last week, I was able to join Mozfest House on Wednesday. I missed the presentation and interview session with Frances Haugen, the Facebook whistleblower.

Mozfest did a great job of ending the event on a high, with an impressive and moving performance by Toshi Reagon and friends that distilled the focus on trustworthy technology and inclusivity. The whole atmosphere strongly resembled the ThingsCon feel, with intense sessions all over the place. I missed a central place where you meet everyone and feel the size of the community (about 500 attendees in total), but nevertheless, all kudos to the organisation.

As a final general observation, I found it remarkable how American it felt: the alternative scene of the US, that is. There were not that many Europeans (or they were not so vocal), and definitely not many people from the Dutch community.

As for the sessions I attended: the research presented on the Algorithm Register in Amsterdam and citizens' attitudes towards it was insightful. 87% of respondents know what an algorithm is, so the research population might be somewhat skewed; still, it is clear that citizens need more agency to deal with the output. Some groups stressed the need for citizens to be able to find more information, focusing on transparency more than contestability. In our group, we discussed the need for better registration of the intentions of the algorithms, more than just putting them in a list. The current level of explanation is weak, to say the least. Presenting the intentions to a board of citizens in a dialogue, with the power to demand more information, would be a route to explore.

I attended other sessions on kids' rights and robots, and lightning talks on shared digital public spaces (I did not capture many notes, but there was a project exploring all human values in a framework, and a commons network statement). Some presenters were solving all problems with Web3 post-platform solutions, which felt rather tech-focused.

The last session I followed was by Serife Wong on Generative AI: Hype Busting and Best Practices. She kicked off by reading her short column “The Origin of Clouds”, and I plan to follow her work. AI is weird.

A large part of the discussion was about the role of generative AI in trust in general. An interesting case is Amnesty's, which used a fake picture of a protest to protect the real protesters. Is this a good use, applied with the right care, or does it undermine overall trust? The verdict was the latter. There are tools to recognise fakes.

Later that week, I attended an afternoon session by Bold Cities on the Real Smart City, where a white paper was presented, illustrated by three short interview rounds led by Inge Janse. To keep this newsletter compact, here are the take-aways as concluded by Liesbeth van Zoonen, in Dutch: van transparantie naar tegenspraak, noodzaak van een digitale omgevingswet, geen ethiek maar politiek (from transparency to contestability; the need for a digital public space law; politics instead of ethics).

Calendar of Events

A few events for the coming week.

- I would still like to attend the Public Spaces Conference today and tomorrow in Amsterdam, but it never fits my calendar...

- Smart education, in Amsterdam this afternoon, 27 June

- Critical city making symposium in Amsterdam, 4 July

- A tip from one of the readers of this newsletter: TOA in Berlin. He plans to make a podcast of it, so I will share it here in case you cannot make it.

Notions from last week's news

This week's new AI enhancements come from Vimeo, Amazon's warehouse robots, Otter, and the enterprise world in general.

Other short news: new releases from Stability AI and Midjourney (5.2).

There is a new AI boy band in town: Mistral, a French startup that raised over 100M based only on a memo. The people at Every analysed that memo and concluded that there is some good in it, but also some reason for doubt.

Multimodal AI: “AI doesn’t just advance because of new fundamental models, like going to GPT-5; it advances when it is given the tools to operate in the real world.”

Big words from Google DeepMind: its upcoming model will be better than ChatGPT. Power play or real? Earlier, there was the announcement of AlphaDev, where AlphaGo methodologies are applied to a new problem space. Also, new robots can teach themselves without supervision.

This type of learning relates to another much-shared article on how robots learn just by watching other robots do their job: not in real life, but in YouTube videos.

More learning, for general-purpose robots.

The clickbait is real, but the premise points to a potential issue. The first claim is, of course, that AI is replacing the content on the web, on a scale comparable to the web itself. I am not sure about that. The second claim, that this would change business models and user behaviour, seems to make sense. Potentially.


A bit stronger: “What we will see instead is an ‘arms race of ploy and counterploy’ in which the whole notion of objectivity is a casualty of the battle of truths.”

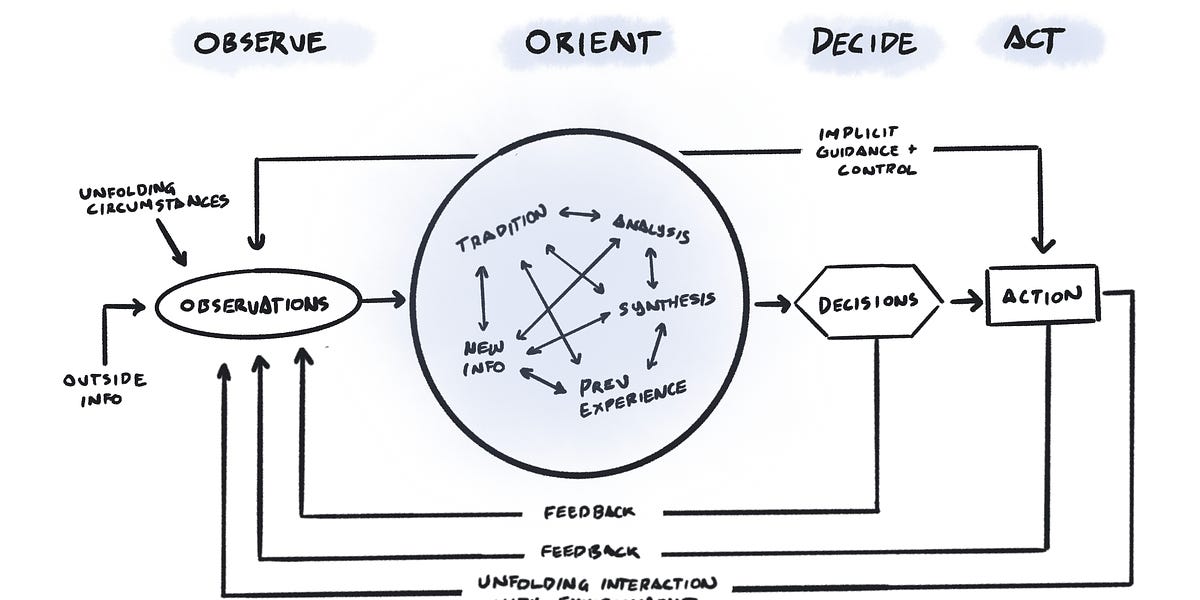

The OODA loop is popping up in different contexts. It is a strong concept indeed. Can it help to create an effective second brain?

“There is too much complexity out there for one brain to handle. It’s time to build a second brain. Tools for thought give us the requisite variety for the agency in complex environments.”

Personalised medicine has been a promise for years, connected to new production methods. If AI speeds this up, that has nothing to do with the core, producing the medicine, but everything to do with the intake: understanding what that one patient needs.

A good example of an indirect effect of AI.

Similar to personalised medicine, the impact this article describes on a more sustainable world, or at least more sustainable AI, is not about the use of AI itself but about the potential improvements to anything. A holistic approach.

SciFi prototyping. “The world is made twice: first in our mind, and next in reality.”

The future?

New materials. Everything can be smart.

I have been a bit of a fan of Yuri Suzuki's work since I saw his music-producing toy train at the LIFT Conference (2011?). This new weird thing is not only pleasant to watch but probably also to use.

Paper for this week

A deeper dive into the risks of LLMs, organised as a taxonomy…

Taxonomy of Risks posed by Language Models

This paper develops a comprehensive taxonomy of ethical and social risks associated with LMs. We identify twenty-one risks, drawing on expertise and literature from computer science, linguistics, and the social sciences. We situate these risks in our taxonomy of six risk areas

Laura Weidinger, et al. 2022. Taxonomy of Risks posed by Language Models. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency (FAccT '22). Association for Computing Machinery, New York, NY, USA, 214–229. https://doi.org/10.1145/3531146.3533088

See you next week!

Besides all the work on the Structural copilot and beyond, I dedicated some time to the Cities of Things program management and the Rotterdam field lab. I will also attend a kick-off event for AI Labs in Utrecht, a city I also visited last Sunday for some architectural adventures (the Van Schijndel House is a hidden gem in its strict design).

Have a good week!