Weeknotes 263: flocks of packet-switching intelligence

A follow-up on the GPT store, Humane's surface AI, and more notions from last week’s news in human-tech relations, plus events and a paper for the week.

Hi, y’all!

Every week there is a (tech) news event that defines the debates. Last week it was Humane. The AI in your pocket… Interesting and cringe at the same time. Some thoughts I shared earlier via Mastodon/BlueSky:

Nice to read the impressions from the Humane demo by @caseynewton

How he is appreciating (again) the clear form factor of the phone. The quest for the position of wearable AI.

Will it be just another touchpoint to the intelligent cloud like the watch? Or is there a new space?

I think the voice interaction and palm-of-the-hand tricks are blocking the view of the real new (potential) interaction principle: turning every surface around you into an AI touchpoint.

Platformer

But the real AI debate was driven by the aftermath of the OpenAI dev event, which I mentioned briefly last week, as it was held just the evening before the newsletter dropped. The analyses of the event all agreed on its impact and on its positive format, which brought back some of the feeling of the early Steve Jobs. Sam Altman has a charm, a very different one from Jobs, but the move away from a heavily produced product introduction was especially refreshing. More important was what was introduced.

There is an interesting connection, as mentioned in The Verge Podcast: it was strange that there was not the slightest reference to Humane AI, while Sam Altman is one of the investors. Of course, he would not want to distract from the main messages, but a side note, or at least a mention of physical AI as a development, would have fit.

Triggered thoughts

While listening to Pivot discussing the OpenAI keynote (not sure what triggered it), I was thinking that the first wave of AI apps is a new kind of search through chunks of data produced by people, creating knowledge, new versions, etc. Think of all podcasts as transcripts, and the work of writers and researchers.

Doing synthesis based on content databases instead of ‘just’ search. I remember that Google attempted years ago to mix search with a knowledge graph of trusted sources (based on a quick look at old articles); it never took off. Around that same time, we explored a platform for knowledge workers.

Will this happen now in a different format? A GPT for all my collected links and documents will not only deliver a richer knowledge base if I can have a chat with the knowledge LLM (PLM); I am also curious about what new learnings will emerge and even what it will tell me about my interests… As a start, I began uploading my docs to Notion; I cannot imagine they will not build that function in seamlessly. And in the meantime, I will dive into the GPT builder.

Events

- 13-19 November: v2 - Rotterdam - presence-(un)precence

- 15 November - Rotterdam - The Hmm @REBOOT

- 16 November - Amsterdam - Just.City.Amsterdam

- 16 November - Online - IFTF Foresight Talks: Dreams and Disruptions: Gamification for the Future

- 17 November - Amsterdam - AI for Good on Machine Learning Labor

- 17 November - London - Future of Coding meetup

- 20 November - Copenhagen - Envisioning the Circular City of Tomorrow

- 21 November - Hilversum - Cross Media Cafe: De Stem van AI

(And an update on the event I organise myself)

- 15 December - Rotterdam - TH/NGS 2023; un/ntended consequences. Program: second keynote Bas van de Poel announced, 11 sessions confirmed, 15+ projects at the exhibition

Notions from the news

As mentioned above, the announcements of OpenAI got a lot of attention. How to…

Opinions from Ethan Mollick, Kevin Roose, Dan Shipper, Ben Thompson

In the meantime, others are announcing too, like Samsung, which is planning a ChatGPT alternative named Gauss. Elon Musk already did so last week with Grok.

Co-pilots have a business case, apparently. For GitHub it is a moneymaker.

The development of faster or smarter chips continues. Next-gen AI chips will mimic the human brain. I am not convinced that is the way to go; as I noticed while watching one of the latest “Two Minute Papers” videos, ChatGPT is making the same mistakes as humans, which should be unnecessary for something with a different concept of perception. Mimicking humans is apparently more important than being a complementary power. What a time to be alive…

In the meantime, we are getting more and more confused

The chip is a crucial part in the AI competition… And a new arms race :-(

How-tos are getting popular, which in itself proves the popularity

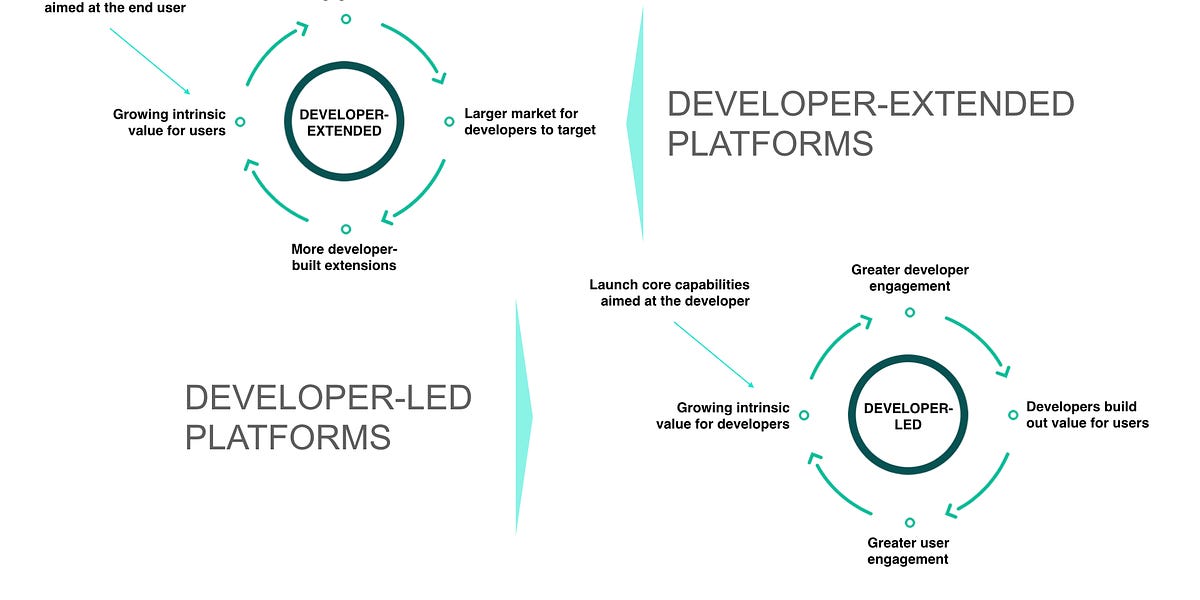

Will DX be more important than UX?

Speaking of changing trends, IDEO is cutting staff, which can be linked to the position of Design Thinking: we could very well be **beyond peak Design Thinking**, according to Marco van Hout. And further reflections by Tobias Revell and by Louisa Heinrich.

AI might not take over the world, but it might cause a financial crisis, Harari thinks. And Noah Smith on how liberalism is losing the information war.

Did I mention the potential emergence of sweatshops for knowledge to feed the AI? Or do we like to call it partnerships? And we need new evaluation methods

New AI copilots from Instagram, FigJam, GitHub, Waze

Personal Digital Twins were a thing already, but they are increasingly becoming the intelligent reference, especially for personal health treatment.

Robots are getting more attention and benefiting from the Gen AI wave (“the GPT moment is near”), which becomes clearer every week. Robots are also expanding their fields of work by adopting emotional designs.

And the dangers of living with robots are clear, too.

Self-driving cars are the perpetual promise… Here, too, we need humans to help the machines.

Making spatial video with the new iPhone works well; John Gruber was not disappointed at all, including by the impact of the experience on a second use, as he mentioned on the Dithering podcast. Interestingly, he described how traditional panorama pictures become 3D experiences. Good to know, as I like to capture moments in panos.

For a reflective moment:

Is Singapore the poster child for the solarpunk city? Something different from a sponge city.

Paper for the week

In case I dive further into LLMs, I expect this to be an insightful paper: A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions

In this survey, we aim to provide a thorough and in-depth overview of recent advances in the field of LLM hallucinations. We begin with an innovative taxonomy of LLM hallucinations, then delve into the factors contributing to hallucinations. Subsequently, we present a comprehensive overview of hallucination detection methods and benchmarks. Additionally, representative approaches designed to mitigate hallucinations are introduced accordingly. Finally, we analyze the challenges that highlight the current limitations and formulate open questions, aiming to delineate pathways for future research on hallucinations in LLMs.

Huang, L., Yu, W., Ma, W., Zhong, W., Feng, Z., Wang, H., ... & Liu, T. (2023). A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. arXiv preprint arXiv:2311.05232.

See you next week!

I will be busy with Wijkbot meetings and student coaching sessions, ThingsCon preparations, and most of the time writing a new policy agenda for the creative industries… And depending on time and weather conditions, we might check out Glow, a yearly tradition…