Weeknotes 265 - broken down institutions and endangered guardrails

The weekly update looks back on the latest developments in AI, robotics, intelligent environments, relations of humans and technology, and more (than human).

Hi, y’all!

I was already a bit pessimistic about the outcomes of the Dutch elections last week, but it became even worse. The extreme right-wing party has gained even more support and become the largest party (in case you are not Dutch and missed the news). What the outcome for the government will be is still to be seen, but it makes me think about how to incorporate discussions and actions into the projects I am working on… Back in 2016, in the aftermath of Trump's election, we organised the Tech Solidarity sessions to cope with an event that was still relatively far away. I'm not sure yet how to cope with one this close. For this newsletter, it triggered some thoughts related to its core theme: human-tech relations…

Just two podcasts representing the sentiments: "Damn, Honey" and "Waanzinnig Land".

Triggered thoughts

The election outcome looks like part of a longer-developing trend of populist parties taking control in Europe and worldwide. One thing happening in the Netherlands right now is a shifting Overton window. It has become less problematic/shameful to vote for these types of parties as other, more mainstream parties opened the door for collaboration, a shift reinforced not only by the election result but also by the media's responses.

The outcome of negotiations will likely be a catch-22; not having the PVV in the government will frustrate the frustrated voters even more in the future, confirming their distrust. Having the party in the government will start to break down the trust in democracy as a whole, as we will have a party in the government that does not subscribe to the democratic system at all.

Apart from the question of which option is best, there is a link to make with the whole discussion on AI governance and the still-running aftermath of the OpenAI crisis (or turmoil). I think that most people believe that the best party to control the consequences of AI development is a government body rather than a commercial organisation. One of the lessons of the OpenAI crisis is that a governance structure based on public values is hard to secure in times of turmoil. What if there was indeed a development with GPT-5 that makes a big leap towards AGI and would potentially endanger the public interest? Think of Q*, as mentioned below. Whatever happened, for the sake of the argument, it is clear that the company's designed guardrails failed.

But with all the moves towards potentially authoritarian regimes in traditionally democratic countries, we can't feel certain anymore that government is the best option. It is good to learn that the employees of OpenAI managed to play such a big role (a form of Tech Solidarity), regardless of their real intentions… Designing the protocols and systems is more important than ever.

Benedict Evans has a similar conclusion at the end of his newsletter this week.

The bigger irony, though, is that all of this drama came from trying to slow down AI, and instead it will accelerate it. The now-former board wanted to slow down OpenAI’s research and slow down the diffusion of its technology in apps, ‘GPTs’ and agents. Instead the accelerationists are firmly in charge of OpenAI, at least for now, and the rest of the industry has just had a huge kick in the backside to proliferate and accelerate all of this as much as possible. A half-baked coup by three people might be more consequential than all the government AI forums of the last six months combined. Failed coups often do that.

No final conclusions here, let’s hope we can turn the events into energy to push back on a potentially developing perfect storm…

Events calendar

There will be events.

- Immersive Tech Week in Rotterdam, with different programs for those into VR and beyond.

- 28 November - Wageningen - Symposium Positive Energy Districts

- 29 November - Product Tank AMS on data https://www.meetup.com/producttank-ams/events/297437877/

- IoT London, 30 November. https://www.meetup.com/iotlondon/events/296946520/

- 30 November - AMdEX acceleration event https://dmi-ecosysteem.nl/events/amdex-acceleration-event-mis-het-niet/

- If you happen to be around Milan, a lifesize version of the intriguing mapping by Kate Crawford and Vladan Joler.

- From 30 November (opening) - v2 Rotterdam: {class} on consequences in algorithmic classification

- 30 November - Amsterdam - Pinch on Responsible apps and other digital dilemmas

Notions from the news

“Get the obvious ideas out of the way, and together, we’ll come up with the good ones.” Ideas and developing technology can take a while but need work.

The three laws of protocol economics.

AI.

It feels like the storm around OpenAI has died down a bit. Or has it? A lot of articles still reflect on the whole saga: on the role of Ilya Sutskever, and on the impact on AI progress and safety. Ben Thompson hopes to move forward again. “What does seem likely is that this story isn’t over: there is that investigation, and new board members to come, and eventual revelation of what Q* is; for now, though, I look forward to getting back to more analysis and less backroom drama.” We’ll see…

Venkatesh Rao is connecting it to a long exploration of new types of religions. “The saga doesn’t yet have a name, but I am calling it EAgate, after Effective Altruism or EA, one of the main religions involved in what was essentially an early skirmish in a brewing six-way religious war that looks set to last at least a decade.” https://studio.ribbonfarm.com/p/the-war-of-incredulous-stares (paid).

Cory Doctorow: “Effective Accelerationists and Effective Altruists are both in vigorous agreement about something genuinely stupid.”

On Q*. Was there a breakthrough before the OpenAI turmoil? Q* (Q-star), but is it a real breakthrough? “I got 99 problems I’m worried about (most especially the potential collapse of the EU AI Act, and the likely effects of AI-generated disinformation on the 2024 elections)”

“Reshaping the tree: rebuilding organizations for AI”. Ethan Mollick is exploring future organisational structures influenced by AI.

There are more parties than OpenAI, of course. The makers of Pi claim to have the second-best LLM with their new release, Inflection-2. Bard can read videos. Anthropic releases Claude 2.1 with less hallucination. Stability AI goes into games and the next leap of generative video. And Grok (from X) is released next week. If it is not delayed :)

"Our tendency to anthropomorphize machines and trust models as human-like truth-tellers — consuming and spreading the bad information that they produce in the process — is uniquely worrying for the future of science."

Robots.

Wearable robots for better walking

A robot dog to comfort humans on a space mission.

AI robots for agriculture. On the rise in Japan, apparently.

And cobots are still the expected first useful iteration of robots in our daily (work)life

Extensive report on a robotics summit discussing the current and future state of the industry

Resurrection through robotics.

Tripping on Utopia. Robot terror, as seen in the 1930s, with some nice images.

Smart home and other connected things.

“Business arrangements and opaque contracts have broken the pitch of the smart home. Better regulation can fix this problem.” It's good to find Stacey still keeping track of the developments in the smart home.

Ink&Switch does great research into all types of interactions. With Embark, their latest published project, they explore dynamic documents for making plans. Nicely mixing in real-world experiences in creating digital tools, and the learnings are in the thinking process.

Misc.

Is this the world upside down? Building as nature.

The season of silly gifts…

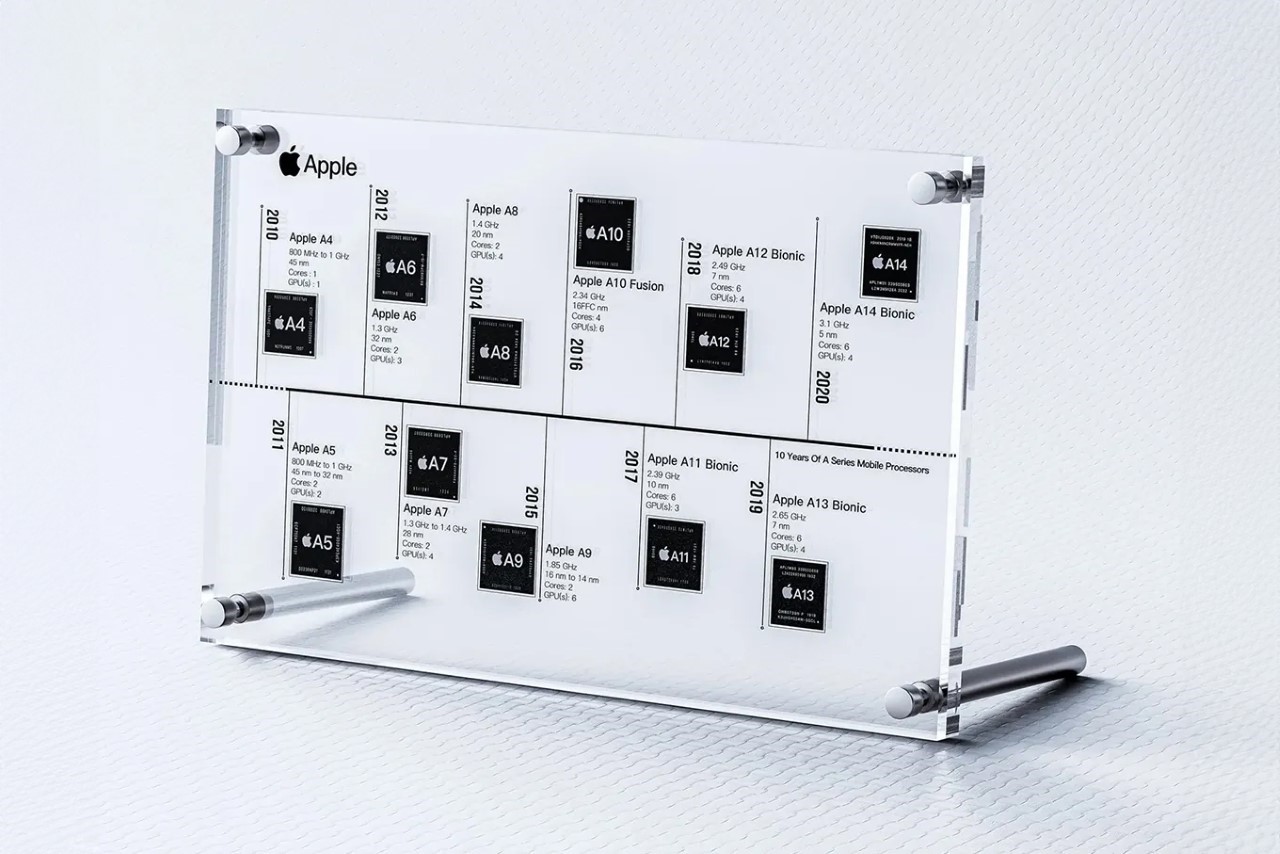

But also very nice and tempting ones; I have a couple of these pocket versions; this is next level…

Paper for the week

I listened to a short podcast on this paper, and it made sense to share it here: Machine Learning and the Reproduction of Inequality, in which sociologists reflect on how machine learning is influenced by human biases.

Machine learning is the process behind increasingly pervasive and often proprietary tools like ChatGPT, facial recognition, and predictive policing programs. But these artificial intelligence programs are only as good as their training data. When the data smuggle in a host of racial, gender, and other inequalities, biased outputs become the norm.

Alegria, S., & Yeh, C. (2023). Machine Learning and the Reproduction of Inequality. Contexts, 22(4), 34-39.

https://journals.sagepub.com/doi/full/10.1177/15365042231210827

See y’all next week!

Don’t forget to check the almost complete TH/NGS 2023 program! Really looking forward to the mix of 12 sessions, 2 keynotes and more than 20 exhibition contributions.

Next week, I will update you on the projects as we enter a new month. Have a great week (and/or keep tight)!