Weeknotes 276 - Is the end of prompt engineering near?

This week, the latest updates on AI tools and, specifically, the new approaches to unlocking generative AI. And much more, from robotics to proxy citizenship.

Hi, y’all!

Welcome to the newsletter, especially the new subscribers. There is a lot of interesting news this week, going beyond the current platforms, with newly introduced AI capabilities, like making movies, that also reached mainstream media. At the same time, Google is upgrading Gemini with some interesting features that might change prompt engineering, which definitely triggered a thought!

Before I continue, let me know if you want to dive deeper into these topics or create specific (near) future studies. Check out the Target_is_New website to find out what is possible.

Triggered thoughts

Apparently oio.studio worked on Google’s new Gemini interface. That made me curious, as I know them for their interesting thought experiments, as well as real experiments with more-than-human futures, partnerships with autonomous creatures, and creative AI overall.

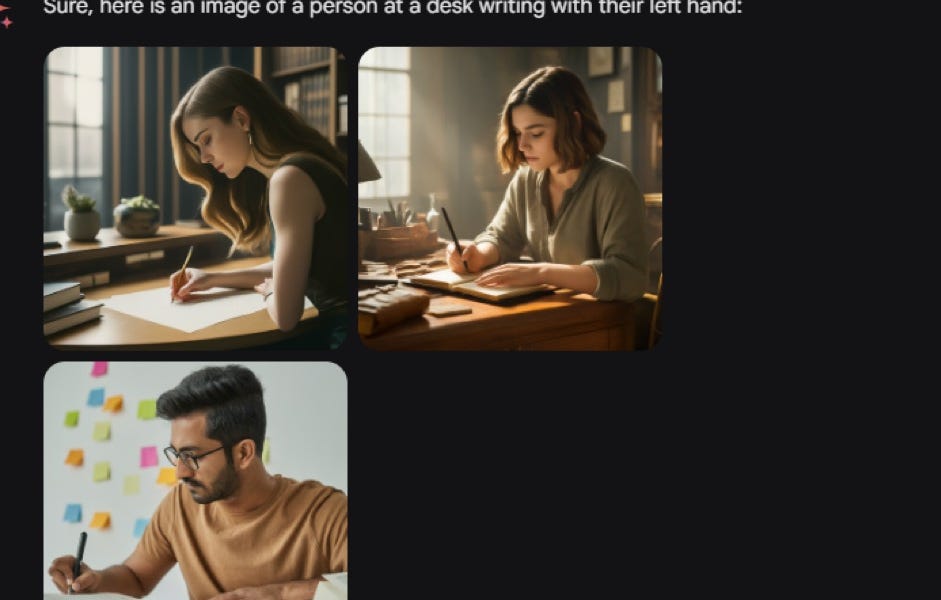

The Gemini explanation shows how they create a reasoning interface that first debriefs the intention behind the human input. It makes me think about how our interactions with machines become more human, as we no longer need to adapt to machine-like thinking to get the best results. We got used to adapting our thinking a bit to the machine way of thinking, like with search engines. The first step was the translation from natural language to machine-understandable queries in the chat interfaces. Gemini seems to go further by adding an extra layer of ‘understanding’. So it is not only the interface that changes, as with chat interfaces; the internal reasoning is also designed differently. In the demo, you see how, under the hood, Gemini tries to make sense of what type of question it really is and adapts the interface to that type.

Would this mean that the profession of prompt engineering is ending soon? For now, the example shown focuses mainly on presenting the search results. It is unclear if it is adjusting the findings, too, and if there is real understanding (see below). As a new approach to shaping the interface, it follows what Perplexity.ai is also trying (check out the interview with the founder on last week’s Hard Fork): finding a new form for building relations with our AI partners.

Events to track

- 21 Feb - Amsterdam UX - Contestability in AI with Kars Alfrink

- 21 Feb - Amsterdam - Sensemakers 3D Vision

- 23 Feb - Amsterdam / Rotterdam; Creative Morning sessions in both cities

- February - Amsterdam - Sonic Acts festival and exhibition

Notions from the news

Why? Cringe. Also, it is interesting how he is trying to enter AR, where VR has always been the main use. While Apple is keeping its distance from reality, hiding branding…

This week on AI

OpenAI to start. Most attention went to the newly introduced text-to-video model Sora. You need enough computing power to create distributed movies, but it is definitely impressive. And it is now part of mainstream media, with attention on the potential ‘unintended’ consequences of fakes. As always, it will probably not be the fake world leaders that cause the biggest impact, as there will be guardrails for that. There are many more indirect effects. Bullying will get another dimension, fakes of trusted officials can persuade you to make certain decisions, etc. There is a promising future for fake-scanning software (like virus scanners). Unhide the hidden dangers…

Sora is stimulating interest in Worldcoin, that other Sam Altman project.

And Google is also updating Gemini. Battle of the Giants.

Advanced Machine Intelligence (AMI, pun intended?) for self-supervised learning.

The now mundane chat-based interfaces are getting more and more enhanced and trustworthy. Long memory makes a difference in building a relationship with your AI buddy. Or AI Valentine.

At the same time, we are still at the beginning, and even easy tasks like creating interesting travel plans deliver boring outcomes. In my experience, it takes some (chat-)work to get inspirational suggestions. It is just like the real world; the generic advice is the default, and real ‘geheimtips’ (insider tips) need extra work.

Statistics versus understanding. There is a consensus that current generative AI does not really understand what it is generating or even what it is using as a reference to generate. On the other hand, what is understanding? Is it not understanding because of a different model of thinking or the lack of experience? What role does real, embodied experience play in understanding? And how do we relate to hallucinating systems, where we have summaries without the original?

Philosophy might help out when our society is getting weird.

I remember that there have been computational music-composing buddies before, but now we have AI to add to the mix…

In case you want to keep track of the developments around the EU AI Act, there is a dedicated newsletter.

And some potential positive (or optimistic) news on the AI footprint.

Some tools and services are newly AI-enhanced, like Slack and Reddit, and some existing AI tooling has been updated, like Stability AI. For the Dutchies, Erwin has been the master of finding tools for cyber punkers (making creative output with off-the-shelf tools, aka low code) for decades, and now he is keeping track of all interesting AI-based tools.

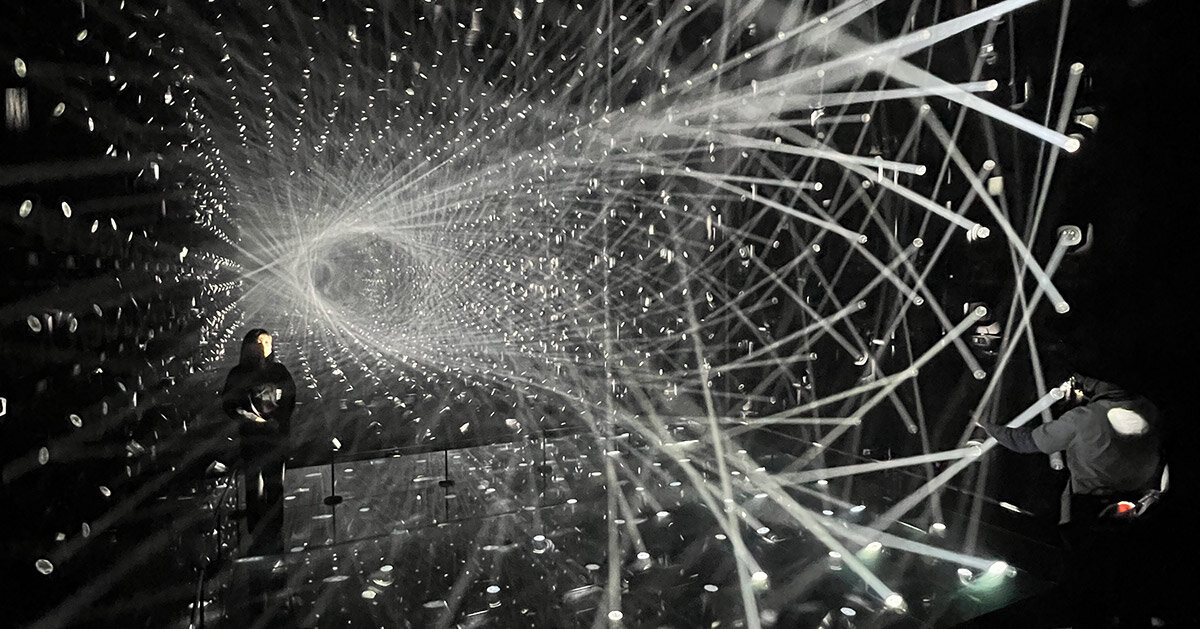

Where Erwin is often creating very functional applications for self-organising and publishing, Matt Webb is almost creating an art installation with the same punk attitude.

Robot and autonomous round-up

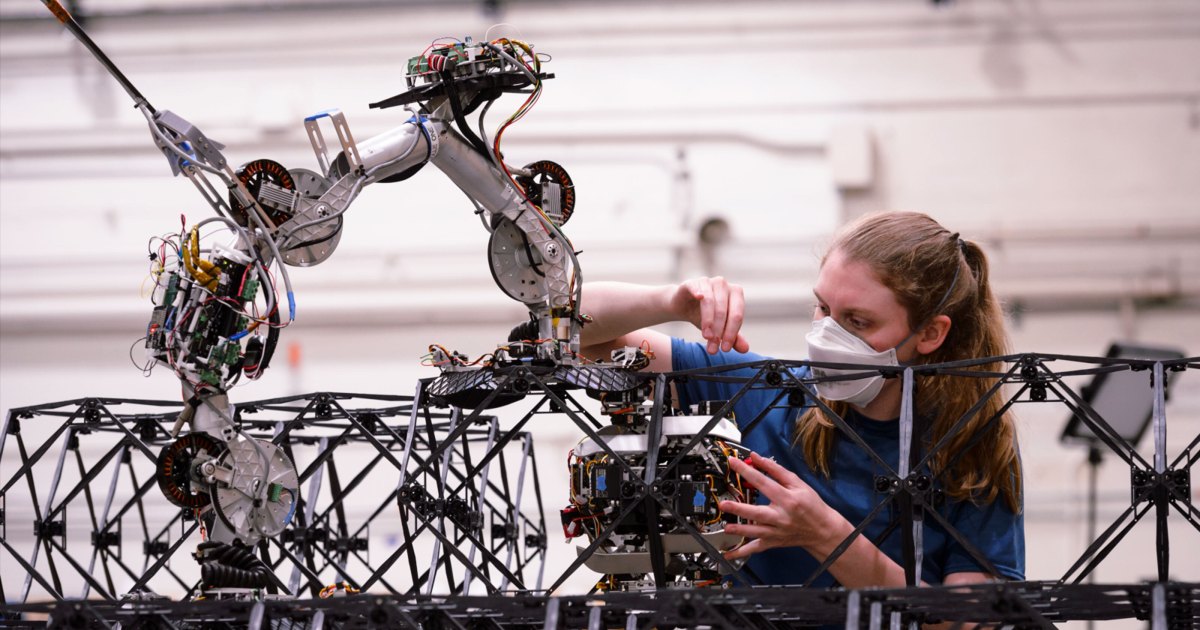

When I read about autonomous space robots, I wonder if they are our delegated workers in space, or more like a new breed of species.

What do you think about this application of the robot dog as guidance for the visually impaired? Does it make sense to use the dog as an archetype here for better acceptance both for the visually impaired and the outside world?

We need a new narrative for autonomous vehicles.

Platforms and beyond

I hope I find time to watch the talk of Cory Doctorow at Transmediale.

“So what's enshittification and why did it catch fire? It's my theory explaining how the internet was colonized by platforms, and why all those platforms are degrading so quickly and thoroughly, and why it matters – and what we can do about it.”

Apple has a better image than other big platforms, but there is a danger they will do serious harm to the open web principles.

I agree with Peter that the good old social internet (aka web 2.0) is in a transition, whether it is pure fragmentation and reformation or a shift to something new (for years, we have predicted private social networks to become dominant).

For a student design minor on interactive technology at Delft University of Technology, themed future citizenship, Cities of Things is commissioning an assignment to think about proxy citizens: robotic-like ‘digital twins’ creating new social city fabric in neighbourhoods. Curious what the results will be…

Just nice

If you happen to be near Tokyo…

Paper for the week

Large Language Models: a Survey

The research area of LLMs, while very recent, is evolving rapidly in many different ways. In this paper, we review some of the most prominent LLMs, including three popular LLM families (GPT, LLaMA, PaLM), and discuss their characteristics, contributions and limitations.

https://doi.org/10.48550/arXiv.2402.06196