Weeknotes 280 - multimodal AI as an act of performance art

Apple is hinting at how its AI plans could stand out, and James Bridle has created a new project. Are human-AI interactions like performance art? And more notions from the news and the event calendar.

Hi, y’all! For the new subscribers or first-time readers: welcome! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and I have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things, and I organise ThingsCon. In this week's newsletter, next to the notions of news on AI, robotics and beyond, I reflect on thoughts triggered by the paper on Apple's newly announced MM1, combined with thoughts triggered by two artists: Marina Abramovic and James Bridle. I end the newsletter with some possibly exciting events for this week.

Triggered thought

The new battlefield is multimodal AI; I mentioned it before, among others with the new Rabbit device (edition 275). It is a logical successor in the process from chat to agents. Apple published a research paper last week to claim some of the space here. It would be wise for them, of course, to get a foot in the door specifically in that domain; with its huge installed base, this is where Apple's potential unique proposition lies… Ben Thompson wrote about it in his update this Monday: “MM1 is a family of models, the largest of which is 32 billion parameters; that’s quite small — GPT-4 reportedly has ~1.7 trillion parameters — but performance appears to be pretty good given the model’s size. Unfortunately, the models were not released, but the paper is chock full of details about the process Apple went about creating the models and appears to be a very useful contribution to the space.”

Check out the published paper, and some articles on MM1, like this and this.

Another way of thinking about AI connected to physical-world interventions is described in a lovely new experiment by James Bridle: AI Chair 1.0. How do you prompt AI for instructions on how to design a chair from a pile of scrap wood? And they actually build the chair following those instructions.

Browsing the article, I was reminded of the recipes that Marina Abramovic creates for her performances. Last weekend I visited the exhibition in Amsterdam; the well-known performance works are intriguing and make you think and feel as you absorb them. An extra asset is the set of recipes hanging next to the photos or videos of the works. These clean instructions are a key part of the experience. The AI Chair is also made through this kind of instruction, so you start to wonder: is the interaction of generative AI with humans, as soon as the real world is part of the equation, an act of performance art? What does that act of interacting mean? Who is the creator of the performance?

Notions from the news

SXSW Interactive wrapped up around Wednesday. I still need to find a good summary, but my impression is that a shift is happening at the ‘festival’ as well: from AI as a text-based tool to more integrated solutions, and especially a different relationship between humans and AI. But this might be my biased view, of course…

Of course, you can ask an AI, for instance Perplexity: “SXSW 2024 presented a nuanced view of AI and digital technologies, balancing excitement for innovation with critical discussions on ethics, safety, and the human impact.” It does not feel like it touches the fringes, though.

Asking specifically about robotics, AI-enabled robots and swarm robots were apparently a thing. I am curious to see if the current focus on humanoids will still be there in one or two years. Is there a Robot Renaissance happening?

Good old-fashioned IPO news: Reddit is taking the step to go public, triggering discussions on the role of its authors.

Can you handle more AI news? AI in robotics, in chats, and in making sense of physical space. Think of the classic augmented reality use case, where objects in the real world are enhanced with knowledge from the digital world. In the ‘old’ AR, this was based on GPS positioning, creating pointers that the user could activate. Now, with AI, Ray-Ban Meta glasses can read the world around them, and if there is a famous landmark, they will start telling remarkable facts about it. That is the promise, at least.

Next, generative AI will be used to create a funny story based on the current situation, like a live audio guide.

Is model commoditization already happening? Are the different models becoming less distinguishable?

How will the Moral Machine change with LLMs in power?

The news in hard tech now centres on platforms for powering AI; NVIDIA is entering a new phase.

Living with AI…

What will a future with AI look like? Are we indeed delegating all risks to the AI?

How can AI help fight climate change? Nine ways. Number 10 could be, of course, to switch off the carbon-consuming AI…

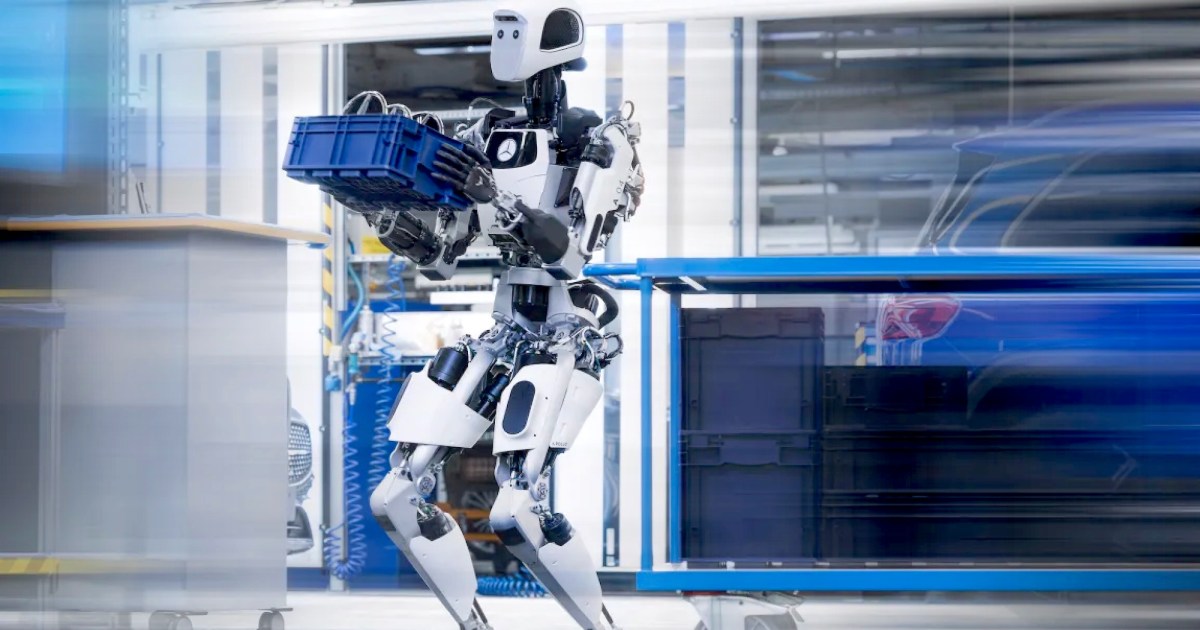

On the humanoid side of things, Apptronik appeared regularly in the news from SXSW, both on its own and through its partnership with Mercedes.

Which one to use? Superpowers compared

“As the web becomes an anaerobic lagoon for botshit, the quantum of human-generated “content” in any internet core sample is dwindling to homeopathic levels.”

Cory Doctorow on the “coprophagic AI crisis”

Looking forward to this book on co-intelligence.

…and robots

Another kind of human-robot relationship…

Tailor-made fashion will become a different thing.

In the meantime, humanoids are grabbing all the attention. This video is kind of creepy and disturbing, especially in the interaction between humans and robots. On another level, you see big manufacturers adding humanoids to their production processes.

How will we treat robots?

“The study's key finding reveals a fascinating aspect of human perception: advanced robots are more likely to be blamed for negative outcomes than their less sophisticated counterparts”

Job disruptions fuelling

Are robot companies the next frontier for investors?

Cyberpunk design has been all the rage for several years now. Now we are entering Solarpunk for real, according to the Figma CEO (interview). Compare the Cybertruck and the Rivian R3…


Paper for this week

If you’d like to dive into a book: here is one on “Future Intelligence” (open access); it “Curates expert opinions analyzed alongside crowdsourced views on the state of our world in 2050”.

But this paper is more compelling to reflect on. “Often overlooked in contemporary debates on machine learning and AI, we argue that Schumacher’s 1973 book, Small is Beautiful, offers a series of insights and concepts that are increasingly relevant for the development of a humanist politics under conditions of computation. With a particular focus on Schumacher’s account of ‘intermediate technology’, we suggest that his emphasis on the social role of human creativity and identification of scale as a crucial concept to deploy in critiquing technology together provide a unique framework within which to (a) address the rise of what we call ‘pathologies of meaning’ and (b) offer a powerful way to consider alternatives to the gigantisms of the FAANG (Facebook, Amazon, Apple, Netflix, Google) and Silicon Valley-style ideologies of digital transformation.”

Berry, D. M., & Stockman, J. (2024). Schumacher in the age of generative AI: Towards a new critique of technology. European Journal of Social Theory, 0(0). https://doi.org/10.1177/13684310241234028

Have a great week…

These last two weeks of March are dedicated to deadlines: rounding up the Cities of Things Lab010 project and delivering the first phase of a new coalition plan and program, among other running projects and activities. I also try to find time to connect with people for future projects.

You can also check out the Abramovic exhibition, of course, but there is still time. Speaking of events: this evening, there is an online session on Slow AI (which I will miss…). Later this week is the symposium Transformation Digital Art, on 21 March in Rotterdam and 22 March in Amsterdam.

On Wednesday, 20 March, there is another talk in the critical futures series.

Studium Generale Delft’s “For Love of the World” looks into the more-than-human with Elisa Giaccardi, who is back in the Netherlands (23 March). Next Tuesday in Amsterdam, there is a session on responsible AI & uncharted design leadership.

Enjoy! See you next week.