Weeknotes 284 - LLM devices; magic or magicians?

Exploring thoughts on interaction paradigms of LLM devices, and catching up with the latest news on human-AI partnerships, robotic performances, and immersive connectedness.

Hi, y’all!

There was a solar eclipse that was clearly visible in a heavily mediated part of the world, so it took over the news. It was a special occasion, as the moon and the sun now fit perfectly (the factor 400 is important here: the sun is about 400 times wider than the moon, but also about 400 times farther away). This will not happen again for some 1200 years, and it did not happen in the last 1200 years either. It makes people think about what remains and what changes over time… A world with different speeds of consciousness.

I am thinking about how to build a bridge to this week's triggered thought on LLM-based interaction paradigms. Let me make a slight detour first. I was at the STRP Festival last Friday, a yearly ritual I try not to break; the festival always collects interesting art pieces. This year, I think half of the 12 works stood out. The immersive experiences were the important ones: one put you in a Hunger Games-like situation with the other visitors of that day. There are always some artist talks as well, and I attended Ling Tan's talk explaining her work "Playing Democracy 2.0". The multiplayer game lets you make decisions that shape the playing field, which has a great influence on the gameplay. Through relatively basic rule-setting play, it worked really well to make you think about consequences. Keep this in mind while considering the role we will give LLMs in helping us with decision-making…

Triggered thought

A recurring category of new products is task-based, multimodal, physical, spatial, AI-enabled devices, like the Rabbit and Humane's AI Pin. Review units of the latter were delivered to tech journalists last week, and their verdicts were not exactly 5 out of 5, to say the least. The Verge's review is rather telling: it is a promising category, and the device even strengthens the belief that there is a need for it, but the device itself is not even close to delivering on its promises.

![Humane AI Pin](/cdn.vox-cdn.com/uploads/chorus_asset/file/25379325/247075_Humane_AI_pin_AKrales_0144.jpg)

A central problem seems to be the choice to make LLM-style interaction through a cloud-based architecture the primary way of interacting, even for requests that do not need an LLM at all, like asking to play a song. It makes you wonder if LLMs as touchpoints are the future. If the quality is right and the understanding of commands is flawless, there is a strong case for it, as it creates a much more flexible and forgiving interaction protocol. In the best execution, it helps resolve unclear requests by creatively asking follow-up questions and making suggestions, making it almost human, so to speak.

I don't think the review's conclusion that LLMs are overkill, regardless of the current state of technology and hardware, is right. It is true that the current state of tech and execution makes LLMs a bad choice for now, but as a direction for thinking about interaction, it is really interesting.

It makes me think back to the Google Glass saga of 2013. As an early adopter who tried to create apps for the device and gave presentations about it, I see similarities in the way both trigger thinking about new interactions. What I liked about playing around with Google Glass was the new interaction paradigm it unlocked: a timely and context-sensitive way of thinking about services on the go. The limited screen of Glass and the way you interacted with it forced you to design interactions around the right moment. It meant rethinking from designing for destinations to designing for triggers. (Check this old presentation, presented here in Dutch.)

That thinking became part of how we design mobile and wearable apps: notification-based interactions, and services distributed over multiple channels that together form a larger service ecosystem. It unlocked the potential of mobile and wearable services. Now we are mixing in a layer of understanding that could change the way we interact with things again. The LLM functions more as a touchpoint to the stored intelligence than as the end goal, and it will also trigger new interaction paradigms of real duplex conversations.

For new subscribers or first-time readers, welcome! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and I have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things, and I organise ThingsCon.

Notions from the news

Let’s try some new categories to structure the news. Human-machine partnerships: you may read AI, but I like to stress the new relationship more than the pure tooling. Robotic performances capture new executions of robots and robotic systems. Immersive connectedness covers both what we used to call the Internet of Things and smart cities, and embraces all immersive technology developments like AR and VR, as long as no robotics or AI is involved. More general societal pieces are collected in the last category, tech societies.

Human-machine partnerships (aka human-AI)

Another week, another round of AI magic tools. Music-making is the hot thing, and Udio is the app of the week. Adobe Premiere is getting generative AI embedded. Dropbox’s AI will help you manage your content. Intercom is enhancing AI-first customer service.

An artistic exploration of the new habits that emerge when machines, like AI assistants, replace the core of our work.

For healthcare, AI might not be ready yet.

In Dutch mainstream news, there were headlines about a human voiceover being replaced by an AI-generated one. TikTok is also betting on AI influencers for advertising. You might wonder if human influencers are already performing like AI, or at least optimizing to feed the AI.

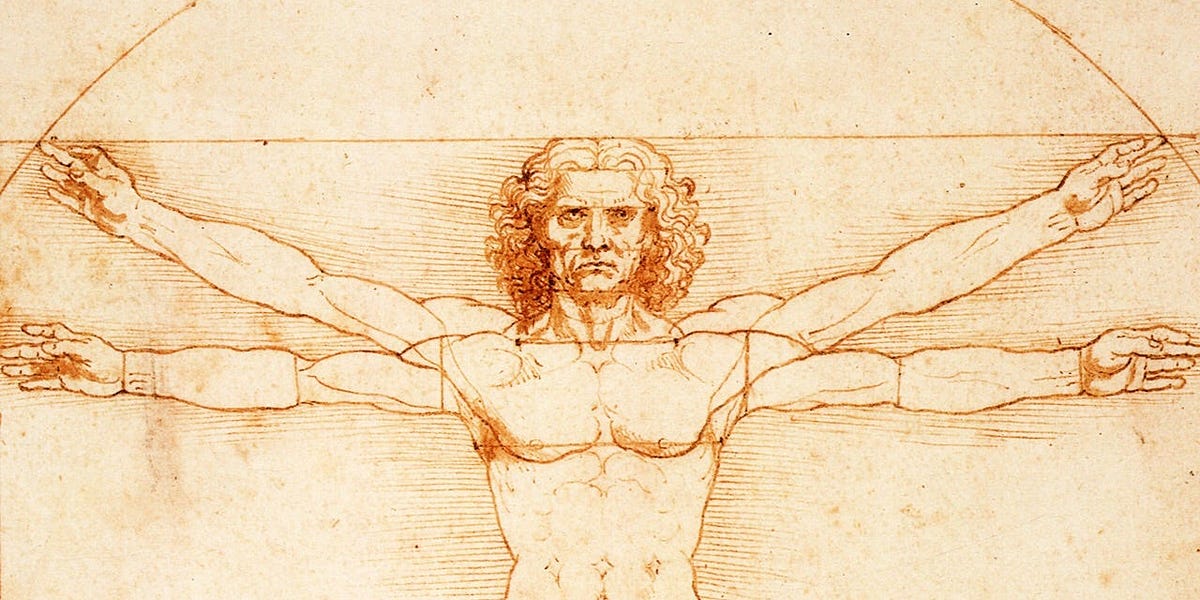

When teaching about AI, you can focus on what makes it artificial, or reverse the perspective and try to articulate what human intelligence is really about.

Another iteration of the AI Chair experiment, this time with other models.

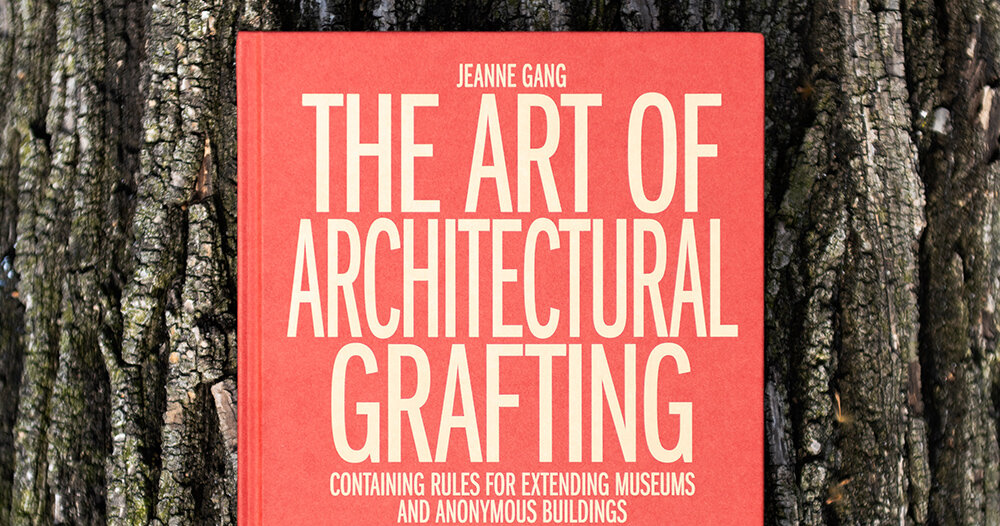

This looks rather nice and enhances existing buildings. I wonder what would happen if you combined this with the AI Chair concept: analyzing a building and letting the AI create the recipe for an enhancement…

What will AI's impact be on our languages? Will we converge on fewer languages because LLMs need a bigger base of training data, or will AI-assisted understanding of different languages create more use for smaller languages?

“The wait for LLMs that can plan might be over, according to the comments by OpenAI and Meta executives. However, this comes as no surprise to AI researchers, who have been expecting such a development for some time.”

In this interview, Anthropic's CEO predicts that AGI might be rather near, or at least not that far away.

Predicting stock markets with big data and algorithms was a promise from some years ago that did not materialize. But what if we use conversational AI to build predictions in concert with human thinking? And how is AI decision-making influencing our own capabilities?

Robotic performances

The next iteration of robotic restaurants, like burger places, will add AI and promise improved taste. Apparently, that is not yet the case at this place.

Last week, I shared rumors about an Apple roaming home robot. What are the chances of success for this kind of product?

For countries with patchwork pavement.

Immersive connectedness

This made me think of the early days of the internet, when we got regular overviews of internet adoption in households, with usage percentages per country. Some research publications were started just for this kind of demographics. Now we have a new technology for a new generation of trend diggers.

It is important to promote repairability to push big device makers to pay more attention to sustainability. Apple is the example here: small steps, but still. It is a pity that these earbuds are not easily recognizable as the better choice when you wear them.

An AR function that shows which areas you have already covered while vacuuming would be great for control freaks, or just a nice helper. Or is this a way of letting the habits of robot vacuums steer our human behavior?

![image](/cdn.vox-cdn.com/uploads/chorus_asset/file/25386842/855_PDP_Feature_Stack_Start_your_session.jpeg)

What are our personal boundaries when brain-computer interfaces become a reality?

A new concept of the ghost in the machine.

![image](/cdn.vox-cdn.com/uploads/chorus_asset/file/25391405/IMG_0053.png)

Limitless is a new LLM-based, always-on listening device that promises to create a backup memory with empathic reminders. Not sure what to think…

![image](/cdn.vox-cdn.com/uploads/chorus_asset/file/25394750/All_Colors_Back.jpg)

What is real? Synthetic memories.

Tech societies

“Decentralizing software involves using immutable data, universal identifiers, and user-controlled keys to enable a distributed system without a central source of truth.”

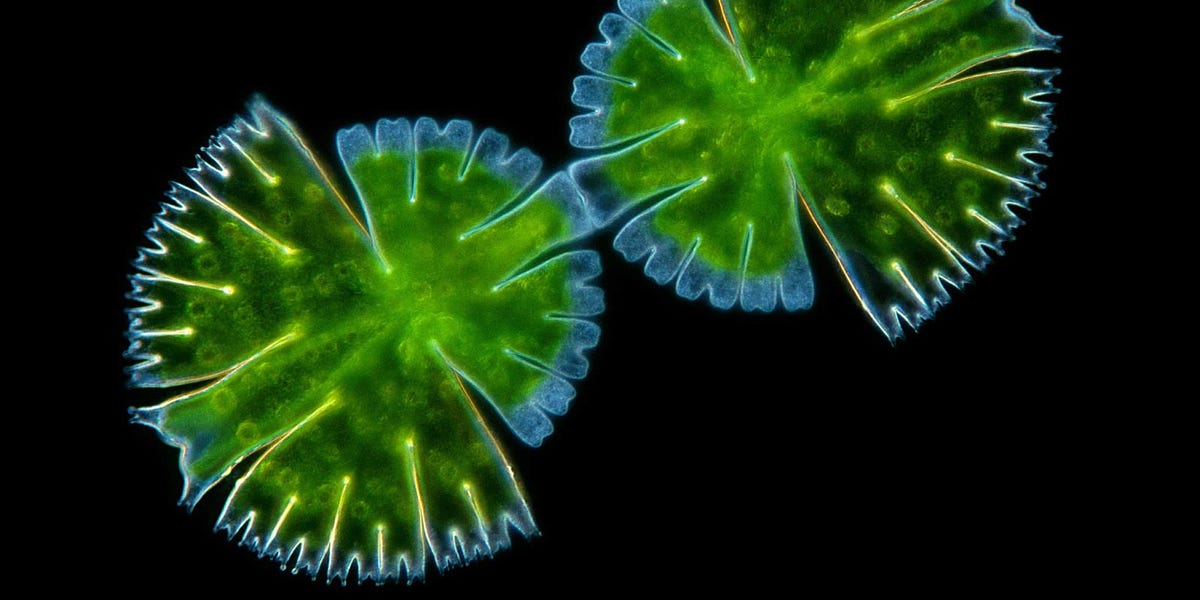

Personalised medicines and vaccines are having a moment.

Next up in regulation: the foundation models.

Trends in AI, from Stanford's annual AI Index report.

I will read Matt Jones's piece more thoroughly later. In short, it is about “aligning donut economics with planetary boundaries and a Kardashev Type I civilization to create sustainable growth and well-being. It explores how increased sustainable energy use, such as solar power, can support global growth and new capabilities without harming the environment. The concept of an "enlarged donut" envisions a future where significant energy growth leads to technological advancements, elevated living standards, and new economic models within sustainable and equitable frameworks.” (As summarized by Readwise.)

Paper for the week

Some critical exploration: The Dark Side of Language Models: Exploring the Potential of LLMs in Multimedia Disinformation Generation and Dissemination

This review paper explores the potential of LLMs to initiate the generation of multi-media disinformation, encompassing text, images, audio, and video. We begin by examining the capabilities of LLMs, highlighting their potential to create compelling, context-aware content that can be weaponized for malicious purposes.

Barman, D., Guo, Z., & Conlan, O. (2024). The Dark Side of Language Models: Exploring the Potential of LLMs in Multimedia Disinformation Generation and Dissemination. Machine Learning with Applications, 100545.

https://doi.org/10.1016/j.mlwa.2024.100545

Have a great week!

If you are in Milan for the Salone, have fun and get inspired! It is still on my list to experience once, if it fits the calendar… I will be attending Smart & Social Fest. Besides some exciting talks and workshops, I am very curious to see the first results of the four teams of Delft Industrial Design students from the Interactive Technology Design minor working on future citizenship and Wijkbots. I will be doing a pecha kucha on the Wijkbots for students of the Rotterdam University of Applied Sciences. I am also curious to hear about the new Key Enabling Methodologies agenda, which has a soft launch on Thursday.

Earlier, I had to complete a proposal for part of the ThingsCon conference, which will take place at the end of the year. We have defined a theme (generative things) and have a great idea to bring it to life.

Next week, I will attend the AMS conference, Reinventing the City. There is also an evening program on mobility as a scarce good. Metamorf is happening in Trondheim and Rotterdam.

Enjoy your week!