Weeknotes 286 - blurring as a service of tangible AI

Reflecting on AI's impact on real life, generative UIs, and lots more notions from the news on nearby and further human-AI futures

Hi, y’all! Welcome to the weekly update, which includes notions of the news (scroll down) and reflections on what happened. This week, the topic is AI and societal impacts.

Last week was packed with events. I joined part of the AMS scientific conference Reinventing the City, attending three fruitful sessions and a conference dinner with good conversations at the table. Next to that, I attended the NL AIC day (the Netherlands AI Coalition). I did not know what to expect, was curious about the crowd, and found a good mix of education, government, and industry. In the parts I attended, the general talks focused on the possibilities for AI in services and beyond and on the still open playing field, with only 4% of companies being AI-ready.

The theme was “collective, people-oriented, and empowering.” I chose to attend a couple of sessions that focused on the societal impact of AI. Bias in generative AI, and creating awareness of it, is especially significant for organizations that serve the public interest. A session on preventing food waste with AI turned out to be driven less by societal goals than by business goals. The two can go hand in hand, of course.

Triggered thought

A panel discussion on AI and society at the same NL AIC day previewed the first results, to be published this week, of research into what people-oriented AI means and into the general public's attitude towards it. The first aspect is the danger in the unknowns of AI, such as the risk of chilling effects. The second aspect is that opaque power structures can appear: who decides what works and influences the outcomes? The third aspect that the research found is about surveillance and freedom.

It is remarkable and important to note that there is a potential for a new reality in an AI-dominated society that is disconnected from the known agency structures. It can lead to distrust in the systems. It is crucial to think about the right guardrails and literacy for all to judge the systems defining our lives…

It connects to another event I joined, the Poverty Escape. This was a special ‘experience’ connected to an exhibition on poverty and art at Schiedam Stedelijk Museum. The Poverty Escape is a role-playing workshop where teams have to make budget decisions for a man who is fighting poverty. It makes you understand the stress and challenges people deal with. There are a lot of issues in the way the support system functions, and sometimes it worsens situations. I will not go into all the details here. Still, it connects to one of the projects I am involved in now: setting up a program where we try to connect support systems, domain specialists, and the power of design to proactive intelligent digital services, in such a way that these services really help and support people rather than create stigma and distrust. It is a delicate and essential topic.

From my overall impression of the NL AIC day, I am not sure where the balance lies; it depends a lot on which lens you choose to discuss empowering and people-oriented AI. The last session I attended at Reinventing the City dealt with the way the municipality of Amsterdam is handling citizen participation and AI applications: creating a framework for making AI more tangible for citizens, risk simulations, and blurring as a service. It is promising that the intentions are right. The proof of the pudding is in the eating, or maybe even before that, in the baking… (sorry for this contrived imagery).

For the subscribers or first-time readers (welcome!), thanks for joining! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and I have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things. I also organise ThingsCon. Target_is_New is what I call my practice for making sense of unpredictable futures in human-AI partnerships. That is the lens I use to capture interesting news and share a paper every week.

Another triggered thought

I was watching the Rabbit r1 live demo that was part of the handing out of the first batch of devices in a hotel in New York. The first reviews are now in (The Verge, The Shortcut, The Atlantic), and more are to be expected in the coming week.

Apart from the question of whether it lives up to the promises, I think some interesting concepts were presented. The built-in teaching mode, for instance, and the ideas about AI safety and building some kind of scrutiny system (which is something other than the privacy architecture that critics are questioning in this first release) were interesting.

The new version of the promised LAM (Large Action Model), 1.5, has the mantra to learn physically and act digitally, so it brings the experience of use in the physical world back into the models that drive the digital behavior. That is what you would expect, indeed, but it is about execution in the end.

A new concept is also popping up: generative UI, a UI that adapts to the user and the specific moment of use. Rabbit is hinting at an AI-native desktop they want to provide (as a service?). We saw that concept earlier in the UI that oio-studio proposed for Google's Gemini. I am especially curious about the way Apple will integrate AI in its new OS. It makes a lot of sense that it can leverage this, just like it introduced an adaptive UI for the phone back in 2007 with the app store…

Notions from the news

There has been a lot of attention on the official decision to ban TikTok in the US unless it is sold within nine months, just past the elections. What it will mean for the voting is unclear. The tech media agrees that it cannot wait that long, and there will be legal appeals by the company. People cannot believe it will disappear. The app's growth has been enormous over the last few years, with 170 million users in the States. Either way, it will influence the debate.

Threads has overtaken TwitterX now in MAUs. At least, that is what Meta is claiming. And Ben Thompson thinks Meta is super strong, in the long run at least.

In modern times, FT and OpenAI are partnering to share content and capabilities.

Human-machine partnerships (aka Human-AI)

Small, locally run AI language models have been an imagined thing for some time (it was part of the envisioned co-performing co-pilot multicore DLM concept for the STRCTRL-platform I was working on last summer). It is becoming a thing with Microsoft’s Phi-3 concept, and we all are expecting some Apple variant at WWDC…

People think the next phase of AI focuses on practicality, coexistence with humans, and its impact on daily life.

As expected (I thought this was already the case), the Meta Ray-Ban is an AI device.

Relevant in these times: will human soldiers ever trust their robot comrades?

A new category for testing tools.

Maybe it is not always about partnerships…

Some AI tools for this week: updated GitHub Copilot capabilities, a DeepL writing assistant, and deepfake avatars from Synthesia.

Medicines are engineered with AI, and now vegan cheese is, too.

Fear and loathing of AI, and why there are other perspectives.

Robotic performances

This is definitely the most direct execution of robotic performances: the new soccer-playing robots of DeepMind. The interesting part is how they learned much more quickly on their own than when they were trained by their human trainers. And it is maybe even more important to learn to fall well…

Right: “The BMI serves as the "information highway" for the brain, facilitating communication with external devices and providing cutting-edge technologies in human-machine interaction and hybrid intelligence”

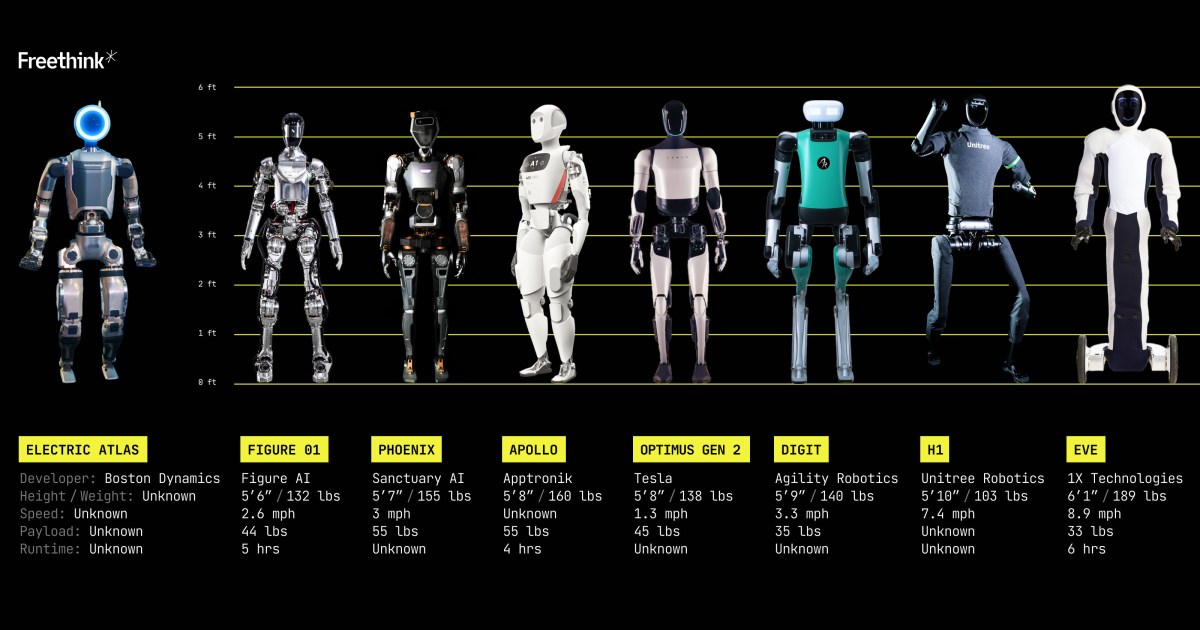

Another overview of the near-present humanoids.

Are 29 fatal accidents over 5 years with autopilot a lot?

Immersive connectedness

The Holotile infinite floor will bring us a step closer to a Ready Player One world, I presume.

I dare say that many of these Best of Milan Design Week picks are physicalities derived from virtual realities.

Enforced security for connected homes.

Tech societies

This sounds like good news, or at least a step in a good direction.

Not-so-good news: new geo-power battles. Not only taming SV is needed…

I think we have seen this trend before, but being able to choose to switch on and off from hyper-connectedness makes sense.

Sign of the times? The safety and security board for AI is largely made up of industry leaders.

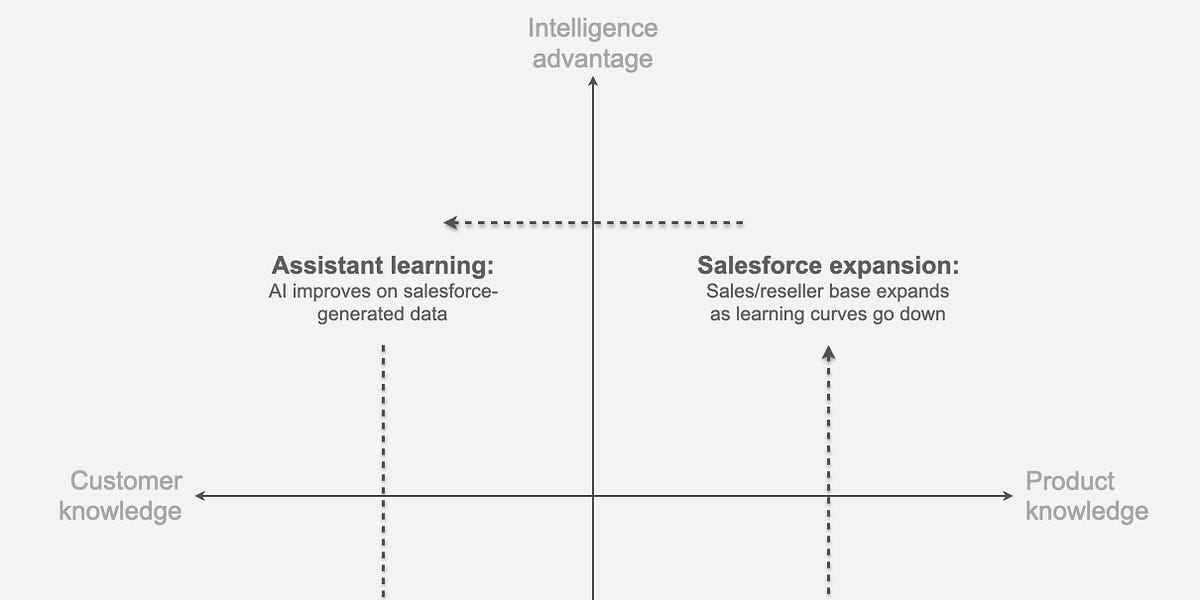

Platforms through the AI lens

To read on, later…

Paper for this week

“This article presents a design investigation of how to address social justice concerns in participatory activities when debating energy futures. As the climate crisis grows and technological progress exacerbates environmental issues, the design field is increasingly committed to understanding and mitigating the impact of new products in the world.”

Antognini, R., & Lupetti, M. L. (2023). Debating (In)Justice of Energy Futures Through Design. Diid — Disegno Industriale Industrial Design, (81), 14. https://doi.org/10.30682/diid8123g

Have a great week!

After a week packed with events, this coming week is dedicated to writing proposals, updating websites, and finishing reports. Probably with too little time in the end, after all… 🙂

Waag organizes the State of the Internet this Thursday in Amsterdam, a yearly lecture and discussion. Also, The Hmm is organizing a new program in the coming weeks if you are into crafting perfect feeds in different cities.

See you next week!