Weeknotes 287 - a new layer of digital life twinning

Are LLMs another twinning layer of our digital life? And the clash of politics vs democratizing as resilient tech strategies. And more notions from the news on human-AI, robotic performances etc.

Hi y’all! Welcome to my new subscribers and readers! Below is some background on this newsletter, but let me dive right in now.

I was planning to reflect on the event I attended last Thursday: the yearly (since 2019) “de staat van het internet” (State of the Internet), organized by Waag. But then something else popped up; see below. I don’t want to let it pass completely, though. This year’s edition was different from last year’s, which was deeper and more thoughtful with James Bridle. This year, it was much more “applied,” with European politician Kim van Sparrentak sharing her ideas and actions on digital and AI. To be honest, the talk was a bit bleak and predictable, which is maybe what you would expect from a complex political process. I respect her efforts, but it mostly confirmed how hard it is to change things and create a real impact.

The panel afterward was, however, very nice and underlined the feelings that arose: are we really fighting polarization with more politics, or do we need another angle? I wrote down for myself that we need to focus on democracy more than politics. More concretely, it is all about social fabrics, education, building trust in the system, and resilience. Use people’s more fundamental beliefs to build a fairer debate and constructive policymaking. Hopefully, that will result in more reasonable politics, too, respecting fundamental rights above popular opinions.

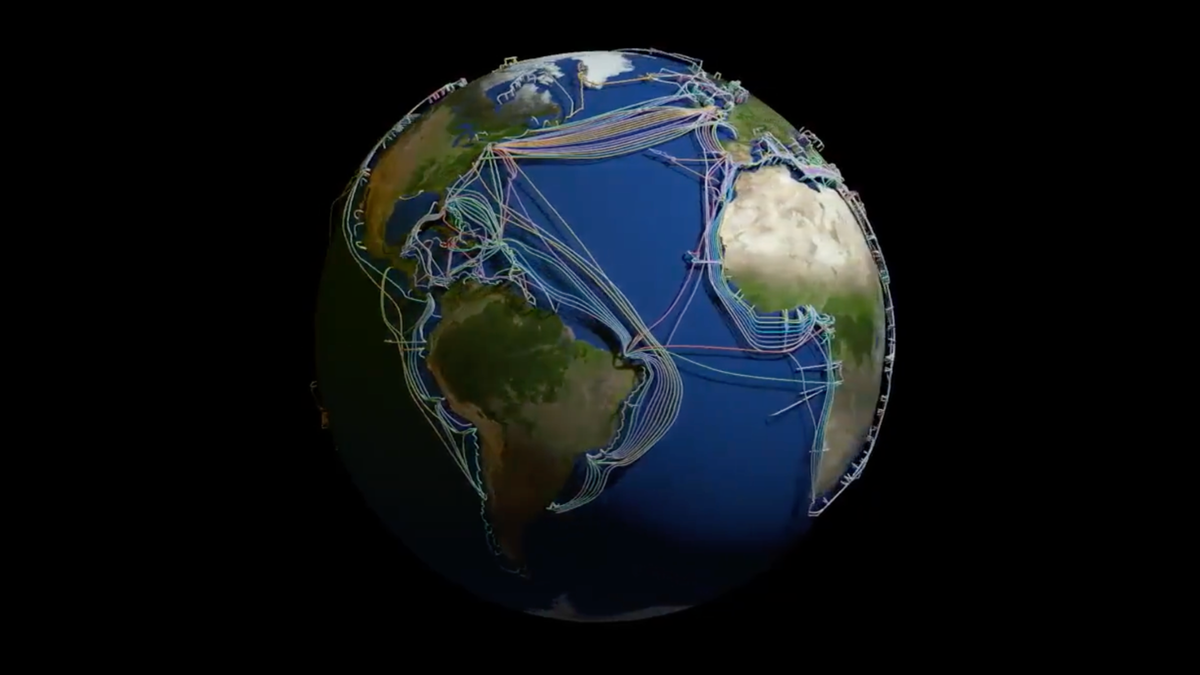

Some examples made it clear that there are possibilities to use design for change, like creating platforms to find consensus, as in Taiwan, not focusing on opinions. I was happy with the remark from the audience stressing that we are talking too much about digital as a result of real life, as a separate reality that can be regulated separately, while it is now the other way around; digital is real life and should be governed similarly. And that is a segue into the triggered thought, after all…

Triggered thought - LLM is a ‘digital twin’ for our digital lives

Benedict Evans has a column in his newsletter (sub) on the role and impact of LLMs on search behavior and the way search engines will change face with fewer and fewer links. True, but one phrase triggered a different thought: how search engines create a layer on top of the open internet. That is not news; we see much of our reality through the lens of the search engines we use (mainly Google) and partly the social networks. The adage of the proprietary internet has been a common notion for a decade at least.

But we are now digital creatures because most of our emotional and social life is happening via all kinds of digital tools. The change in the role and presentation of search, influenced by LLMs and other forms of AI-enhanced search, is not only a change in search itself but might be the addition of a new layer. The way we interact with the LLM intelligence to enhance our own thinking with inspiration, advice, and reflections might tap into a different digital presence, a new type of digital consciousness. We might, in that sense, start treating the LLM-enhanced tools as partners, even more than the ‘old’ search engines, which are more like tools to us.

In another opinion piece on AGI, Evans explores the promise of AGI and our relationship to it. For me, this is a related topic that impacts the way we treat things as citizens.

If we start by defining AGI as something that is in effect a new life form, equal to people in ‘every’ way (barring some sense of physical form), even down to concepts like ‘awareness’, emotions and rights, and then presume that given access to more compute it would be far more intelligent (and that there even is a lot more spare compute available on earth), and presume that it could immediately break out of any controls, then that sounds dangerous, but really, you’ve just begged the question.

(A form of) AGI might be there already as soon as the AIs are able to answer all questions by combining multiple sources. Perplexity is already on that path. Combining that with some interaction with humans to improve the results, we will see a form of AGI that is not autonomous but in constant conversation with humans, has a value reference, and is not overconfident or bragging like the current generative AI tools tend to be.

The embodiment of AI is an important step towards a more AGI-like feel. AGI is often framed as a danger to human life, as in taking over more jobs and maybe starting to influence our own views and behavior in such a way that we are losing our own agency. These are possible dangers that we can overcome through education, by prebunking systems, and by making people more aware and literate. And so it connects back to the conclusion of the State of the Internet…

For the subscribers or first-time readers (welcome!), thanks for joining! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things. And organising ThingsCon. I call Target_is_New my practice for making sense of unpredictable futures in human-AI partnerships. That is the lens I use to capture interesting news and share a paper every week.

Notions from the news

Last week, the first impressions of the Rabbit R1 were not positive; the full reviews are even worse (like this and this). It is an unfinished product. The opinions differ on whether it would be a useful, promising AI device or the wrong concept. I am still curious to experience it, but I do not know if it will be delivered soon… There are some rumors, though, or better said, some investigations into the CEO's business history that might create some turmoil. It is fresh and a bit unclear what the intentions are. A pivoting company, just a scam, or anything in between. I checked the Discord channel for any discussion on this, but have found none so far.

Human-AI partnerships

The battle of the LLMs is continuing. Microsoft is now creating its own model, MAI-1, next to its deep investments in OpenAI.

BCIs (brain-computer interfaces) are as intriguing as they are scary, especially when initiated by a certain regime type. It would be as scary if they were controlled by Google or Amazon, of course.

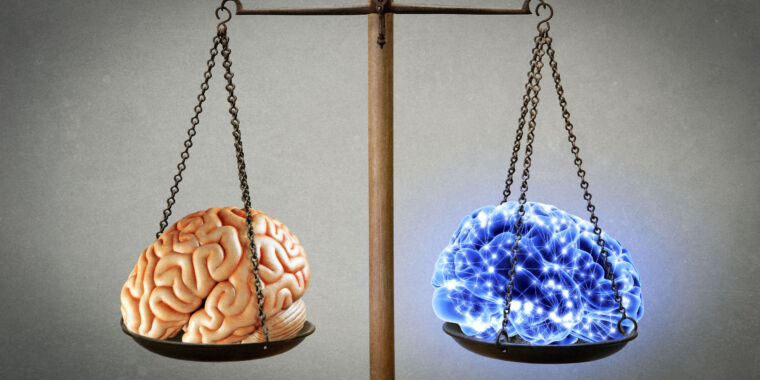

“Better moral judgment than a human” raises the question: What would happen if all judgments were validated through an AI, and is that judgment still human?

This feels like a different step in human-AI partnerships. Taking over repetitive, boring tasks was the promise of robots, and automation already delivered on that; this is a new level of creating distance.

Is spatial intelligence another term for multimodal embodied AI?

Was business/enterprise application not always part of the LLM promise?

Simulation is also a form of business mapping.

Waag & NL AIC officially published new research this week (discussed it last week).

New dilemmas: If AI and LLMs can create a form of communication with space and alien civilizations, do we dare delegate those conversations to our AI friends?

Teens are building friendships with AI chatbots. “Her” revisited?

A look into the next generation of LLMs

I am a bit reluctant to compare AI and religion. “It invites readers to consider not just what AI can do but also what it means for our understanding of consciousness and the human experience in the context of rapid technological change.” I leave it to you to draw conclusions.

We will learn from co-piloting, first with coding.

This can be a social game for family gatherings.

Robotic performances

So we get standards for exoskeletons… And exosuits.

Robotic performances might become more intelligent.

Robots will keep struggling to outperform animals.

Immersive connectedness

Low-power networks and sensors are needed to make the business case for high-volume sensing in agriculture. This is an interesting new step.

I have not shared much here lately on quantum developments, but apparently, there have been some valuable breakthroughs.

Tech societies

The impact of AI was sometimes feared a lot. Did he change his mind?

Another round of critical remarks on the intentions of Sam Altman.

The future, also for our next nearby elections?

Should we aim for one dominant player?

Synthetic presences are now fashionable.

The future is bright? These artist's impressions suggest a heavy, crowded environment.

Paper for the week

Ramping up to the PhD defense of Kars on contestable AI, a related paper:

The Right to Contestation: Towards Repairing Our Interactions with Algorithmic Decision Systems

As algorithmic systems continue to make more decisions about our lives and futures, we need to look for new ways to contest their outcomes and repair potentially broken systems. Through looking at examples of contemporary repair and contestation and tracing the history of electronics repair from discrete components into the decentralized systems of today, we look at how the shared values of repair and contestation help surface ways to approach contestation using tactics of the Right to Repair movement and the instincts of the Fixer.

Collins, R., Redström, J., & Rozendaal, M. (2024). The right to contestation: Towards repairing our interactions with algorithmic decision systems. International Journal of Design, 18(1), 95-106. https://doi.org/10.57698/v18i1.06

Looking forward

It might be a short week for you, or maybe you are still in vacation mode. My week will be filled with planning more proposals for Wijkbot and Generative Things and updating my digital presence (aka website). I do not have events on the list, but I will check out the PhD defense of Willem on Positive AI this Wednesday and the Apple keynote on new iPads (probably) this evening. If you are in London next week, you might want to check out a new edition of Interesting (15 May).

Enjoy your week!