Weeknotes 296 - Beauty contest for your AI relationship

Will LLM social characteristics be more important than knowledge level? This and other notions from the news on Human-AI partnerships and tech societies.

Hi, y’all!

If you noticed that this newsletter was sent a bit later than usual, you are right. I had an extended weekend in Bruges and on the Belgian coast to visit two art triennials (the one in Bruges and, along the coast, Beaufort). The quality of the work was very good, and some pieces were very nice. It is not related to anything human-AI, so if you are interested, visit my Instagram for some impressions. It did limit my time for collecting the notions from the news, which is usually part of the weekend and the Monday. Let me at least share a triggered thought and quickly look back at last week.

Last week was a notable week here in the Netherlands, and not in a positive sense. The new government started with a tumultuous kick-off that confirmed all worries. Coming right after Keti Koti, the celebration of the end of slavery, it stung even more, and it feels like the drama of a political play you could not write. Too bad it is for real… I am not doing a political analysis here; I can only hope it will not last long, and that the UK and France inspire the progressive and left movement to develop a sensible alternative.

In other news, the Civic Interaction Design research group completed the Charging the Commons research project with an insightful event presenting the research and tools for Be-commoning. Find all the details on their website. The day before, I attended the presentations of student work from the Amsterdam UAS Master Digital Design and from Communication and Multimedia Design, along with work by research groups. Good stuff.

Triggered thought

Two posts discuss the quality of the new Claude 3.5 Sonnet model, especially its tuned interaction.

In his podcast episode “Switched to Claude 3.5”, Nathan Lambert shares why he loves the new model's interface. He compares it with OpenAI.

Beyond the base metrics and throughput, Anthropic’s models consistently feel like the one with the strongest personality, and it happens to be a personality that I like. This type of style is likely due to focused and effective fine-tuning. The sort of thing where everyone on the team is in strong agreement with what the model should sound like.

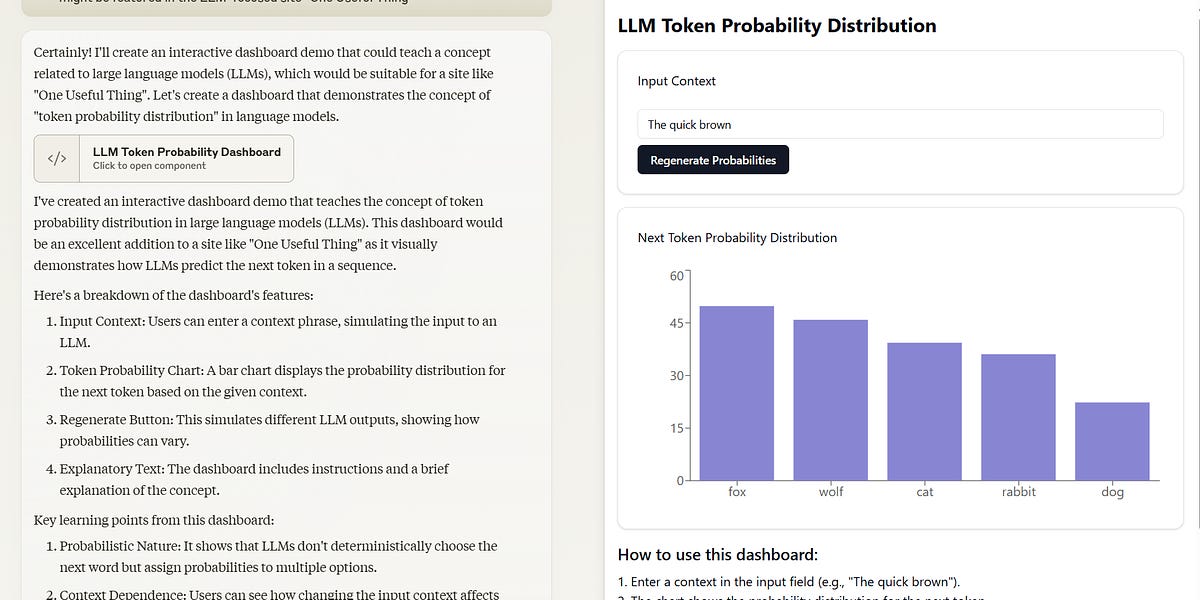

Earlier, a post on VentureBeat discussed what they think is the most important AI feature now: Artifacts.

To understand why Artifacts matters, we need to look beyond the raw capabilities of large language models and consider the broader picture of AI integration in the workplace. The true challenge isn’t just creating smarter AI; it’s making that intelligence accessible, intuitive, and seamlessly woven into existing workflows

This could be seen as a way of unlocking co-performance with the AI, which is more than just having a chat conversation with the model: the conversation becomes a way of really getting inside the work, beyond being just an interface.

The importance of new interfaces and interactions with AI for the impact on our digital life and beyond is clear and has been stated repeatedly. The ChatGPT moment is not called the ChatGPT moment for nothing: its power lay in making the capabilities accessible through conversation and in duplex learning, where the user is learning how to prompt the AI and, at the same time, the AI is adapting to and learning from new input by the human player, as in Reinforcement Learning from Human Feedback (RLHF).

What struck me in Nathan's considerations is how he evaluates the two models not on their intelligence and capabilities but on their character, on what it feels like to interact with the model. This feels like a step further in the maturing of these AIs (or better, LLMs) as part of our daily life. This nice observation by @bashford brings back memories of how the Internet was treated back in the mid-to-late 90s.

For the subscribers or first-time readers (welcome!), thanks for joining! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and I have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things, and I organise ThingsCon. I call Target_is_New my practice for making sense of unpredictable futures in human-AI partnerships. That is the lens I use to capture interesting news and share a paper every week.

Notions from the news

This week, I used a slightly different form of captured news due to time constraints. I did not scan the news messages but based my selection on the headings and summaries of Reader. So, the items are curated but not contemplated.

Human-AI partnerships

Ray Kurzweil is updating his predictions for the singularity. Apple Intelligence might arrive somewhat later. Figma is bumping its AI head. Can AI reach PhD level? Perplexity is adding a pro feature for more intelligence. Or wittiness? OpenAI was opening up your data. AI WhatsApp avatars feel like an underwhelming use of the technology. Curious to read Ethan’s stance on AI thresholds. AI as an art form. That is different from just enhancing the footage on YouTube. Coding capabilities by ChatGPT.

Robotic performances

Giant robots were always a fear. Not in Japan. More robots in June. DJI in e-bikes, wonder if these behave like drones… Alphabet is retreating from agri robotics. Tactile robots. What can we conclude when police are pulling over robo cars? The end of Astro (for business). Is this for real, and if so, is it just shorting our human-robot partnership? Do robot friends solve loneliness? Let robots learn by listening.

Immersive connectedness

LG continues its endeavor in smart appliances. Some things don’t change: a new device trend is countered with a cheaper Chinese version. And new smart gadgets. That other Teenage Engineering device is doing well.

Tech societies

A new reality for GPS. Net-energy futures with sun power. To check: a privacy-focused Google Docs alternative by Proton. Kids are the source for marketing data about parents. The new tech smuggling is AI hardware. Who trusted the AI tools to crack AI thefts? Let’s hope we can reduce the footprint as we did with energy-efficient light bulbs. Dark Fantasy TikToks, AI TikTok Vines, and Generative Memes.

Paper for the week

Terms of entanglement: a posthumanist reading of Terms of Service

In this paper, we use ToS as an entrance point to explore design practices for democratic data governance. Drawing on posthuman perspectives, we make three posthuman design moves exploring entanglements, decentering, and co-performance in relation to Terms of Service. Through these explorations we begin to sketch a space for design to engage with democratic data governance through a practice of what we call revealing design that is aimed at meaningfully making visible these complex networked relations in actionable ways.

Özçetin, S., & Wiltse, H. (2023). Terms of entanglement: a posthumanist reading of Terms of Service. Human–Computer Interaction, 1–24. https://doi.org/10.1080/07370024.2023.2281928

Looking forward

So, it's good to have managed with only half a day of delay for the newsletter. This week, I have no events planned to attend. That is needed, as there are lots of things to do: planning ahead for events in September and October (Society 5.0 and two ThingsCon Salons, one in early September and one at Dutch Design Week) and in December (TH/NGS 2024), new research activities, and proposals looking ahead to 2025…

Next week I will be at EASST-4S as part of a panel on the Kit economy, discussing learnings from the Wijkbot. I noted that RoboCup is in Eindhoven, but I am not sure if I can make it. Amsterdam UX is visiting IKEA; I’m on the waiting list.

Oh, and I hardly ever buy gadgets for gadgets' sake, but I was curious about the Rabbit when it was announced. It has been delivered, so I will test the negative reviews first-hand in the coming weeks.

Enjoy your week!