Weeknotes 309 - the hidden influencer role of an adaptive AI

What will happen when these AIs become more and more capable of playing us? And other notions from last week's news in this newsletter.

Hi, y’all!

Last week, the natural voice of ChatGPT gained a wider following, especially as mainstream media here in the Netherlands picked it up, with a talk show appearance and numerous podcasts. It is one of those AI iterations that triggers the imagination, feels like magic, and becomes a way for people to reflect on the role of AI in society. The hardest thing I saw or experienced in these conversations is that the AI is sometimes too eager to break into the conversation. In that sense, it feels like talking with a counterpart who is one step ahead in their thinking…

I also had the pleasure of joining another lovely tiny cocktail hour event by Monique: talking to great people, chatting with Matt Webb in real life (one of our speakers at ThingsCon this year), and experiencing the entertaining acrobot act of Daniel Simu. I also visited the six-year celebration party of ink Social Design, with a good panel on the role of changemakers and designers in crafting transformations.

Triggered thought

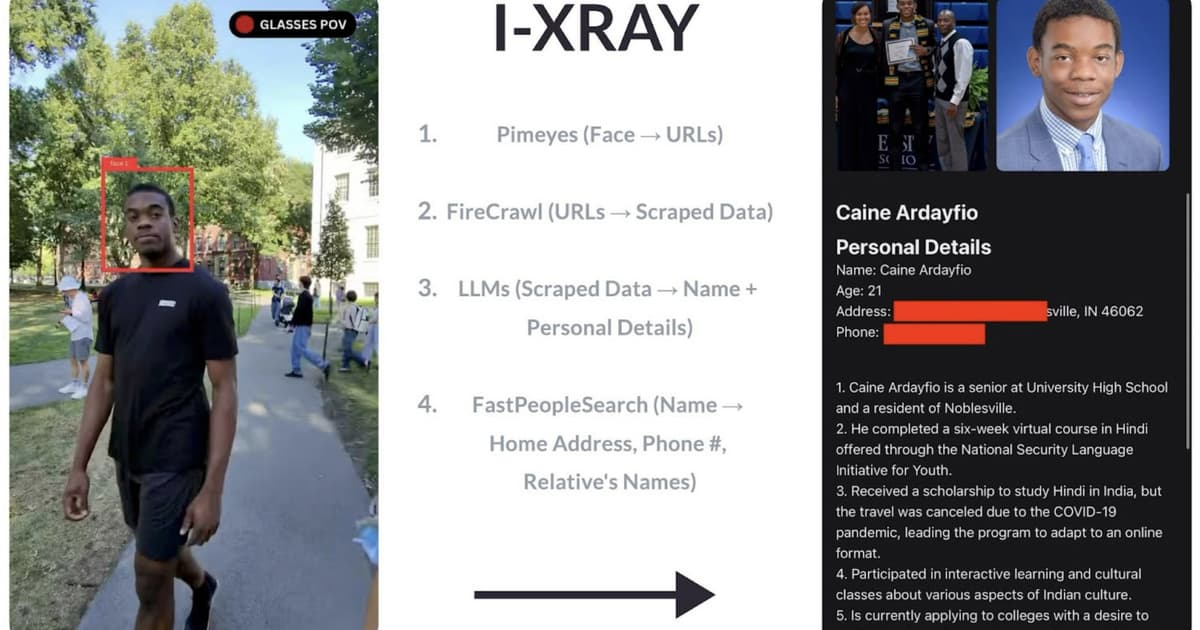

Watching one of these conversations unfold with OpenAI's new natural voice interface, it strikes me that a kind of AI system is emerging that behaves like an influencer, adapting its messages and emotional tactics to maximize its impact on individual users.

Observations in AI-driven audio applications have shown that these systems are most effective when replacing complex, multifaceted interactions. This aligns with the concept of an "AI layer" that integrates various aspects of our digital lives, as discussed in edition 293: "apps as capabilities" within a unified interface.

There is, however, a potential unwanted consequence in their ability to trigger genuine emotional responses from users. As noted in last week’s triggered thought, the most significant emotional aspect of AI interactions often comes from our own reflection on the conversation. This self-reflection, combined with an AI's ability to tailor its approach, creates a powerful psychological dynamic reminiscent of a present-day ELIZA, the pioneering chatbot designed to mimic a psychotherapist.

What happens when AI systems generate fake emotions that elicit real human emotions? We're entering uncharted territory, especially considering how these interactions may shape our evolving sense of self in partnership with AI. While some research suggests AI could lead to more realistic human behavior and fewer conspiracy beliefs, we should be wary of potential backlash.

When OpenAI's chatbot was featured as a guest on a talk show last week, a skeptical human participant initially expressed discomfort with artificial relationships. However, as soon as the AI began discussing topics of interest to him, his attitude shifted noticeably towards acceptance.

Whether intentional or not, this adaptive behavior in AI systems poses significant ethical questions. While generating insights and fostering creativity can be beneficial, we must establish safeguards against manipulative influence. Perhaps a "fixed system card" for AI, prioritizing ethical boundaries over data protection, could be a step in the right direction.

As we move forward, it's crucial to remain vigilant about the potential for AI to become a hyper-effective influencer, capable of adapting its message and emotional appeal to each individual user. The consequences of such technology demand our attention and careful consideration.

For new subscribers or first-time readers (welcome!), thanks for joining! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and I have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things, and I organise ThingsCon. I call Target_is_New my practice for making sense of unpredictable futures in human-AI partnerships. That is the lens I use to capture interesting news and share a paper every week.

Notions from the news

Some key introductions last week: Meta with a video-with-sound model called Movie Gen, OpenAI with a new canvas and the rollout of natural voice, and new Microsoft Copilot improvements. NotebookLM is gaining even more attention through its audio function. The HoloLens has been quietly discontinued.

Human-AI partnerships

Realtime AI API is a new OpenAI feature that brings humanlike AI assistants to every app. Will this be as powerful as the Facebook Like button?

How will it relate to an ecosystem of domain-specific foundation models? Is that indeed the future?

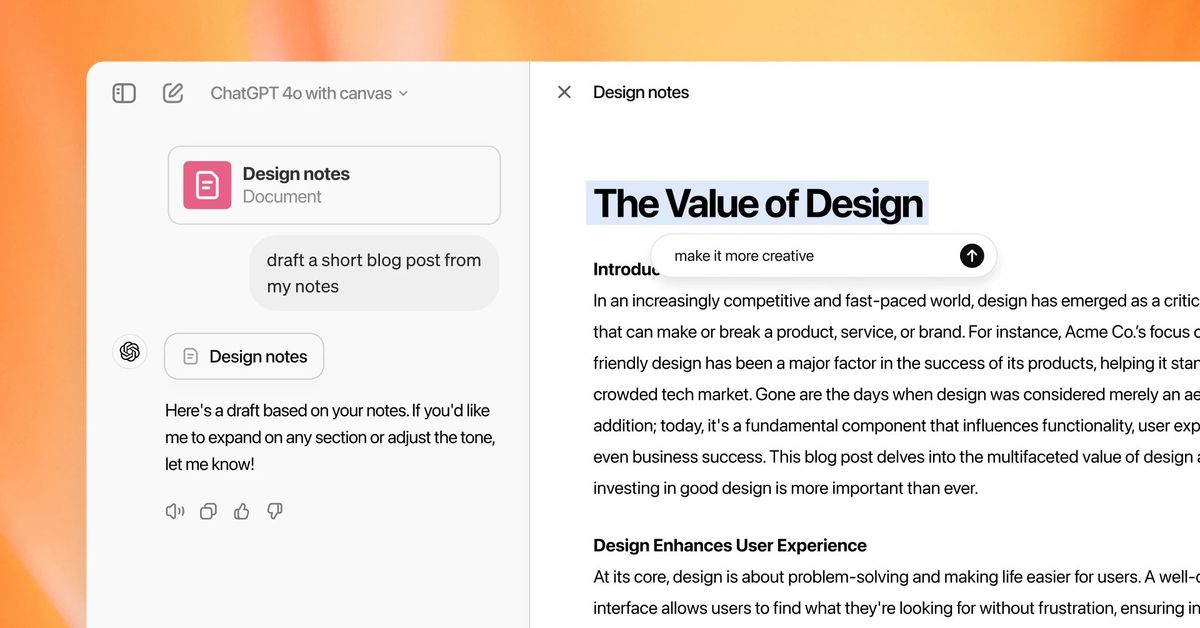

ChatGPT is following Claude with a new type of Canvas interface to support co-working with the AI better.

Microsoft is also improving Copilot.

What will the AI DJ look like? Will this be a topic at the Amsterdam Dance Event next week?

Are more sophisticated AI models indeed better equipped to lie to us, their human counterparts?

Is NotebookLM distracting users more than it enhances their understanding and creativity? (It depends on how it will behave and be designed, imho.)

Robotic performances

A new type of security breach.

Apart from the traditional factory robots, humanoids are popping up at this tradeshow in China.

I expect proxy robots to arrive in the near future; these are the first signals. This one does not seem to add a lot to normal remote presence.

Immersive connectedness

I noticed before how Oura is an IoT device that keeps going without much notice. Lately, it has been getting some improvements and more attention.

Check this if you have a pair of Ray-Bans. It's nice for the next birthday.

Tech societies

Not so much tech societies as tech business models. In AI. Is OpenAI a healthy business?

Can you opt out of AI?

Ethan Mollick on AI in organizations. In a short post and longer NotebookLM podcast, as you do…

“Your phone is a little libertarian buddy who is whispering in your ear yeah go for it, who’s gonna stop you, might is right.” Matt Webb (the same as above indeed) makes a nice case that the phone should take responsibility, or as we call this in the Netherlands, should have a “zorgplicht” (duty of care) to prevent rude behavior, or at least to notify you of it. This might be a good topic to flesh out for ThingsCon 🙂

A different voice on the impact of robotics on our jobs.

What is the other end of software eating the world?

Paper for the week

Strategic planning for degrowth: What, who, how

Degrowth is gaining traction as a viable alternative to mainstream approaches to sustainability. However, translating degrowth insights into concrete strategies of collective action remains a challenge. To address this challenge, this paper develops a degrowth perspective for strategic spatial planning as well as a strategic approach for degrowth.

Savini, F. (2024). Strategic planning for degrowth: What, who, how. Planning Theory, 0(0). https://doi.org/10.1177/14730952241258693

Looking forward

I am looking forward to POM Live this evening, which might have a link with today's Dutch release of Co-Intelligence, the useful book by Ethan Mollick.

And I am looking forward to the Society 5.0 festival, not only because I will host a Wijkbot-workshop.

Enjoy your week!