Weeknotes 316 - shifting perceptions of smartness

What is the difference between smart and intelligent objects? Is this the same as ten years ago? Some thoughts, and notions from the news from last week.

Hi, y’all!

Yesterday, Monday, about 20,000 people demonstrated against the plans to dismantle education and research in the Netherlands. I could not attend, but I endorse and support this, of course.

Welcome to this week's notes, which are slightly different from the usual. Due to a busy schedule, they're being published a day later than usual. Despite the delay, time remains tight, so I will also keep them a bit shorter. While I haven't had much time for deep reflection, I did participate in a session with students from the "Minor Interacting Environments" program at TU Delft. This experience provided some thoughts to share. Before diving into that, a brief overview of the week ahead: the main focus remains research into civic protocol economies. The ThingsCon preparations are also consuming significant time and energy, with the event just 2.5 weeks away. Please check out the program and consider purchasing a ticket to join us. I'm also working on the Wijkbot project methodology, which needs to be completed by year-end. So, busy as always plus a bit extra, but filled with exciting projects.

Triggered Thought

Now, let's delve into some thoughts sparked by the student session. The topic revolves around our relationship with new, intelligent, autonomous, and generative objects or entities. The minor program focuses on designing and conceptualizing interactive environments, exploring their impact on people's lives.

One of the commissioners for student assignments is Schiphol Airport in Amsterdam. They're interested in exploring new functions for the airport during periods of reduced flight activity, envisioning it as a space that combines work and shopping in innovative ways. During the session I attended on Tuesday, we discussed smart objects. Students had prepared an exercise inviting participants to take positions on various aspects of smart objects, such as whether they make us smarter or dumber, and what defines a smart object.

This exercise prompted me to revisit my own definitions and research on predictive relations from 2018-2019. At that time, I made a clear distinction between smart objects, intelligent objects, and things with agency:

- Smart Objects: These are adaptive and responsive to their environment but follow pre-programmed rules. Examples include vacuum robots and programmable thermostats.

- Intelligent Objects: These are capable of creating their own strategies by combining different types of information and insights. They don't have agency or consciousness but can adapt more flexibly than smart objects.

- Things with Agency: These not only have intelligence but also make their own decisions and valuations. They learn from their actions and can even initiate interactions with humans.

What struck me during the student session was how their definition of smart objects aligned more closely with what I previously categorized as "things with agency." This shift in perception suggests an evolution in how we conceptualize smart technology.

The students' view of smart objects as having agency and intelligence reflects a changing understanding of what "smart" means in our society. It raises questions about the future roles of these objects in our lives and how we'll interact with them. This evolution in perception also prompts us to consider what the next level of intelligence or agency might look like beyond what we now consider "smart." Will it be something even more human-like, or will it develop in entirely new directions? These are fascinating questions to ponder as we continue to explore the rapidly evolving landscape of smart and intelligent technologies.

For new subscribers or first-time readers (welcome!), thanks for joining! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and I have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things. I also organise ThingsCon. Target_is_New is what I call my practice for making sense of unpredictable futures in human-AI partnerships. That is the lens I use to capture interesting news and share a paper every week.

Notions from the news

So is the real value of AI general intelligence? You can guess the answer.

It's always good to check the presentations of Benedict Evans. There is a lot of data in this one but, as always, good insights too.

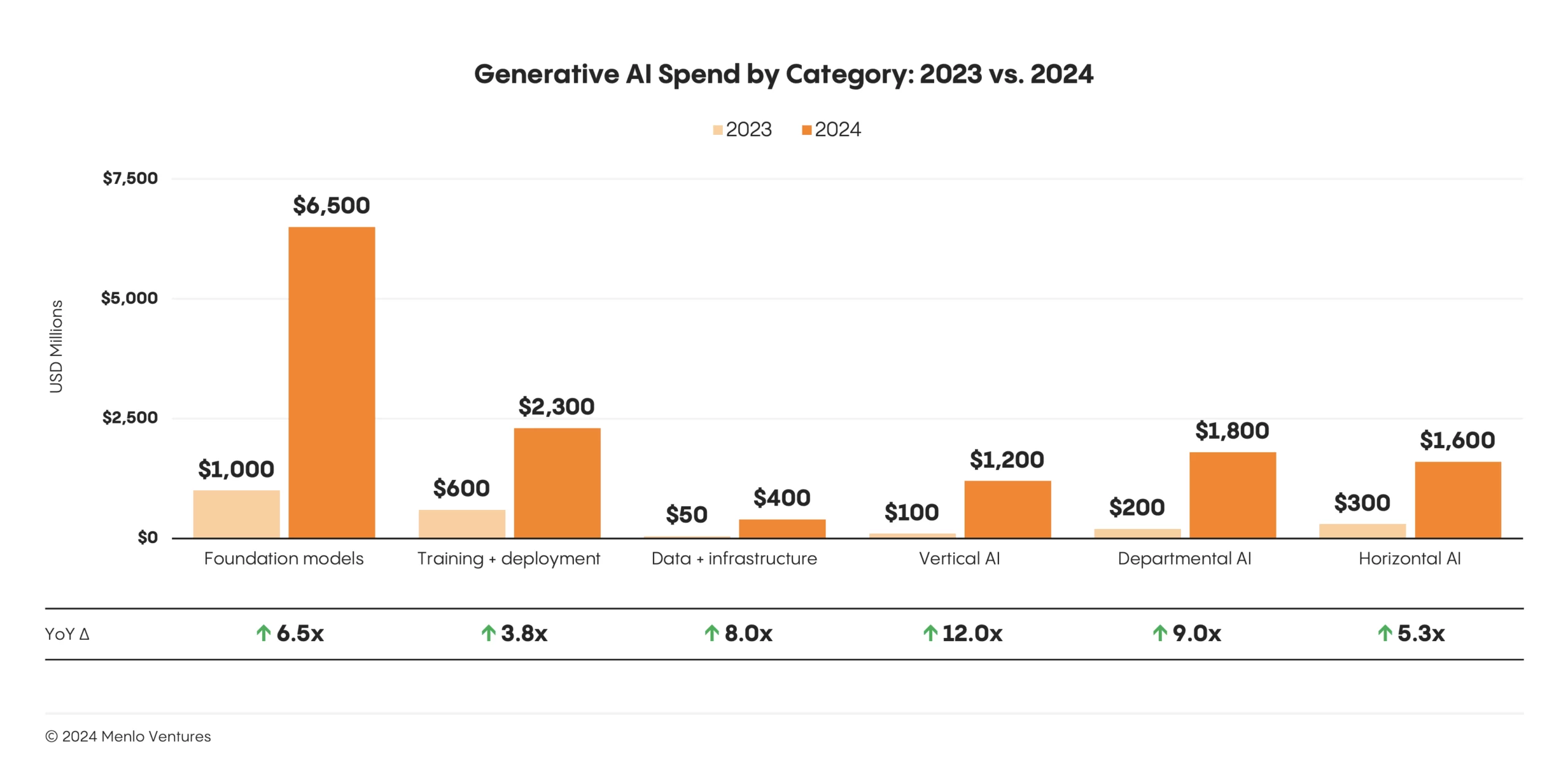

And the state of AI in the enterprise, more data.

Human-AI partnerships

This is another interesting Hard Fork podcast, specifically the discussion on medical AI. The researcher compared results: doctors using GPT do worse than GPT alone, because they will not take the advice from GPT. (Research paper)

The same goes for a robot photographer, apparently.

OpenAI goes Swarm AIs

And what are AI agents? IEEE is defining.

Gemini is extending the span of control of apps.

Will this influence my workflow? Claude can match your writing style.

Not sure if there are still people that just start prompting. Ethan Mollick is advising.

Robotic performances

New forms of sport for robots: endurance.

Or being small and autonomous inside our body.

The Robot Hub as economic area becomes a thing.

Immersive connectedness

Foreshadowing a smart home device from Apple connecting different objects.

New boards: they seem cheap and capable.

Tech societies

Who is weaponizing AI-generated content for politics? Are you surprised?

I am not sure how much this qualifies as AI, but if it does, it for sure qualifies as sneaky AI…

I am not giving developments in social tools that much attention here, but a lot is happening with the shift from X to BlueSky (yes, I have been there too for some time), and maybe that is influencing Threads to become less algo-filtered.

Foursquare: the end of an era.

Paper for the week

With recent developments in deep learning, the ubiquity of microphones and the rise in online services via personal devices, acoustic side-channel attacks present a greater threat to keyboards than ever. This paper presents a practical implementation of a state-of-the-art deep learning model in order to classify laptop keystrokes, using a smartphone integrated microphone. When trained on keystrokes recorded by a nearby phone, the classifier achieved an accuracy of 95%, the highest accuracy seen without the use of a language model.

Harrison, J., Toreini, E., & Mehrnezhad, M. (2023, July). A practical deep learning-based acoustic side-channel attack on keyboards. In 2023 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW) (pp. 270-280). IEEE.

Looking forward

This week I am limiting myself to working on the research project and the preparations for ThingsCon.

Enjoy your week!