Weeknotes 317 - designing for understandable predictive intelligence

What will AI do for automated systems in the real world? Are we already expecting algorithms to be AI? Agentic AI and more.

Hi, y’all!

It's been a busy week as we approach the end of the year. As I have mentioned (many times) before, these weeks are particularly hectic because of the upcoming ThingsCon event, happening in a little more than a week. I'm looking forward to this edition because we have a great program with many interesting sessions, inspiring keynotes, and stellar installations on exhibit.

This includes the special exhibition showcasing prototypes of future things that could be enhanced or enchanted with generative capabilities. We're excited to see the nicest designs and hope to have a good collection of prototypes to instigate interesting discussions and answer questions. I won’t bother you with other aspects, like balancing a budget around break-even ;-)

Aside from the event, other projects need to be finished by the end of the year: an exploratory research project at the Civic IxD group, and an extra iteration on the knowledge product for designing hoodbots as a means to give citizens a voice about technology in their neighborhood and beyond.

Triggered thought

This week's "triggered thought" comes from a personal experience: a conversation I had about my parking situation. In Amsterdam, we have a sophisticated automated parking garage built almost 20 years ago. You drive your car onto a pallet, get out, and then use your badge to have the system move your car underground.

Last weekend, I had called for my car in advance using the software service, but someone else arrived at the garage and used their transponder to collect their car directly, without the service. This made me think about how AI could influence such systems in mundane situations.

For example, a more intelligent system could recognize that someone is calling for their car in person and prioritize it, for instance because that car is closer to the exit, even if someone else placed their call first.
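To make such a rule concrete, here is a minimal Python sketch of a retrieval queue that puts people waiting in person ahead of remote callers, then prefers cheaper pallet moves, and only then falls back to first come, first served. Everything in it (the `RetrievalRequest` fields, the `pallet_distance` cost, the ordering itself) is a hypothetical illustration, not how the Amsterdam garage actually works.

```python
from dataclasses import dataclass


@dataclass
class RetrievalRequest:
    """Hypothetical request to bring a car up from underground storage."""
    user: str
    called_at: int        # minutes since an arbitrary reference time (assumed unit)
    present: bool         # is the user physically waiting at the exit?
    pallet_distance: int  # assumed cost of moving this car's pallet to the exit


def priority_key(req: RetrievalRequest) -> tuple[int, int, int]:
    # Lower tuples sort first: people on site before remote callers,
    # then cheaper moves, then earlier calls as the final tie-breaker.
    return (0 if req.present else 1, req.pallet_distance, req.called_at)


def order_requests(requests: list[RetrievalRequest]) -> list[str]:
    """Return user names in the order the (hypothetical) system would serve them."""
    return [r.user for r in sorted(requests, key=priority_key)]


queue = [
    RetrievalRequest("remote caller", called_at=0, present=False, pallet_distance=5),
    RetrievalRequest("walk-in", called_at=3, present=True, pallet_distance=2),
]
print(order_requests(queue))  # ['walk-in', 'remote caller']
```

The design question from this week shows up directly in the key function: a strict "present first" rule is efficient, but it can keep pushing a remote caller back, which is exactly the kind of decision the system would need to explain.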

It's similar to modern elevator systems in skyscrapers, where you choose your destination floor before entering the elevator. This allows the system to optimize everyone's journey by grouping passengers according to the floors they travel to. It also disconnects your actions from the behavior of the automated system.
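As a rough illustration of that grouping idea, the Python sketch below assigns passengers who entered their destination in the lobby to cars, keeping everyone for the same floor together and filling cars with neighbouring floors so each car makes few stops. The function name, the capacity limit, and the greedy strategy are all assumptions for illustration; real destination-dispatch controllers use far more elaborate optimization.

```python
from collections import defaultdict


def dispatch(requests: list[tuple[str, int]], num_cars: int,
             capacity: int) -> dict[int, list[str]]:
    """Hypothetical greedy destination dispatch: (name, floor) -> car number."""
    if num_cars < 1:
        raise ValueError("need at least one car")

    # Group passengers by the destination floor they entered in the lobby.
    by_floor: dict[int, list[str]] = defaultdict(list)
    for name, floor in requests:
        by_floor[floor].append(name)

    assignments: dict[int, list[str]] = {car: [] for car in range(num_cars)}
    car = 0
    # Walk floors in ascending order so each car serves a band of nearby floors.
    for floor in sorted(by_floor):
        group = by_floor[floor]
        if len(group) > capacity:
            raise ValueError(f"group for floor {floor} exceeds car capacity")
        # Move to the next car when this floor's group would not fit.
        if assignments[car] and len(assignments[car]) + len(group) > capacity:
            car += 1
            if car >= num_cars:
                raise ValueError("not enough cars for this round")
        assignments[car].extend(group)
    return assignments


lobby = [("A", 12), ("B", 3), ("C", 12), ("D", 4), ("E", 3)]
print(dispatch(lobby, num_cars=2, capacity=3))
# {0: ['B', 'E', 'D'], 1: ['A', 'C']}
```

Car 0 only stops at floors 3 and 4, and car 1 goes straight to 12. The passengers never chose a car themselves, which is the disconnect between action and system behavior described above.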

This can become even more intelligent, using not only the current state of the different elevators but also patterns from the past and predictive knowledge from similar elevator systems. Then we have predictive relations that create operating strategies based not only on the context but also on expected behavior.

The behavior of the thing (elevator, parking automat) is disconnected from the user's control. This raises interesting questions about user experience and trust in AI systems. How do we design these systems to balance efficiency, user understanding, and intentions? We will need conversational AI interfaces that can explain decisions and offer users the possibility to steer, as well as tangible moments of interaction.

For new subscribers or first-time readers (welcome!), thanks for joining! A short general intro: I am Iskander Smit, educated as an industrial design engineer, and have worked in digital technology all my life, with a particular interest in digital-physical interactions and a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things, and I organise ThingsCon. I call Target_is_New my practice for making sense of unpredictable futures in human-AI partnerships. That is the lens I use to capture interesting news and share a paper every week.

Notions from the news

What was last week's biggest news (in the context of this newsletter)? Australia's social media ban for under-16s? It is an interesting development and might be good for setting a societal reference, but it should not shift responsibility away from the makers of toxic social services.

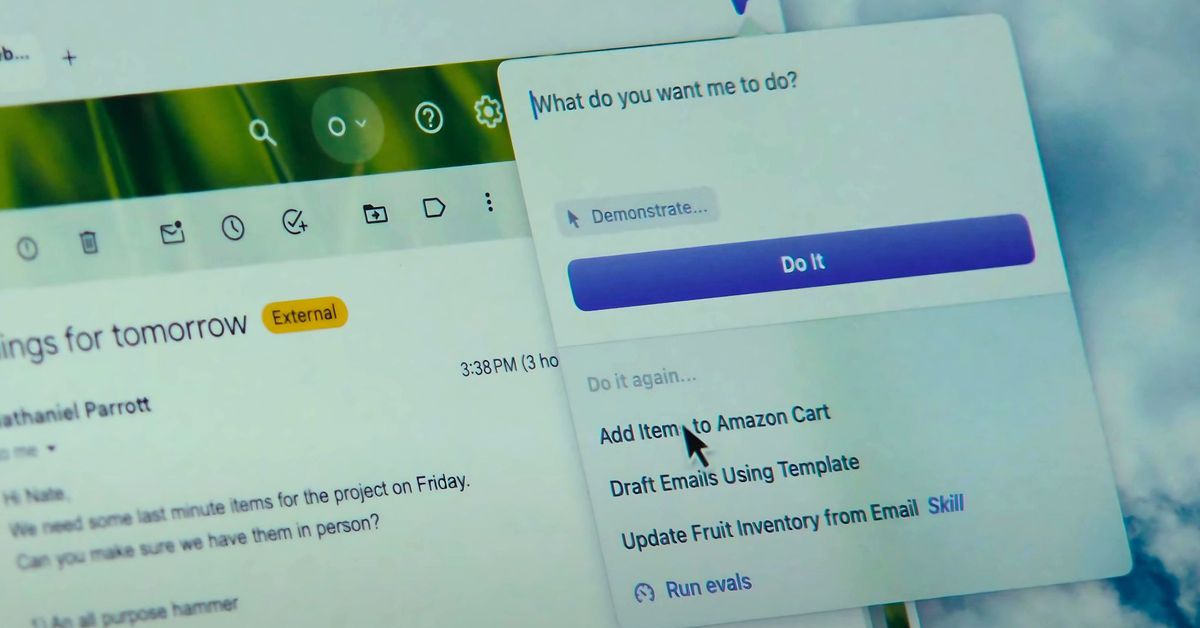

Agentic AI remains the hot topic of this quarter and will stay hot in next year's products, like this new agentic browser from Arc. The AI Daily Brief podcast predicts that we will use agents in a totally different way.

Human-AI partnerships

I also heard this elsewhere as a topic in a conversation on agentic AI: AIs are being built to verify and validate the output of other AIs, all within something that appears to the outside world as a single AI.
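A minimal Python sketch of that pattern, assuming one component drafts an answer and another independently checks it, while callers only ever see a single `answer()` method. `CompositeAgent`, `toy_generate`, and `toy_verify` are hypothetical stand-ins; no real model or agent framework is involved.

```python
from typing import Callable, Optional

Generator = Callable[[str, int], str]  # (task, attempt) -> draft answer
Verifier = Callable[[str, str], bool]  # (task, draft) -> accepted?


class CompositeAgent:
    """Looks like one AI from the outside; inside, one part drafts and another checks."""

    def __init__(self, generate: Generator, verify: Verifier, max_attempts: int = 3):
        self.generate = generate
        self.verify = verify
        self.max_attempts = max_attempts

    def answer(self, task: str) -> Optional[str]:
        for attempt in range(self.max_attempts):
            draft = self.generate(task, attempt)
            if self.verify(task, draft):
                return draft  # only verified output leaves the agent
        return None  # nothing passed verification; a human could take over here


def toy_generate(task: str, attempt: int) -> str:
    # Hypothetical "model" that gets the sum wrong on its first try.
    return "5" if attempt == 0 else "4"


def toy_verify(task: str, draft: str) -> bool:
    # Hypothetical checker that recomputes "a+b" independently.
    a, b = task.split("+")
    return draft == str(int(a) + int(b))


agent = CompositeAgent(toy_generate, toy_verify)
print(agent.answer("2+2"))  # 4
```

The rejected first draft never becomes visible from outside, which is precisely why such a system looks like one AI, and why it matters who defines what the verifier accepts.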

Who or what will define the behavior of our (agentic) AI? We do! At least, that is the argument in Modem's new article on Digital Doubles.

Robotic performances

This collection of 10 robotic developments from last month shows how diverse the term "robot" still is. It reminds me that I like to focus here on robotic things (not humanoids), and not on their function but on their interplay with humans. Just a note to myself 😄

Immersive connectedness

In other news, some small product updates: Google is integrating more and more Waze functions into its core products.

Tech societies

Google Search is already in decline. Are we slowly but surely entering a new era in how we use the internet?

DeepSeek is the Chinese AI model that seems to beat OpenAI's o1.

Developments over the decades: Peter's piece triggers thinking. I agree with the steps and like the twofold framing of the 10s and 20s. It might be nice to have that for every decade: a source and an interface. The 80s were about sandboxes & hardware, and the 90s about services & software. Network & web followed in the 00s. So what will be next? Something like protocols & conversations.

Year endings, new year ahead

December has started, and with it that time of year: year reviews are popping up, and trends are being predicted. This is an example with a different angle: the rest of the world.

Paper for the week

Moral Crumple Zones: Cautionary Tales in Human-Robot Interaction

Analyzing several high-profile accidents involving complex and automated socio-technical systems and the media coverage that surrounded them, I introduce the concept of a moral crumple zone to describe how responsibility for an action may be misattributed to a human actor who had limited control over the behavior of an automated or autonomous system.

Elish, Madeleine Clare, Moral Crumple Zones: Cautionary Tales in Human-Robot Interaction (pre-print) (March 1, 2019). Engaging Science, Technology, and Society (pre-print), Available at SSRN: https://ssrn.com/abstract=2757236 or http://dx.doi.org/10.2139/ssrn.2757236

Looking forward

The coming week looks like the last one, but with ThingsCon even closer 🙂

I will not have time to visit any other events, but I will have a short look (and a meeting) at the three-day Immersive Tech Week. The morning panels are especially interesting, in case you are planning to go. Sensemakers has a DIY edition.

Enjoy your week!