Weeknotes 248; the fundamental nature of AI

Exploring the AI Barbenheimer moment, new personal software paradigms, xenobots and much more, but not too much on X.

Hi y’all! Happy Barbenheimer…

I am not yet part of this meme, but I might be soon, as both movies are on my watchlist. The Oppenheimer movie is, by the way, often linked to the state of AI. You can wonder whether a similar Manhattan Project is underway now. Still, the aim of gaining a competitive advantage at the national level and the potential destructive impact make the comparison not so strange.

I have to say that I still believe AI will not rule or directly threaten our lives any time soon, but the impact of AI systems and tools on how we as humans play out conflicts, and life in general, might create a shift in agency and dependency. In that sense, control of the biggest AI in the 21st century might be comparable to what control of the biggest bomb was in the 20th century…

The future of AI was also a topic in last Sunday's conversation in Zomergasten on Dutch television with Thomas Hertog, a famous cosmologist who worked closely with Stephen Hawking for many years. The conversation dealt with humanity's relationship with fundamental research, and the concept of artificial physics came up as a way for people to relate to, and learn about, the fundamentals of physics. Will AI deliver the next Einstein, inventing new fundamental science? It made me think of another angle that was close to the conversation: will there be a moment when artificial intelligence behaves as if it follows fundamental laws of nature? Fundamental AI laws. Do we need to develop a fundamental science to understand AI, just as we need fundamental science to understand life and nature?

The whole three-hour conversation was quite interesting, so if you understand Dutch and have not watched it yet, check it out...

Another tip for Dutch speakers, as a vacation read: the summer special of De Groene has Marleen Stikker as guest editor, discussing AI for all under the title “Other Intelligence”. I have not read it all yet, so I cannot judge whether close followers of AI developments will learn much, but it covers a nice range of topics.

I am writing this while we collectively say goodbye to a once-loved conversational companion: the blue bird of Twitter… I do not intend to add much to all the memes and commentary on this move. In principle, I am not against rethinking a service like Twitter, which had been changing its face for some time, even before Musk took over. But this is so clearly a move away from the real-time conversational engine that made so many connections, shortened distances and levelled playing fields, and at the same time derailed from that positive place into the ultimate amplifier of a polarised media space… With X, we lose all kinds of references to ‘tweeting’ and its derived concepts. X seems more like a foundation for services that need interactions between people; the messaging protocol will probably be used mainly for sending functional data. But OK, something to return to later, once we have seen more of the implementation plans…

Last week was the academic conference on conversational AI, CUI 2023, with a lot of interesting papers; see the whole list here. I did not have the chance to go through them all, but following some attendees on X, like @mlucelupetti, gave some good pointers. One was this short provocation on the creative capabilities of AI, which made me think it might be more about the creative attitude: being bold while humble, daring while aware of the consequences. That attitude might help in successfully reframing AI creatively...

Summer events

I could find only a few events to visit during this summer slowdown.

- Tomorrow, UX London will welcome Matt Webb to talk about his new plans: Acts Not Facts. Too bad there is no way to watch online, so it is only for people nearby…

- Also in London, but with the option to join via Zoom: the Design for Planet Collective, tonight. https://www.meetup.com/design-for-planet-meetup/events/294723475/

- And of course: save the date or RSVP right away for ThingsCon Salon on 6 September in Rotterdam.

Notions from the news

AI safeguards are hot, and seven large AI companies subscribe to that notion through a commitment made to the US government. Is this a good step or just another form of ethics washing?

SAP, the classic ERP giant, is doing the Microsoft trick by investing in several generative AI startups.

Google is creating an AI tool that can write news articles. It feels like something that already exists, but alas.

Opening up the models? Meta announced that Llama 2 is open source; however, there are limits, both in what is open and in who can use it (a cap of 700 million monthly active users).

Meanwhile, OpenAI is adding new features: custom instructions that let users make ChatGPT's answers more specific to their own context.

No surprise: Apple is testing ChatGPT-like tooling. It seems it has already been built for internal use, to keep employees from using competitors' tools.

A model based on all the metadata shared while using your devices, combined with large language models, might make sense and could deliver a different take.

The physical part of AI: 3 questions on AI semiconductors. Is there more than Nvidia?

Self-fulfilling generative AI: training models on synthetic data lowers costs but might also lower quality.

Some new AI tooling to test, debug and monitor LLM applications

What is time? It is a perception that can be manipulated now.

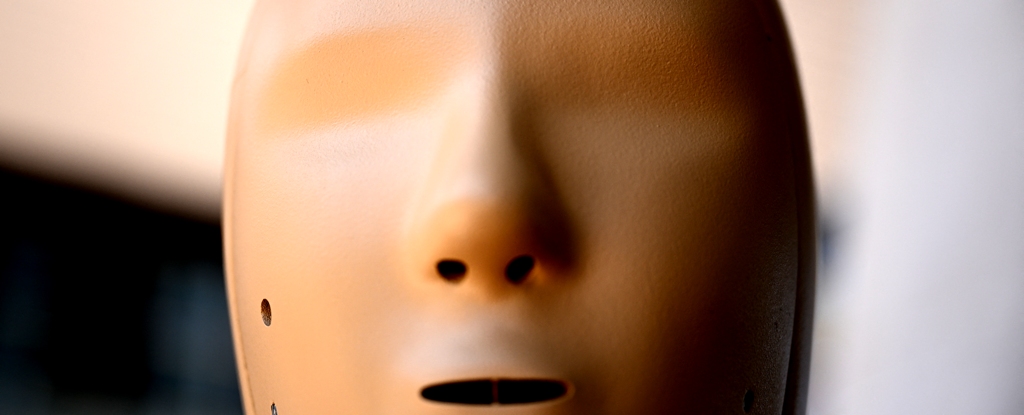

Another humanoid is taking shape, resembling the Tesla look: the GR-1, with a weird choice to display text on its head.

A different type of robot: “Xenobots”, self-replicating living robots. It is a research project still in progress.

The future of building:

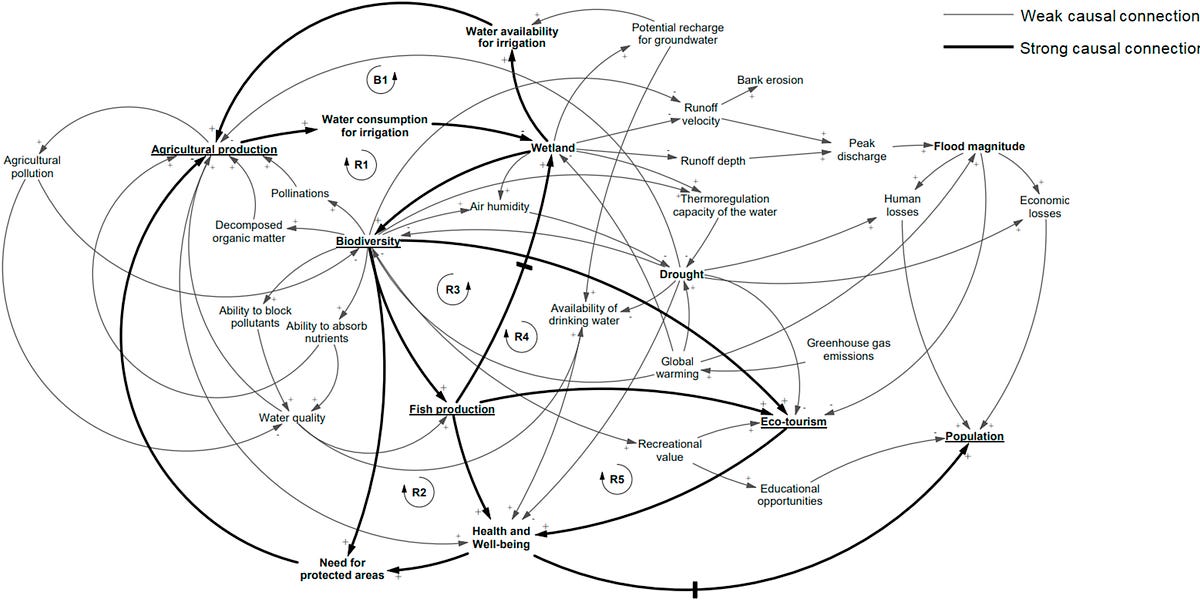

An exploration of the role of feedback loops and the importance of this kind of reflective learning.

The AI summary of this: “Ethan Mollick argues that there is an opportunity to democratize access to education and use AI's transformative power, but this requires a new vision for education and work systems and a recognition of the genuine and widespread disruption brought by AI.”

I believe in the power of different AIs with different perspectives working together as a team to tackle problems. A paper now demonstrates this:

Is there a new type of software beyond the SaaS era: hyper-personalised apps created as forks of a master version and adjusted with AI coding support? Dan Shipper is exploring this concept.

Should we move back to the simple Web 1.0 to break the influence of big companies' algorithmic services and return to an Internet of people? It feels like there are other routes, such as personal tools.


Tesla is changing strategy: it is opening up its Supercharger network to other brands, creating a service proposition (and a potential ‘EV dongle town’), and it is also licensing its Full Self-Driving technology. On top of that, it is investing heavily in the supercomputer that trains its self-driving AI.

General Motors has developed technology that lets autonomous vehicles communicate with human drivers, signalling their path and intentions to other drivers through notifications sent to infotainment screens or smartphones.

Or should autonomous driving be based on nature-inspired AI?

Are the current heatwaves worldwide a signpost of climate change? Robot technology is being used to create ‘crash test dummies’ for heatwaves.

Paper for this week

A designerly look at the AI discourse: “Risk and Harm: Unpacking Ideologies in the AI Discourse”.

The study employs a humanistic HCI methodology, applying narrative theory to HCI-related topics and analyzing the political differences between FLI and DAIR, as they are brought to bear on research on LLMs. Two conceptual lenses, “existential risk” and “ongoing harm,” are applied to reveal differing perspectives on AI’s societal and cultural significance.

(…)

The immediate consequences of longtermism lead to neglecting marginalized communities, exacerbating social inequalities, and undermining efforts to address ongoing harm and human rights violations. We believe that HCI’s engagement with AI and its societal impact should focus on these harms and advocate for the rights and voices of the marginalized. Making design choices that contribute to this focus should become standard practice.

Ferri, G., & Gloerich, I. (2023, July). Risk and Harm: Unpacking Ideologies in the AI Discourse. In Proceedings of the 5th International Conference on Conversational User Interfaces (pp. 1-6).

https://dl.acm.org/doi/10.1145/3571884.3603751

See you all next week!

Some changes in my work focus; more on that later. These ‘low vacation times’ are not quiet anyway: I am preparing for new events, further Wijkbot iterations and more.

Have a great week!