Weeknotes 234; large language models as companions

Hi all! I hope you had a nice Easter break. I took the chance to read a bit more from last week's news articles and watch some videos. One of them was **The A.I. Dilemma** by Tristan Harris and Aza Raskin, which compares the impact of generative AI to that of the Manhattan Project and the atom bomb.

Gollem class AI

That this is moving fast and holds dangers is interesting enough to watch on its own, as is the double exponential curve of AI training AI with humans in and out of the loop. But it is more interesting to explore how to organise around the consequences. Democracies might need to be reshaped.

An example of the impact: AlphaPersuade. AI is not only training to become a better player, as in AlphaGo. It is training against itself to become a better persuader.

Sacasas writes about an apocalyptic AI this week. “The madman is not the man who has lost his reason. The madman is the man who has lost everything except his reason,” he quotes. Is there an AI apocalypse to be expected, and what would that mean? “AI is apocalyptic in exactly one narrow sense: it is not causing, but rather revealing the end of a world.” Here, AI more than robotics is driving these developments. AI safety is also this week's topic for OpenAI, which published its vision. And we need global oversight.

More buzz of the week was about Twitter and Substack. There is an interesting episode of Sharp Tech (paywall) on the strategies in the Twitter and Substack feud: Clown Car History Lessons, Both Sides of the Twitter-Substack Fight, Parenthood Tech Strategies.

It reminded me of earlier moments spent thinking about Twitter strategies, and how it should have been a messaging platform for the real world…

Events

I had to miss my planned attendance at the meetup last Wednesday; a proposal needed to be completed. So I can’t report on that. Check the video here, though.

This week is the STRP festival in Eindhoven, including the ThingsCon Salon on Listening Things, which we are organising ourselves this Friday.

And other events that pop up:

- Data & Drinks in Amsterdam on Thursday. Never been there, seems a big one: https://www.meetup.com/data-drinks/events/292108501/

- London IoT next Tuesday in person again https://www.meetup.com/iotlondon/events/292386828/

- v2 has the monthly open lab on 14 April (online from Rotterdam) https://v2.nl/events/open_lab-2023-iv

- A little bit further ahead: CHI is something I would go for if I had the time and budget :) This time in Europe, the pleasant city of Hamburg. https://chi2023.acm.org/

- Same for the Salone. Never been there; the side program is often even more interesting, I understand. Check Tobias Revell if you are there.

- Also, next week, the Hmm at Tolhuistuin Amsterdam on 19 April on generative podcasts.

Notions from last week's News

Let’s start with the weekly AI updates. On the application side, so to speak, we see integrations of AI in Expedia and in Siri.

Following the presentation of Aza Raskin and Tristan Harris, language is the core element in generative AI development. Multiple new systems are popping up.

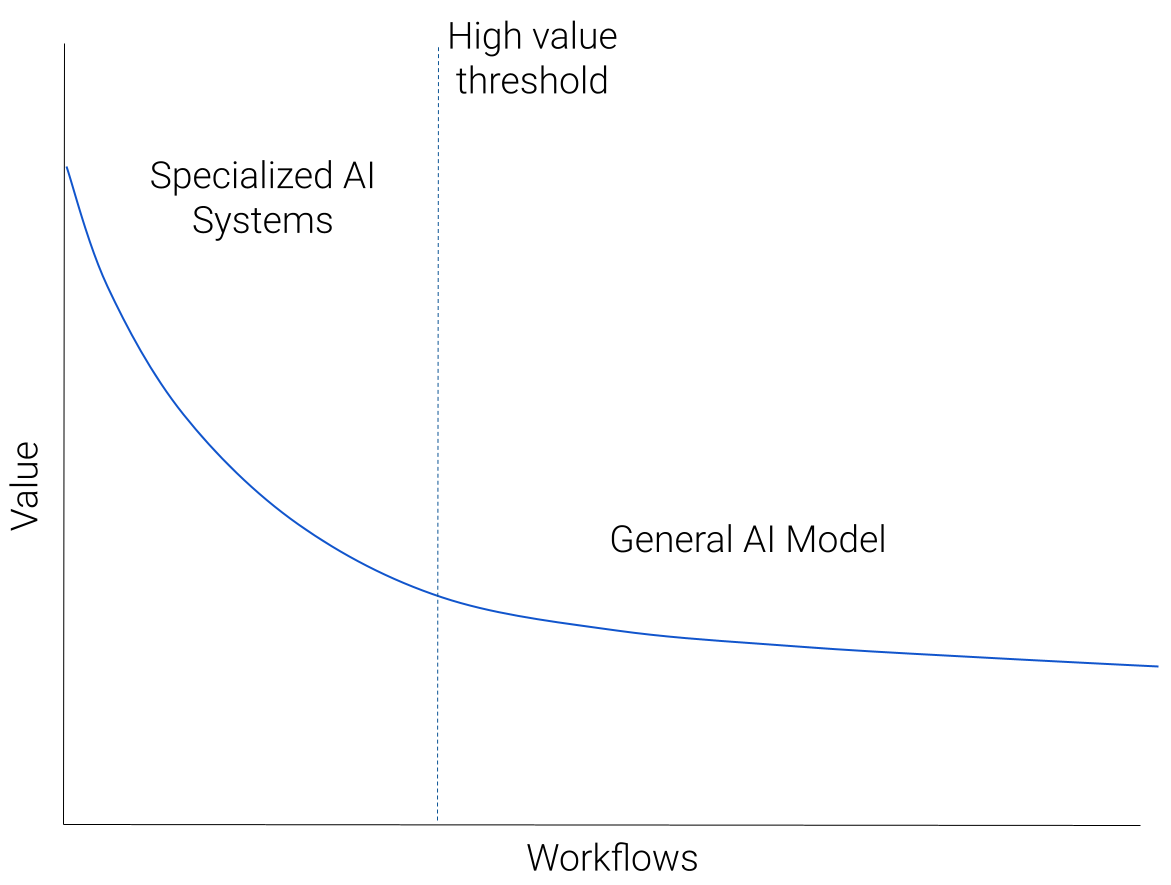

Does one Large Model Rule Them All? The three authors are excited about the developments and believe in a diverse landscape of AI components with a few general AI models.

The role of ChatGPT in education is interesting to explore. MIT did this and concluded that it will change education rather than destroy it.

The companionship of AI and humans is a hot topic this week too. The critical writer Ethan Mollick describes a companion for thinking. “it's important to remember that it is a tool to support human decision-makers, not replace them.” That might indeed be true for now and for the near future.

Speaking of the feel of companionship, I mentioned last week that I did some quick programming with GPT-4. This is also possible with voice commands. And it can create a GitHub repo and deploy it too.

Jarvis is now reality.

My GPT-4 coding assistant can now build & deploy brand new web apps!

It initializes my project, builds my app, creates a GitHub repo, and deploys it to Vercel.

All from simply using my voice. pic.twitter.com/0D5UbOjT5s

— Mckay Wrigley (@mckaywrigley) April 3, 2023

The relationship with AI is a topic for careful consideration. An example is how easily Google’s Bard can be seduced into lying.

Do LLMs have agency? Gordon Brander argues that feedback from humans is feeding the agency of machines.

Mike Barlow from O’Reilly adds the danger of bias, especially bias that is in the eye of the beholder and, one step further, bias that is hidden.

Ezra Klein pleads for publicly initiated development rather than leaving it to the big companies.

A sketch of market parties and categories.

Excel spreadsheets can make a difference in shaping the intelligence of the AI.

Meta is introducing a system to isolate objects from visually sensed imagery. Good for targeted advertising, of course.

A returning notion: AI is physical too, as the processors show. Happy WebGPU day.

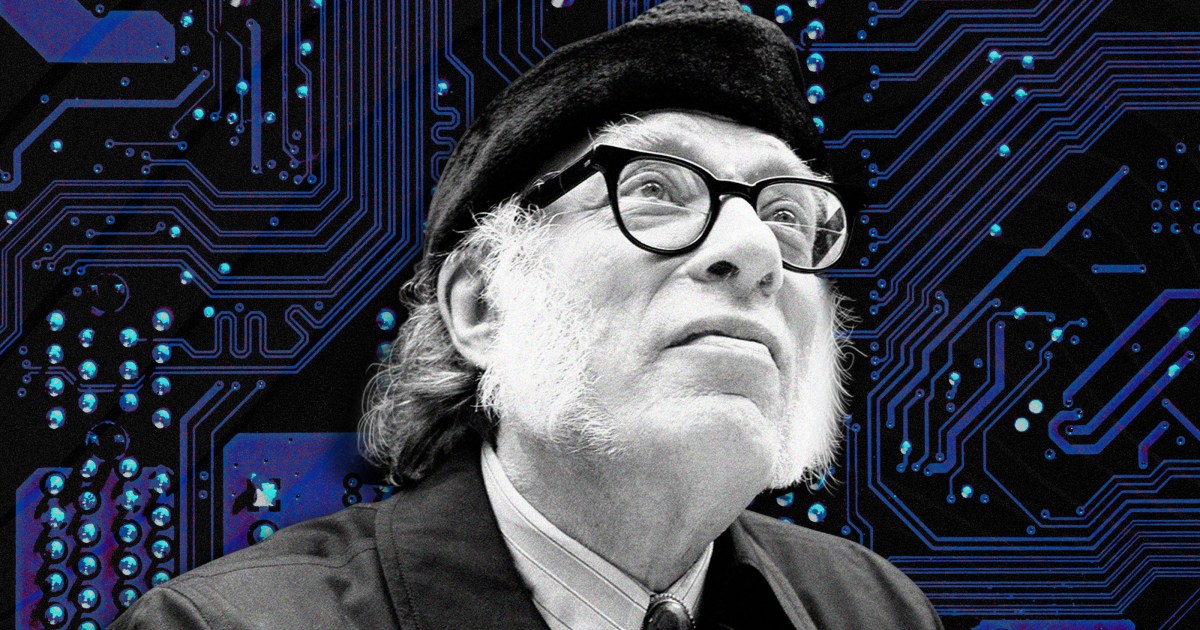

The identity of AI is also influencing robotics. Jonny Thomson, a philosopher, proposes a fourth law of robotics (next to the original Asimov rules): a robot must identify itself.

Time for some structure in our lives after all these items. The grid is famous; good grid design is about learning the deeper structures.

Still an issue: trust in IoT devices. Will these stop working sooner than you expect? Bricks or bricked?

Gary Marcus is looking ahead to GPT-5, which he expects will not be completely different: the same way of working, the same lack of real understanding, and a better-pretending machine.

Mind control is getting near, as long as we are connecting control to agency. Graphene is the promise.

Buildings and complexes are designed to be recognisable from different angles, such as from a satellite. It makes me think: why not create a building resembling a QR code from above?

Paper for this week

This week's paper again aligns with the topics discussed: language as the core, and companionship. HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace

It is quite common nowadays to introduce new products and features with an academic(-like) paper.

Considering large language models (LLMs) have exhibited exceptional ability in language understanding, generation, interaction, and reasoning, we advocate that LLMs could act as a controller to manage existing AI models to solve complicated AI tasks and language could be a generic interface to empower this. Based on this philosophy, we present HuggingGPT, a framework that leverages LLMs (e.g., ChatGPT) to connect various AI models in machine learning communities (e.g., Hugging Face) to solve AI tasks. Specifically, we use ChatGPT to conduct task planning when receiving a user request, select models according to their function descriptions available in Hugging Face, execute each subtask with the selected AI model, and summarize the response according to the execution results.

Shen, Y., Song, K., Tan, X., Li, D., Lu, W., & Zhuang, Y. (2023). HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace. arXiv preprint arXiv:2303.17580.

https://arxiv.org/abs/2303.17580
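
To make the four steps from the abstract a bit more tangible (task planning, model selection, task execution, response summary), here is a minimal sketch in Python. It is not the authors' implementation. Everything in it is an assumption for illustration: the model names, the tiny registry, and the simple string rules that stand in for ChatGPT and for the Hugging Face models. No real API is called.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Model:
    """A hypothetical stand-in for a hosted model with a function description."""
    name: str
    description: str
    run: Callable[[str], str]


# Hypothetical registry; a real system would read descriptions from a model hub.
REGISTRY = [
    Model("caption-model", "describe an image in text",
          lambda task: f"[caption for: {task}]"),
    Model("translate-model", "translate english text to dutch",
          lambda task: f"[translation for: {task}]"),
]


def plan_tasks(request: str) -> List[str]:
    """Step 1, task planning. A real controller would ask an LLM;
    here the request is simply split on the word 'then'."""
    return [part.strip() for part in request.split(" then ") if part.strip()]


def select_model(task: str, registry: List[Model]) -> Model:
    """Step 2, model selection. Pick the model whose description
    shares the most words with the task text."""
    task_words = set(task.lower().split())
    return max(
        registry,
        key=lambda m: len(task_words & set(m.description.lower().split())),
    )


def execute(task: str, model: Model) -> str:
    """Step 3, task execution with the selected model."""
    return model.run(task)


def summarize(results: List[Tuple[str, str, str]]) -> str:
    """Step 4, response summary. A real controller would let the LLM
    write this; here the results are joined line by line."""
    return "\n".join(f"{task} -> {name}: {output}" for task, name, output in results)


def handle_request(request: str, registry: List[Model]) -> str:
    """Run the four steps in order for one user request."""
    results = []
    for task in plan_tasks(request):
        model = select_model(task, registry)
        results.append((task, model.name, execute(task, model)))
    return summarize(results)


if __name__ == "__main__":
    print(handle_request(
        "describe the image then translate the text to dutch", REGISTRY))
```

The point of the sketch is the shape: language is the interface at every step, and the controller only needs the textual descriptions of the models to decide which one to call.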