Weeknotes 251 - generative creativity as physical waveforms

Notions from the news and thoughts on AI, mundane robotics, and new form factors for generative creativity.

Hi y’all! Summer has started (again) here after a period of miserable weather. It is striking how we get used to slightly higher base temperatures but still complain as much as ever (we, as in the Dutchies). In the meantime, terrible climate-driven weather stress is happening all over Europe; it started in the deep South, moved to the middle, and last week hit the North.

In other news: congrats to everyone who likes hip-hop. 50 years; does that change the character of the music? And is it now settled, part of the establishment? The technology of sampling - a signature part of hip-hop, with which a new instrument was born - is of course more common and easier to apply than ever. For those who like to dive into history: 50 samples in 30 minutes (via the all-time favourite blog of Kottke). I just did this while typing; it is a ballet of waveforms that must be considered in modern DJ-ing. It makes me think of the smart waveform applications in SoundCloud that capture a whole social system. And will the waveform become as important as the progress bar became for experiencing video media, influenced by YouTube? (I remember a nice presentation on this topic at my first SXSW in 2011.) Can the character of Spotify's AI DJ be read from the waveforms? And will we get new waveform-based AI-generated music like this?

I think the rest of the week was a bit low on thought-provoking listens. The LK-99 saga was the topic of the podcasts, as was Zoom (AI needs your data too). There was a good exploration of Uber’s impact on cities and urban crises in This Machine Kills, and of the unintended consequences of regulations around Section 230 (US-centric) in the latest Radiolab, which is signalling culture; would it be so extreme without the deeply rooted claim culture? And the unrealistic fear of limitations and boundaries. Taking responsibility for edge cases should be possible without breaking the baseline of platform functioning. Some are trying to fix this upfront with the new AI reality.

Too bad to hear that Stacey Higginbotham has decided to stop the Internet of Things Podcast. It was always a great listen for keeping up to date on the latest IoT (home) devices, although the developments are not always that inspiring; still waiting on some bigger changes. I especially liked the increasingly critical reflections on the IoT, with its data breaches and, more generally, sloppy designs. We will miss this; Stacey will likely keep signalling tech.

Events to notice

Still peak summer for tech meetups.

- As mentioned last week, the UX Crawl starts tomorrow. They have reached their capacity, though.

- And also, this Wednesday - Sensemakers DIY

- If I were in London, I would check this expo in the Science Gallery

- 18 August - 3 September: ZigZagCity Rotterdam, another type of event, in case you are into architecture.

The route passes Afrikaanderplein, where we will show some of the Wijkbot projects this Sunday as part of the preview of the “Grondstoffenstation”.

- We added the RSVP for the ThingsCon Salon workshop.

Notions from the news

Any new AI assistants this week? Goodnotes adds handwriting features; with Scale you can test and evaluate LLMs; Amazon generates product descriptions for sellers; and with Augie you can make videos with AI clones of your voice. Share your prompts via PromptWave. Digital avatars generated from text. Also as deepfakes…

Faster, smarter, cheaper: for those who want to dive into the latest from Anthropic.

The AI critique for this week is from a couple of well-known opinion makers (as in shared before). Like Gary Marcus with doubts: “If hallucinations aren’t fixable, generative AI probably isn’t going to make a trillion dollars a year. And if it probably isn’t going to make a trillion dollars a year, it probably isn’t going to have the impact people seem to be expecting. And if it isn’t going to have that impact, maybe we should not be building our world around the premise that it is.”

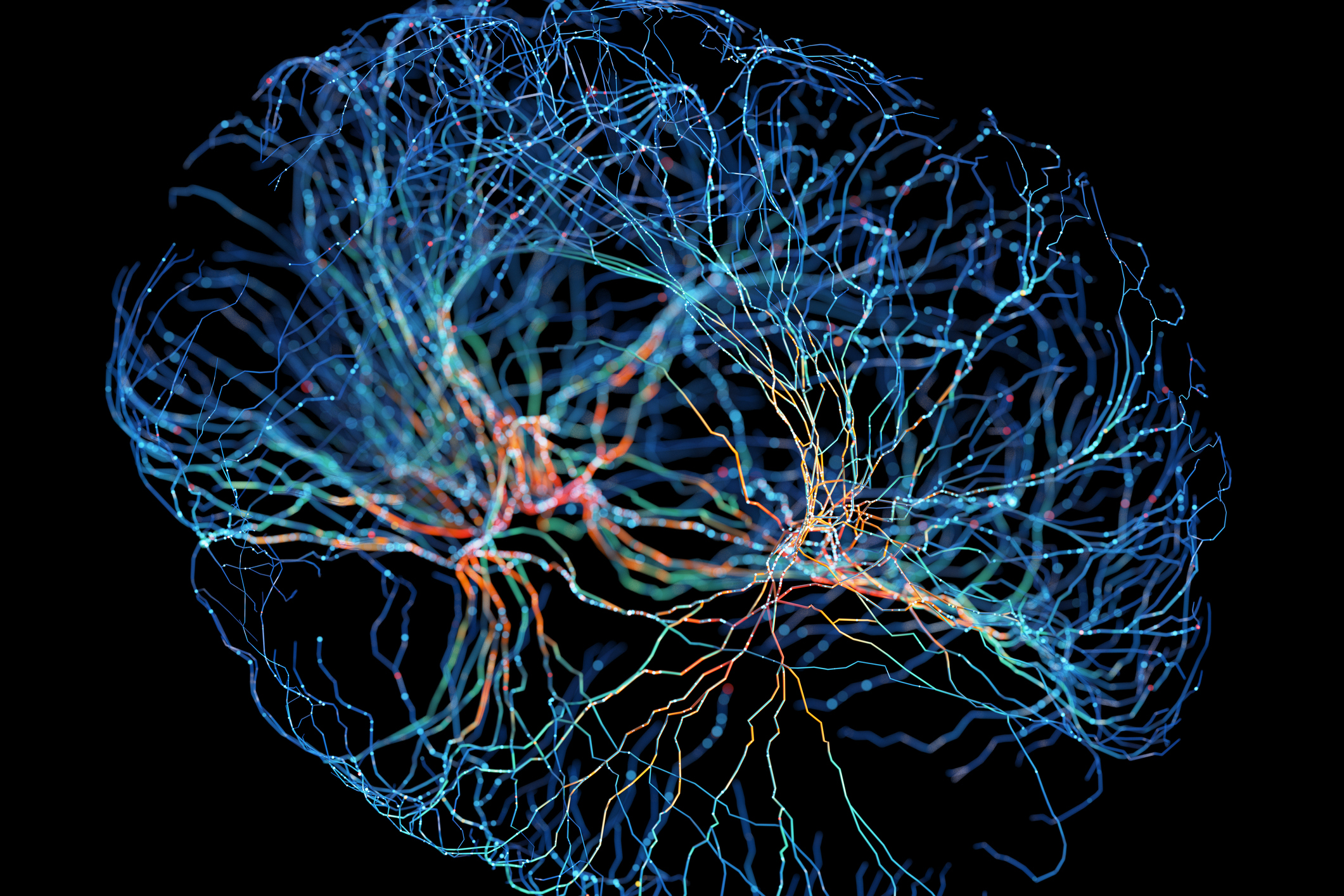

And Ethan Mollick believes in the power of AI as a creativity-enhancing machine: “LLMs are very good at this, acting as connection machines between unexpected concepts. They are trained by generating relationships between tokens that may seem unrelated to humans but represent some deeper connections. Add in the randomness that comes with AI output, and the result is, effectively, a powerful creative ability.”

How will this work for architects?

Subconsciousness on a chip that feels related, or more an extended version creating a superpower.

“Meet the artists reclaiming AI from big tech – with the help of cats, bees, and drag queens.”

In Time the impact on society is carved out:

Design for trust in a generative world is triggering new challenges.

China is drafting rules for facial recognition. Are these rules meant to protect citizens or to provide a stable basis for government surveillance?


What do you think? Based on these pavilion designs, will you set aside extra savings to visit the World Expo 2025?

In the mundane robotics category, Serve Robotics has attracted some serious investment and serves Uber Eats. Is it still too early?

This title triggers thinking. This is indeed the future; we are teaching our robots to grow up by interacting with them. And at the same time, do we have these learning robots already?

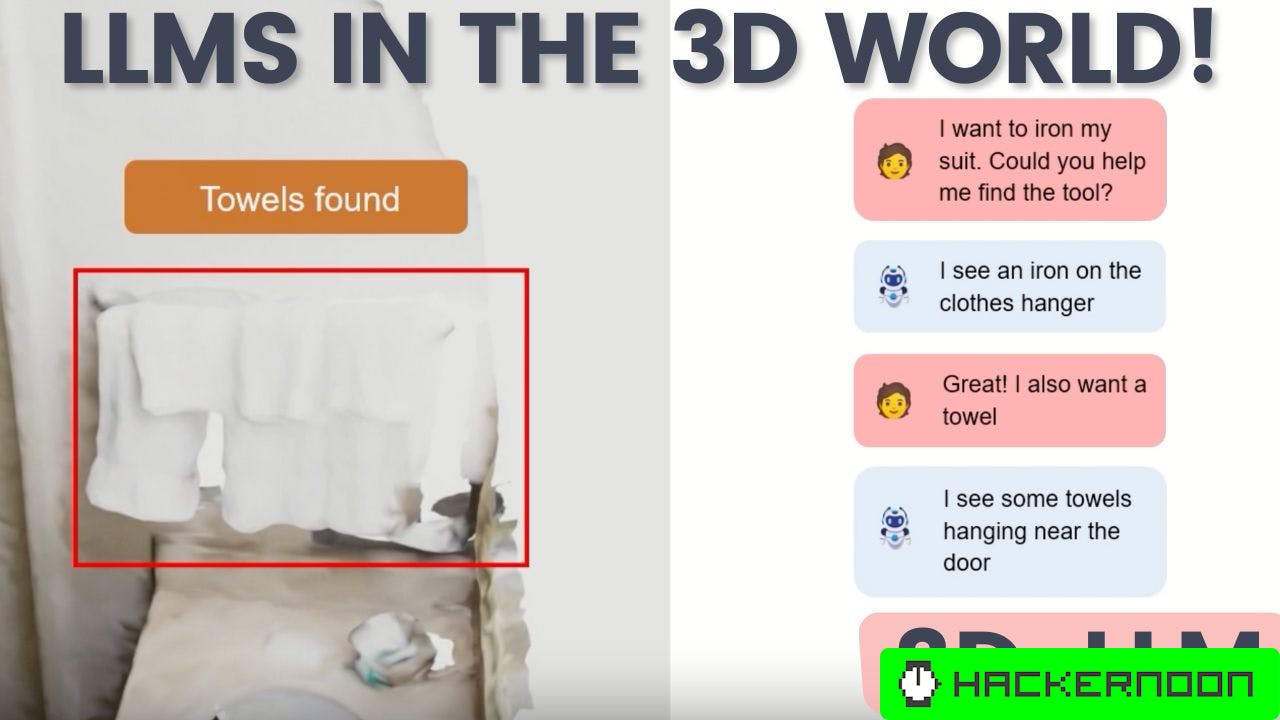

Sounds intriguing: 3D-LLM

In San Francisco, robot taxis are allowed. Not everyone trusts them to behave properly:

Is the video call revolution dead, indeed? Maybe as a revolution, it is, but it has gained a firmer spot in our communication toolbox than it had before the big reset. Remember the times we only had Skype? That was always buggy. It is still buggy, but we have shifted from the audio call to the video call as default.


In case you are in LA, this is an interesting exhibition. Or at least an interesting premise for an exhibition. “Glitch Ecology and the Thickness of Now at Honor Fraser Gallery, a timely exhibition that explores the roots of our relationship to ecosystems.”

3D printing at home, will it become a reality after all?

A long read on protocol narratives by Venkatesh Rao; summary by Reader AI: “The essay explores the concept of narrative protocols, which are never-ending stories driven by a narrative formula that generates novelty over an indefinite horizon. The author argues that focusing on short-term actions rather than overarching purposes is beneficial during crises. The essay also discusses the connections between narrative protocols and computation, life processes, and economic narratives.”

The elite colleges are behind India’s tech colleges.

A lovely critical maker's project: creating an analogue Apple Watch from electronic waste.

Paper for this week

It was in the news a bit last week: this paper on human-machine co-performance, or partners in crime: Combining Human Expertise with Artificial Intelligence: Experimental Evidence from Radiology.

Humans assisted by AI predictions could outperform both human-alone or AI-alone. We conduct an experiment with professional radiologists that varies the availability of AI assistance and contextual information to study the effectiveness of human-AI collaboration and to investigate how to optimize it. Our findings reveal that (i) providing AI predictions does not uniformly increase diagnostic quality, and (ii) providing contextual information does increase quality.

Agarwal, N., Moehring, A., Rajpurkar, P., & Salz, T. (2023). Combining Human Expertise with Artificial Intelligence: Experimental Evidence from Radiology (No. w31422). National Bureau of Economic Research.